Beyond Google: How AI Is Secretly Reshaping Your Website’s Search Visibility

Over the past couple of months, I’ve had numerous conversations with clients asking about optimizing their presence specifically for AI-driven search platforms and chatbots like ChatGPT and Claude.

Interest in this area has grown notably, and with good reason: since ChatGPT integrated web-based search capabilities, I’ve personally observed significant increases in traffic coming from these new AI-driven sources. Some of my clients now receive between 0.5% and 6% of their total organic traffic from AI-driven platforms, which shows how impactful this trend already is.

AI Traffic Patterns: The New Frontier

My recent data shows some interesting patterns in AI-driven traffic:

- B2B websites are receiving higher AI traffic levels, accounting for up to 5-6% of their total SEO traffic. Companies like Vercel are reportedly getting up to 5% of their new signups via ChatGPT.

- AI traffic is more prevalent on desktop, and in the US and other regions where AI adoption is greater (which tends to correlate with economic development, but is not limited to it).

- Traffic distribution is highly asymmetric compared to traditional search patterns: some sites get a lot, others get nothing.

One particularly interesting example is a B2B SaaS client whose presence in AI platforms is highly asymmetric compared to traditional search. While their Google rankings are solid, they rank exceptionally well on Perplexity, becoming the most cited source for many key queries, thus driving significant traffic. Notably, B2B SaaS is emerging as one of the sectors benefiting most substantially from AI-driven traffic.

Courtesy of one of my clients, this is what AI traffic can look like for a publisher, where AI still brings in less than 1% of total traffic (all channels).

When it comes to AI optimization—specifically optimizing your presence for Large Language Models (LLMs)—the truth is we’re still in the early days. We don’t know everything yet, but there are a few key aspects that we do know and should already be implementing to ensure we’re well-positioned in this emerging landscape.

See this example from Ahrefs, from early 2023:

to Vercel in early 2025:

The Beginner’s Guide to AI Optimization (AIO)

1. Allow crawling by LLMs

One of the first and most critical steps to optimizing for LLMs is ensuring your website is crawlable. Surprisingly, many websites currently block crawlers from these AI models, often inadvertently harming their potential visibility.

To illustrate, let me share a story from a company I previously worked for. The Chief Product and Technology Officer (CPTO) unilaterally decided, without considering expert guidance or available data, to block AI crawlers from accessing all websites owned by the company, simply because they disliked AI crawling the properties and distrusted OpenAI specifically.

Ironically, despite this stance, the company internally adopted OpenAI’s technology across various teams to enhance and power their own products. This approach was highly hypocritical and ultimately undermined their online visibility in AI-driven platforms in the long run.

Many websites are now blocking AI crawlers in two main ways:

- Through robots.txt: The transparent method that explicitly disallows certain bots.

- At the CDN level: A less transparent approach that can be opaque even to the companies implementing it.

To ensure your site is effectively optimized:

- Do not block LLMs via robots.txt: Explicitly allow crawling for all relevant bots, including but not limited to ChatGPT.

- Ensure your CDN isn’t blocking crawlers: Content Delivery Networks (CDNs) can unintentionally prevent bots from accessing content; double-check settings to avoid this.

- Avoid blocking important resources: Make sure CSS, JavaScript, images, and other essential resources are accessible.
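A quick way to sanity-check the robots.txt side is to run your rules through Python’s standard `urllib.robotparser`. This is a minimal sketch, not a full audit: the user-agent tokens in `AI_USER_AGENTS` are my assumption of the commonly documented ones (verify them against each vendor’s current documentation), and `blocked_agents` and the sample file are hypothetical names made up for illustration. It says nothing about CDN-level blocking, which you need to check separately.

```python
from urllib.robotparser import RobotFileParser

# Assumed user-agent tokens; confirm the current list in each vendor's docs.
AI_USER_AGENTS = ["GPTBot", "OAI-SearchBot", "ChatGPT-User", "ClaudeBot", "PerplexityBot"]


def blocked_agents(robots_txt: str, url: str, agents=AI_USER_AGENTS) -> list[str]:
    """Return the agents that the given robots.txt text forbids from fetching url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [agent for agent in agents if not parser.can_fetch(agent, url)]


# Hypothetical robots.txt that blocks one AI crawler and allows everyone else.
SAMPLE_ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""

if __name__ == "__main__":
    print(blocked_agents(SAMPLE_ROBOTS_TXT, "https://example.com/pricing"))
```

If the returned list is not empty for your key URLs, those crawlers are being turned away by your own rules.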

Facilitating crawler access isn’t just about helping LLMs understand your content; it’s also crucial when it comes to Retrieval-Augmented Generation (RAG) systems, where AI models directly retrieve and cite content from your web pages. Without proper access, your content simply won’t appear.

If you don’t believe OpenAI crawling is real, look at the bot hits for this ecommerce brand, as shared by Botify’s CEO, Adrien Menard:

2. Facilitate discovery via XML Sitemaps

Unlike Google Search Console or Bing Webmaster Tools, AI companies like OpenAI or Anthropic don’t yet offer webmaster consoles. This means you must make your site easy to discover by proactively facilitating crawling:

- Include sitemap URLs in robots.txt: Clearly specify your sitemap location.

- Unify your crawling rules: Ideally, have the same rules for every user agent to ensure consistent crawling behavior across platforms.
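Both points can be checked from the robots.txt text itself. The sketch below again uses the standard `urllib.robotparser` (its `site_maps()` method needs Python 3.8 or later). The helper names, the sample file, and the agent list are assumptions for illustration only.

```python
from urllib.robotparser import RobotFileParser


def declared_sitemaps(robots_txt: str) -> list[str]:
    """Return the Sitemap URLs declared in the robots.txt text (empty list if none)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.site_maps() or []


def agents_with_different_access(robots_txt: str, urls: list[str], agents: list[str]) -> list[str]:
    """Return agents whose allow/deny answers differ from those of a generic crawler."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    baseline = [parser.can_fetch("*", url) for url in urls]
    return [
        agent for agent in agents
        if [parser.can_fetch(agent, url) for url in urls] != baseline
    ]


# Hypothetical file: one sitemap, and stricter rules for a single AI crawler.
SAMPLE = """\
User-agent: *
Disallow: /admin/

User-agent: GPTBot
Disallow: /

Sitemap: https://example.com/sitemap.xml
"""
```

An empty result from `agents_with_different_access` means every listed agent gets the same treatment as a generic crawler for the URLs you tested.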

Given that Bing powers numerous AI-driven platforms like ChatGPT, Perplexity, Grok, and Copilot, it’s particularly disappointing that Bing Webmaster Tools (BWMT) doesn’t yet offer specific insights into AI-driven crawling. Nonetheless, investing in Bing optimization is increasingly synonymous with optimizing for AI visibility.

Bing’s growing importance: With Bing’s integration into Perplexity and other AI platforms, its role in the AI ecosystem is becoming increasingly significant. This integration is reshaping AI traffic patterns and crawling strategies, making Bing optimization more important than ever before.

3. Understand AI Crawling Characteristics

AI crawlers differ significantly from traditional search engines in several key ways:

- JavaScript rendering limitations: AI crawlers typically don’t render JavaScript, making client-side rendered content invisible.

- Unique crawling patterns: AI crawlers have distinct patterns that differ from traditional search engines.

- Less focus on real-time updates: AI crawlers don’t require the same level of current information as traditional crawlers.

- Diverse bot ecosystem: There’s a notable increase in activity from various AI bots, including RAG bots, training bots, and indexing bots.

4. Limit client-side rendered content

Client-side rendering can be problematic for LLMs. A recent analysis from Vercel highlights significant limitations in AI crawlers’ ability to execute JavaScript. Data shows that none of the major AI crawlers, including GPTBot and Claude, currently render JavaScript, even though they fetch JavaScript files (ChatGPT: 11.50%, Claude: 23.84% of requests). Any content relying solely on client-side rendering remains effectively invisible to these crawlers.

For critical content:

- Prioritize server-side rendering (SSR), Incremental Static Regeneration (ISR), or Static Site Generation (SSG).

- Maintain proper redirects and consistent URL management to avoid high rates of 404 errors, which frequently occur with AI crawlers like ChatGPT and Claude (each around 34%).

You can find more details in Vercel’s comprehensive report on AI crawler behavior.
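To get a rough feel for what a non-rendering crawler sees, you can check whether your key phrases exist in the raw HTML before any JavaScript runs. This is a minimal sketch with Python’s standard `html.parser`; `missing_phrases` and the two sample pages are hypothetical, and real crawlers may extract text differently.

```python
from html.parser import HTMLParser


class _VisibleText(HTMLParser):
    """Collects text nodes, skipping the contents of <script> and <style>."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def missing_phrases(raw_html: str, phrases: list[str]) -> list[str]:
    """Return the phrases that do not appear in the un-rendered HTML text."""
    parser = _VisibleText()
    parser.feed(raw_html)
    parser.close()
    text = " ".join(" ".join(parser.chunks).split()).lower()
    return [phrase for phrase in phrases if phrase.lower() not in text]


# Hypothetical pages: one rendered in the browser, one rendered on the server.
CSR_PAGE = '<html><body><div id="root"></div><script>render("Pricing plans")</script></body></html>'
SSR_PAGE = "<html><body><h1>Pricing plans</h1><p>Starts at $10</p></body></html>"
```

Feed it the response body you get from a plain HTTP fetch of the page, not the DOM you see in browser dev tools.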

5. Proactively manage indexation

Beyond passive crawling, proactively pushing your content to AI and traditional search indexes offers increased visibility and control:

- Implement optimized XML sitemaps and reference them in robots.txt.

- Use APIs like IndexNow and Bing’s URL Submission APIs: These allow you to directly signal new or updated content to search indexes.
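As an illustration of the IndexNow route, here is a minimal sketch using only the standard library. It follows the JSON POST format as I understand it from the public IndexNow documentation (`host`, `key`, `keyLocation`, `urlList`), so double-check the details there before relying on it. The function names, the key value, and the default key file location are assumptions.

```python
import json
import urllib.request
from urllib.parse import urlparse

INDEXNOW_ENDPOINT = "https://api.indexnow.org/indexnow"


def build_indexnow_payload(host: str, key: str, urls: list[str], key_location: str = "") -> dict:
    """Build the JSON body, keeping only URLs that belong to the given host."""
    same_host = [url for url in urls if urlparse(url).netloc == host]
    return {
        "host": host,
        "key": key,
        # Assumption: the key file is served from the site root as <key>.txt.
        "keyLocation": key_location or f"https://{host}/{key}.txt",
        "urlList": same_host,
    }


def submit(payload: dict, timeout: float = 10.0) -> int:
    """POST the payload and return the HTTP status code."""
    request = urllib.request.Request(
        INDEXNOW_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json; charset=utf-8"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status
```

A typical use would be calling `submit(build_indexnow_payload(...))` from your publishing pipeline whenever a page is created or updated.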

6. Importance of great content and experience

LLMs are increasingly sophisticated—likely surpassing traditional search engines like Google or Bing in their ability to interpret content meaningfully. However, freshness remains critical, as AI models still heavily rely on traditional search engines, particularly Bing, for the latest and most updated information. Thus, ongoing SEO remains crucial.

Investing in outstanding content and seamless user experiences is more critical than ever, as these are key differentiators that AI models increasingly recognize and reward.

7. Optimizing Your Content for AI Systems

- Create structured, scannable content using tables, lists, and clear headings that organize information in AI-friendly formats. Research shows that “properly structured data receives 58% better engagement in AI-powered search.”

- Craft deterministic statements rather than nuanced ones – “Regular exercise reduces stress by 40%” is more effectively processed than “Some forms of physical activity might help certain individuals manage stress levels depending on various factors.”

- Establish clear question-answer patterns throughout your content, particularly in headings (H2/H3) followed by direct, concise responses. Include comprehensive FAQ sections that anticipate user queries.

- Leverage industry-specific terminology and insights that showcase exclusive knowledge – “According to our 10-year market analysis, Barcelona’s coastal property values outperform inland investments by an average of 12% annually.”

- Enrich semantic context by incorporating related terms, synonyms, and specialized vocabulary that expands the conceptual framework AI systems can recognize.

- Incorporate authentic human elements through personal anecdotes, case studies and testimonials that provide unique perspectives AI cannot generate – “Our client increased conversion rates by 32% after implementing these content changes.”

- Develop distinctive viewpoints on common topics rather than repeating widely available information. For example, instead of general real estate advice, analyze how specific micromarket trends affect particular buyer demographics.

- Balance technical precision with readability by including concrete figures, detailed analysis, and specialized information presented in digestible formats.

All of these are increasingly relevant for Google AI Overviews and the upcoming Google AI Mode. Both systems rely heavily on content from search results but present it as generated text in the UI, similar to Perplexity for instance.

8. Monitor and optimize speed

Website speed significantly impacts crawling efficiency. Faster websites allow crawlers to discover and index more content effectively. Aim for a strong server response with a Time to First Byte (TTFB) of 500ms or less to maximize the efficiency of both traditional and AI crawlers.
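If you want a rough number to compare against that 500ms target, the sketch below times a single request with the standard library. It is only an approximation: the measurement includes DNS, TCP, and TLS setup, one sample is noisy, and the function names and the `ttfb-check/0.1` user agent are made up for illustration.

```python
import time
import urllib.request

TTFB_BUDGET_MS = 500.0  # target mentioned in the text above


def measure_ttfb_ms(url: str, user_agent: str = "ttfb-check/0.1", timeout: float = 10.0) -> float:
    """Approximate TTFB: time from sending the request until the first body byte arrives."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    start = time.perf_counter()
    with urllib.request.urlopen(request, timeout=timeout) as response:
        response.read(1)  # wait for the first byte of the body
        elapsed = time.perf_counter() - start
    return elapsed * 1000.0


def within_budget(ttfb_ms: float, budget_ms: float = TTFB_BUDGET_MS) -> bool:
    """True when the measured value meets the budget."""
    return ttfb_ms <= budget_ms
```

In practice I would take several samples per URL and look at the median rather than trusting a single request.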

9. Structured data: It might be important but we are not sure

While structured data remains crucial for traditional SEO—particularly on Google—it doesn’t appear to significantly influence your presence in LLM results today:

- Keep implementing structured data for Google’s sake: While it doesn’t directly benefit LLM optimization now, structured data still enhances visibility on Google search.

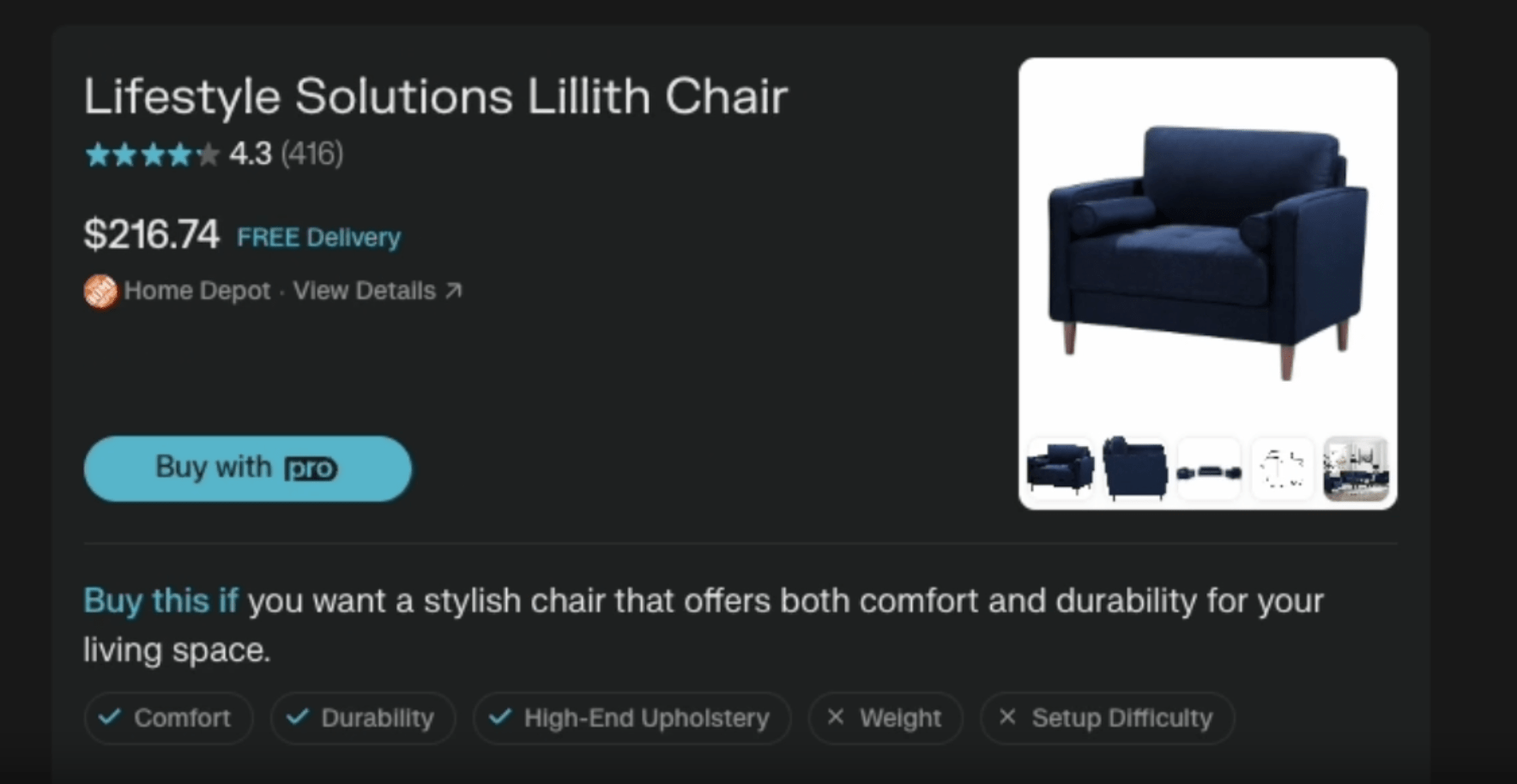

- This could also be relevant not so much for AI results or AI model training, but for ancillary features. Consider results like Perplexity Shop, which could be fed by Product schema. See pic below. Price, delivery, and similar details most likely come from Schema markup.

- Don’t expect structured data to improve your appearance in conversational AI: Currently, structured data does not seem to meaningfully affect how LLMs interact with content.

Remember that LLMs essentially read content, so they don’t care about metadata such as Schema.
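If you do want Product markup in place for shopping-style results like the Perplexity Shop case above, this is a minimal sketch of generating schema.org Product JSON-LD. Whether any AI platform actually reads it is unconfirmed, as discussed. The function name and the sample values are hypothetical, and real product pages usually need more properties than shown here.

```python
import json


def product_jsonld(name: str, url: str, price: float, currency: str, in_stock: bool) -> str:
    """Return a <script> tag containing schema.org Product markup with one Offer."""
    availability = "InStock" if in_stock else "OutOfStock"
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "url": url,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            "availability": f"https://schema.org/{availability}",
        },
    }
    return '<script type="application/ld+json">' + json.dumps(data, indent=2) + "</script>"
```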

10. Monitor bot activity via logs

Regularly monitoring your logs is essential to understanding how bots interact with your website:

- Use tools like Botify, Kibana (part of the Elastic Stack), or other log-analysis software to identify crawling issues, track crawler behavior, and ensure AI bots are efficiently discovering your most important content.

- Track various AI bot types: Monitor activity from different types of AI bots including RAG bots, training bots, and indexing bots to understand their unique patterns.
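If you don’t have a log tool at hand, a few lines of Python already give a useful first view. The sketch below assumes access logs in the common “combined” format and counts hits per AI bot and status code, which also surfaces the 404 problem mentioned earlier. The bot tokens are my assumption of the commonly documented ones, and the function name and sample lines are invented for illustration.

```python
import re
from collections import Counter

# Assumed user-agent tokens; confirm the current list in each vendor's docs.
AI_BOT_TOKENS = ("GPTBot", "OAI-SearchBot", "ChatGPT-User", "ClaudeBot", "PerplexityBot")

# Matches: "METHOD /path PROTOCOL" status bytes "referer" "user agent"
LOG_LINE = re.compile(
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ "[^"]*" "(?P<ua>[^"]*)"'
)


def count_ai_bot_hits(lines) -> Counter:
    """Count hits per (bot token, HTTP status) for lines in combined log format."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if not match:
            continue  # skip lines that are not in the expected format
        user_agent = match.group("ua").lower()
        for token in AI_BOT_TOKENS:
            if token.lower() in user_agent:
                hits[(token, match.group("status"))] += 1
                break
    return hits


# Made-up sample lines with simplified user-agent strings.
SAMPLE_LOG = [
    '203.0.113.5 - - [10/Mar/2025:10:00:00 +0000] "GET /pricing HTTP/1.1" 200 5123 "-" "Mozilla/5.0 (compatible; GPTBot/1.1)"',
    '203.0.113.9 - - [10/Mar/2025:10:00:02 +0000] "GET /old-page HTTP/1.1" 404 312 "-" "Mozilla/5.0 (compatible; ClaudeBot/1.0)"',
    '198.51.100.7 - - [10/Mar/2025:10:00:03 +0000] "GET / HTTP/1.1" 200 9000 "-" "Mozilla/5.0 (Windows NT 10.0) Chrome/120.0"',
]
```

User agents can be spoofed, so treat these counts as indicative unless you also verify the source IP ranges.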

11. Consider creating an llms.txt file

llms.txt is a proposed standard file, written in markdown, that tells LLMs how to better understand your website: what it is about, where the key content lives, and so on. While this file is still just a concept and probably not used by any major player so far, having one can prepare you if the idea becomes more widespread. Personally, I don’t think it will become a standard as it stands today without some modifications, but it can make sense to have something along these lines.
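To make the idea concrete, here is a minimal sketch that renders a file in the shape I understand the proposal to describe: a title, a short blockquote summary, and sections of annotated links. The layout may change as the proposal evolves, and `render_llms_txt` and the sample data are hypothetical.

```python
def render_llms_txt(site_name: str, summary: str, sections: dict) -> str:
    """Render markdown text for an llms.txt file.

    sections maps a heading to a list of (title, url, description) tuples.
    """
    lines = [f"# {site_name}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines.append(f"## {heading}")
        lines.append("")
        for title, url, description in links:
            lines.append(f"- [{title}]({url}): {description}")
        lines.append("")
    return "\n".join(lines).rstrip() + "\n"


# Made-up example data.
EXAMPLE = render_llms_txt(
    "Example Co",
    "B2B SaaS for invoicing.",
    {"Docs": [("Quickstart", "https://example.com/docs/quickstart.md", "Set up in five minutes")]},
)
```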

12. Track AI traffic with proper analytics segmentation

Being able to identify and segment AI-driven traffic is crucial for measuring your AIO efforts. In my work with clients, I’ve found implementing specific regex patterns in Google Analytics to be extremely effective for isolating and analyzing this growing traffic segment.

Here’s the regex pattern I personally use and recommend implementing in your analytics setup:

(?i).*(\.ai$|copilot|gpt|chatgpt|openai|neeva|writesonic|nimble|outrider|perplexity|google.*bard|bard|edgeservices|gemini.*google|claude|anthropic|grok|mistral|palm|falcon|stable.*lm|deepseek|mixtral|inflection|jamba|command.*r|phi|qwen|yi|exaone|bloom|neox).*

This pattern captures referrals from virtually all major AI platforms, allowing you to:

- Measure AI traffic’s contribution to your overall acquisition strategy

- Identify which AI platforms are sending the most visitors

- Analyze user behavior differences between AI-referred visitors versus traditional search
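Before pasting the pattern into your analytics setup, it is worth testing it against a handful of referrers. The sketch below runs the exact pattern from above through Python’s `re` module. Google Analytics may interpret regex slightly differently, so treat this as a sanity check only. The `is_ai_referrer` name and the sample referrers are mine.

```python
import re

# The pattern from the article, unchanged.
AI_REFERRER_PATTERN = re.compile(
    r"(?i).*(\.ai$|copilot|gpt|chatgpt|openai|neeva|writesonic|nimble|outrider|perplexity"
    r"|google.*bard|bard|edgeservices|gemini.*google|claude|anthropic|grok|mistral|palm"
    r"|falcon|stable.*lm|deepseek|mixtral|inflection|jamba|command.*r|phi|qwen|yi|exaone"
    r"|bloom|neox).*"
)


def is_ai_referrer(referrer: str) -> bool:
    """True when the referrer (source or hostname) matches the AI traffic pattern."""
    return AI_REFERRER_PATTERN.search(referrer) is not None


if __name__ == "__main__":
    for referrer in ["chatgpt.com", "perplexity.ai", "gemini.google.com",
                     "www.google.com", "www.philips.com"]:
        print(referrer, is_ai_referrer(referrer))
```

One thing this kind of test surfaces: short tokens such as `phi` or `yi` also match unrelated hostnames (for example, any domain containing “philips”), so review what actually lands in the segment and tighten the pattern if you see false positives.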

I’ve observed that AI traffic patterns are significantly more asymmetric than traditional search—some sites receive substantial traffic while others get virtually none, regardless of their Google rankings. This asymmetry offers unique opportunities, particularly for B2B companies, which I’ve seen receiving up to 5-6% of their total traffic from AI platforms.

13. Optimize for multimodal search capabilities

The AI search landscape is rapidly evolving beyond text-only queries to incorporate voice, image, and mixed-modality searches. From my experience, these multimodal interactions are growing exponentially, especially as devices like smart speakers and AI-enabled mobile cameras become increasingly sophisticated.

To effectively optimize for these capabilities:

- Convert existing content for voice search by restructuring for natural language patterns. Voice queries are typically longer, more conversational, and often posed as full questions rather than keyword fragments. My testing suggests voice-optimized content experiences up to 30% better engagement in AI settings.

- For visual search optimization, ensure all images have descriptive filenames, comprehensive alt text, and appropriate EXIF data. For product or property imagery, I’ve found that including multiple angles and clear, high-quality shots dramatically improves recognition and retrieval by visual AI systems.
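The alt text part of this is easy to audit automatically. Here is a minimal sketch with Python’s standard `html.parser` that lists images with missing or empty alt text. `images_missing_alt` and the sample markup are hypothetical, and it does not look at filenames or EXIF data.

```python
from html.parser import HTMLParser


class _ImageAudit(HTMLParser):
    """Records the src of every <img> that has no usable alt text."""

    def __init__(self):
        super().__init__()
        self.missing_alt = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        alt_text = (attributes.get("alt") or "").strip()
        if not alt_text:
            self.missing_alt.append(attributes.get("src") or "")


def images_missing_alt(html: str) -> list[str]:
    """Return the src values of images whose alt attribute is missing or empty."""
    audit = _ImageAudit()
    audit.feed(html)
    audit.close()
    return audit.missing_alt


# Made-up markup: the first image has no alt text, the second one does.
SAMPLE_HTML = (
    '<img src="/img/IMG_0042.jpg">'
    '<img src="/img/barcelona-flat-living-room.jpg" alt="Sea-view living room" />'
)
```

Purely decorative images legitimately use an empty alt attribute, so review the output rather than filling in everything it flags.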

When creating new content, think “beyond the keyword” by structuring it to answer complete questions that users might speak or type. This approach not only improves AI visibility but also enhances traditional search performance through comprehensive topical coverage.

14. Build strategic presence on AI reference sources

Understanding where AI models source their information has become a critical component of effective optimization. Through my work tracking citation patterns across multiple industries, I’ve identified clear priority platforms that disproportionately influence AI outputs.

Wikipedia remains the single most-cited source across all major AI systems, making a properly managed company or product Wikipedia page increasingly valuable. Similarly, platforms like GitHub (for technical content), Reddit (for community discussions), and established news outlets with strong domain authority consistently appear as citation sources.

Focus particularly on Bing optimization, as Microsoft’s search engine currently powers several major AI systems including ChatGPT, Perplexity, and Microsoft Copilot. Interestingly, I’ve worked with clients who rank modestly on Google but whose content is frequently cited by AI systems due to strong positioning on Bing.

Conclusion

We’re still in the early stages of understanding precisely how LLMs discover and prioritize content. However, one thing remains clear: while the fundamental principles of AI Optimization (AIO) and traditional SEO share similarities, AI optimization requires specific strategic approaches that extend beyond conventional SEO practices.

So, continue investing in solid SEO practices, create excellent content, and ensure a fantastic user experience. Additionally, you can now track your presence in AI-driven search and conversations similarly to traditional SEO, thanks to emerging AI rank tracking tools.

What we do know for sure:

- Allow clear and comprehensive crawling for all AI bots

- Facilitate access through sitemaps and unified crawling rules

- Understand and adapt to unique AI crawling characteristics

- Prefer server-side rendered content over client-side rendering

- Actively manage your content indexation strategies

- Optimize content format for AI consumption with structured, scannable content

- Monitor crawler behavior closely through log analysis

- Implement analytics segmentation to track and measure AI traffic

- Prioritize site speed for efficient crawling

- Adapt content for multimodal search capabilities (voice, image, etc.)

- Build strategic presence on key AI reference sources like Wikipedia and platforms that power AI systems

The asymmetric nature of AI traffic presents unique opportunities, particularly for B2B companies. As this landscape evolves, those who adapt their strategies to accommodate both traditional search and AI-driven discovery will gain significant competitive advantages.

Being visible in the world of AI optimization is about foundational clarity and accessibility, combined with proactive indexing strategies and deliberate content structuring. These basics form a robust foundation as we explore this evolving landscape.

If you’re interested in having a chat about AIO, feel free to drop me a message or schedule a coffee intro.