Deep Model Explainability at Uber with Integrated Gradients

At Uber, machine learning powers everything from dynamic pricing and time-of-arrival predictions to fraud detection and customer support automation. Behind these systems is Michelangelo, Uber’s ML platform team, which builds the company’s centralized platform for developing, deploying, and monitoring machine learning models at scale.

As deep learning adoption at Uber has grown, so too has the need for trust and transparency in our models. Deep models are incredibly powerful, but because they’re inherently black boxes, they’re hard to understand and debug. For engineers, scientists, operations specialists, and other stakeholders, this lack of interpretability can be a serious blocker. They may want to know: “Why did the model make this decision?” “What feature was most influential here?” “Can we trust the output for this edge case?”

In response to these needs, we at Michelangelo invested in building Integrated Gradients (IG) explainability directly into our platform, making it possible to compute high-fidelity, interpretable feature attributions for deep learning models at Uber scale.
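For readers new to the technique: Integrated Gradients attributes a model output F(x) to each input feature i by integrating the gradient along a straight path from a baseline x′ to the input x, IG_i(x) = (x_i − x′_i) · ∫₀¹ ∂F(x′ + α(x − x′))/∂x_i dα. A useful property is completeness: the attributions sum to F(x) − F(x′). The sketch below is a minimal, framework-free illustration, not Michelangelo’s implementation. It assumes a toy logistic-regression model with a hand-derived gradient, an all-zeros baseline, and a midpoint Riemann sum controlled by a hypothetical `steps` parameter; a real deep model would get its gradients from TensorFlow or PyTorch autodiff instead.

```python
import math
from typing import Callable, List

Vector = List[float]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def logistic_model(x: Vector, w: Vector, b: float) -> float:
    """Toy stand-in for a deep model: F(x) = sigmoid(w . x + b)."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def logistic_grad(x: Vector, w: Vector, b: float) -> Vector:
    """Analytic gradient dF/dx_i = p * (1 - p) * w_i (autodiff would supply this)."""
    p = logistic_model(x, w, b)
    return [p * (1.0 - p) * wi for wi in w]


def integrated_gradients(
    x: Vector,
    baseline: Vector,
    grad_fn: Callable[[Vector], Vector],
    steps: int = 50,  # hypothetical knob: number of interpolation points
) -> Vector:
    """Approximate IG with a midpoint Riemann sum along the straight baseline-to-input path."""
    total = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps  # midpoint of the k-th sub-interval of [0, 1]
        point = [bi + alpha * (xi - bi) for xi, bi in zip(x, baseline)]
        for i, g in enumerate(grad_fn(point)):
            total[i] += g
    # Average gradient along the path, scaled by the input-minus-baseline difference.
    return [(xi - bi) * t / steps for xi, bi, t in zip(x, baseline, total)]


# Illustrative inputs only; not taken from any Uber model.
w, b = [2.0, -1.0, 0.5], 0.1
x, baseline = [1.0, 2.0, 3.0], [0.0, 0.0, 0.0]


def grad_fn(point: Vector) -> Vector:
    return logistic_grad(point, w, b)


attributions = integrated_gradients(x, baseline, grad_fn, steps=50)
gap = logistic_model(x, w, b) - logistic_model(baseline, w, b)
print("attributions:", attributions)
print("sum of attributions:", sum(attributions), "F(x) - F(baseline):", gap)
```

Comparing the printed sum against F(x) − F(x′) is a cheap sanity check on the number of interpolation steps: if completeness is badly violated, the Riemann approximation is too coarse for that model and input.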

This blog post shares how we tackled the engineering and design challenges of implementing IG across multiple frameworks (TensorFlow™, PyTorch™), supporting complex pipelines, integrating with Michelangelo, and enabling broad adoption across teams. We’ll also highlight real-world motivations and use cases, and where we’re heading next on our explainability journey.

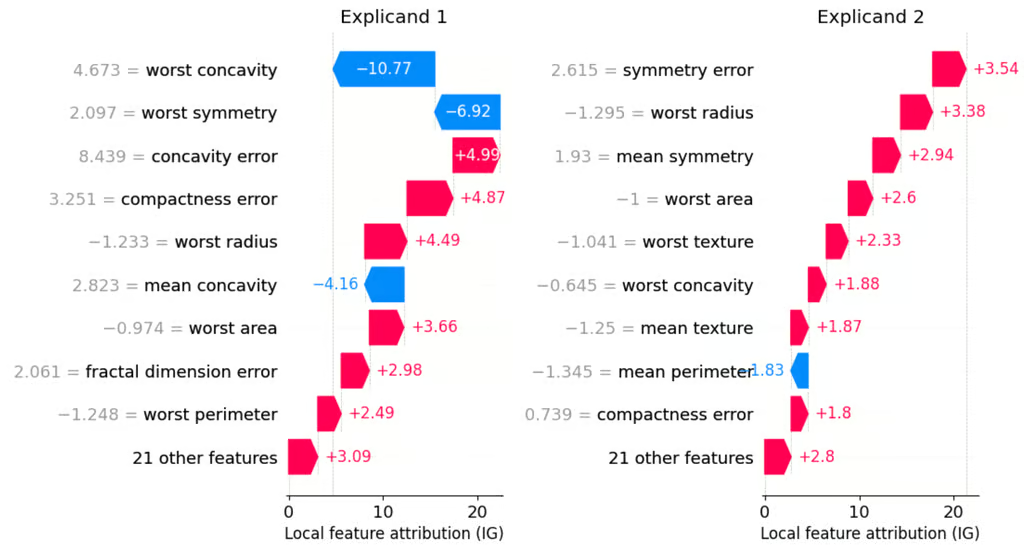

Figure 1: Examples of integrated gradients local feature attributions, plotted u...