Transforming Unstructured Documents to Standardized Formats with GPT: Building a Resume Parser

Among its numerous applications, GPT has become a game-changer in the processing and standardization of unstructured documents.

In this blog post, we'll explore how you can convert unstructured documents, specifically resumes, into a standardized format using GPT.

Resumes come in various shapes and sizes, with no two being exactly alike. This presents a unique challenge for recruiters who need to sift through hundreds or even thousands of resumes to identify suitable candidates.

As you can see, a quick Google search returns resumes in various designs and formats.

This is a well-structured resume, but extracting the text from the PDF file yields unstructured text and loses the original formatting.

The resume above uses a month/year format for dates, but other resumes may use day/month/year or only the year. These variations make resume parsing challenging because it is difficult to account for every possible case.
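To make the date problem concrete, here is a minimal sketch of what a rule-based approach might look like. The list of formats and the `normalize_date` helper are my own illustration (they are not part of the parser built in this post), and the list is nowhere near complete.

```python
from datetime import datetime
from typing import Optional

# Hypothetical list of formats a rule-based parser might try, in order.
CANDIDATE_FORMATS = ["%m/%Y", "%d/%m/%Y", "%b %Y", "%B %Y", "%Y"]


def normalize_date(raw: str) -> Optional[str]:
    """Return YYYY-MM (or YYYY for year-only input), or None if nothing matches."""
    cleaned = raw.strip()
    for fmt in CANDIDATE_FORMATS:
        try:
            parsed = datetime.strptime(cleaned, fmt)
        except ValueError:
            # This format did not match; try the next one.
            continue
        return parsed.strftime("%Y") if fmt == "%Y" else parsed.strftime("%Y-%m")
    return None


for sample in ["06/2019", "15/06/2019", "June 2019", "2019", "Fall 20xx"]:
    print(sample, "->", normalize_date(sample))
```

Every new variation needs another entry in the list, and inputs like "Fall 20xx" fall through all of them and come back as `None`.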

First, we need to extract the text from the PDF. We can use the PyPDF2 library for this.

    import io

    import PyPDF2


    def extract_text_from_binary(file):
        # Wrap the raw bytes so PyPDF2 can read them like a file.
        pdf_data = io.BytesIO(file)
        reader = PyPDF2.PdfReader(pdf_data)
        text = ""
        # Concatenate the text of every page.
        for page in reader.pages:
            text += page.extract_text()
        return text

To call the OpenAI API, we use LangChain. LangChain is a community-driven framework for developing applications powered by language models. It streamlines development by taking care of tedious tasks under the hood.

    from langchain import LLMChain, PromptTemplate
    from langchain.llms import OpenAIChat
    from langchain.memory import ConversationBufferMemory

    template = """Format the provided resume to this YAML template:
    ---
    name: ''
    phoneNumbers:
    - ''
    websites:
    - ''
    emails:
    - ''
    dateOfBirth: ''
    addresses:
    - street: ''
      city: ''
      state: ''
      zip: ''
      country: ''
    summary: ''
    education:
    - school: ''
      degree: ''
      fieldOfStudy: ''
      startDate: ''
      endDate: ''
    workExperience:
    - company: ''
      position: ''
      startDate: ''
      endDate: ''
    skills:
    - name: ''
    certifications:
    - name: ''

    {chat_history}
    {human_input}"""

    prompt = PromptTemplate(
        input_variables=["chat_history", "human_input"],
        template=template,
    )


    def format_resume(resume):
        # Wrapper so the trailing `return` is valid; the function name is illustrative.
        memory = ConversationBufferMemory(memory_key="chat_history")
        llm_chain = LLMChain(
            llm=OpenAIChat(model="gpt-3.5-turbo"),
            prompt=prompt,
            verbose=True,
            memory=memory,
        )
        res = llm_chain.predict(human_input=resume)
        return res

Here we ask GPT to structure the data as YAML. I chose YAML because I find it easy to read. Also, special characters such as {} in the prompt have been reported to cause problems, which a brace-free YAML template sidesteps.
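One likely source of the brace problem: as far as I know, LangChain's `PromptTemplate` fills placeholders with Python's `str.format`-style syntax by default, so a literal `{` or `}` in the template is read as a placeholder unless it is doubled. The sketch below uses plain `str.format` rather than LangChain itself, and the tiny templates and the `render` helper are made up for illustration.

```python
def render(template: str, resume_text: str) -> str:
    """Fill the {human_input} placeholder using str.format semantics."""
    return template.format(human_input=resume_text)


# A JSON-style template: its literal braces collide with the placeholder syntax.
json_template = '{"name": ""}\n{human_input}'
# The same template with the literal braces doubled so they survive formatting.
escaped_json_template = '{{"name": ""}}\n{human_input}'
# A YAML-style template has no literal braces to escape.
yaml_template = "name: ''\n{human_input}"

try:
    render(json_template, "resume text")
except KeyError as err:
    print("unescaped JSON braces fail:", repr(err))

print(render(escaped_json_template, "resume text"))
print(render(yaml_template, "resume text"))
```

With a JSON template you have to remember to double every brace; with YAML the only braces in the prompt are the placeholders themselves.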

If you want GPT to follow the provided structure as closely as possible, set the temperature to 0.

I found that gpt-3.5-turbo performs very well and offers the best value for the cost.

And that's it! Very simple.

    ---
    name: 'IM A . SAMPLE X'
    phoneNumbers:
    - '308-308-3083'
    websites:
    - ''
    emails:
    - 'imasample10@xxxx.net'
    dateOfBirth: ''
    addresses:
    - street: '3083 North South Street, Apt. A -1'
      city: 'Grand Island'
      state: 'Nebraska'
      zip: '68803'
      country: ''
    summary: 'Seeking Position in Human/Social Service Administration or related field utilizing strong academic background, experience and excellent interpersonal skills'
    education:
    - school: 'Bellevue University'
      degree: 'BS in Human & Social Service Administration'
      fieldOfStudy: ''
      startDate: 'Jan 20xx'
      endDate: ''
    - school: 'Central Community College - Hastings Campus'
      degree: 'AAS in Human Services'
      fieldOfStudy: ''
      startDate: 'Dec 19xx'
      endDate: ''
    - school: ''
      degree: '75-Hr Basic Nursing Assistant Program'
      fieldOfStudy: ''
      startDate: 'Jan 20xx'
      endDate: ''
    workExperience:
    - company: 'Greater NE Goodwill Industries'
      position: 'Day Rehabilitation Specialist'
      startDate: 'June 20xx'
      endDate: 'Present'
    - company: 'Tiffany Square Care Center'
      position: 'Assistant Receptionist'
      startDate: 'Jan 20xx'
      endDate: 'June 20xx'
    - company: 'Central NE Goodwill Industries'
      position: 'Employment Trainer'
      startDate: 'Aug 19xx'
      endDate: 'May 20xx'
    - company: 'Crisis Center Inc & Family Violence Coalition'
      position: 'Criminal Justice/Shelter Advocate'
      startDate: 'July 20xx'
      endDate: 'Oct 20xx'
    - company: 'Tiffany Square Care Center'
      position: 'Social Services Assistant'
      startDate: 'Jan 20xx'
      endDate: 'Sept 20xx'
    skills:
    - name: ''
    certifications:
    - name: ''
    communityService:
    - organization: 'Women\'s Health Services & Resource Center'
      role: 'Volunteer'
      startDate: 'Fall 20xx'
      endDate: 'Present'
      responsibilities:
      - 'Assisted professional staff and participated in one-on-one discussions with women seeking advice on health-related issues'
      - 'Observed group training sessions to develop the skills needed to facilitate groups in the future'

On this sample, GPT parsed the resume about as accurately as a careful human reader would, and it handled the work history correctly. It also kept placeholder dates such as '20xx' intact as years in the date fields, which can be challenging for conventional parsers.

It's worth noting that GPT's flexibility allowed it to create a new field for community service, even though it wasn't part of the provided YAML template.
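Since the model may add fields like this, downstream code probably should not assume the output matches the template exactly. Here is a minimal sketch of one way to separate template fields from extras. It assumes the YAML has already been loaded into a dict (for example with PyYAML's `yaml.safe_load`, which this post does not otherwise use); `split_fields` and the sample data are hypothetical.

```python
from typing import Any, Dict, Set, Tuple

# Top-level keys from the YAML template used in the prompt.
TEMPLATE_KEYS = {
    "name", "phoneNumbers", "websites", "emails", "dateOfBirth",
    "addresses", "summary", "education", "workExperience",
    "skills", "certifications",
}


def split_fields(
    parsed: Dict[str, Any],
) -> Tuple[Dict[str, Any], Dict[str, Any], Set[str]]:
    """Separate template fields from extra ones and report missing template keys."""
    known = {k: v for k, v in parsed.items() if k in TEMPLATE_KEYS}
    extra = {k: v for k, v in parsed.items() if k not in TEMPLATE_KEYS}
    missing = TEMPLATE_KEYS - parsed.keys()
    return known, extra, missing


# Hypothetical, heavily shortened stand-in for the parsed model output.
parsed_output = {
    "name": "IM A . SAMPLE X",
    "communityService": [{"role": "Volunteer"}],
}
known, extra, missing = split_fields(parsed_output)
print("extra fields:", sorted(extra))
print("missing fields:", sorted(missing))
```

Extra fields can then be stored separately or reviewed, rather than silently dropped or allowed to break a strict schema.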

Achieving this level of flexibility is usually challenging with traditional programming methods. Parsing unstructured documents like resumes can be very costly and time-consuming due to the vast number of patterns that need to be accounted for.

GPT demonstrated that it can be incredibly useful for transforming unstructured data into a structured format, thanks to its low cost, high accuracy, and scalability. I am interested in exploring GPT for data conversion further.

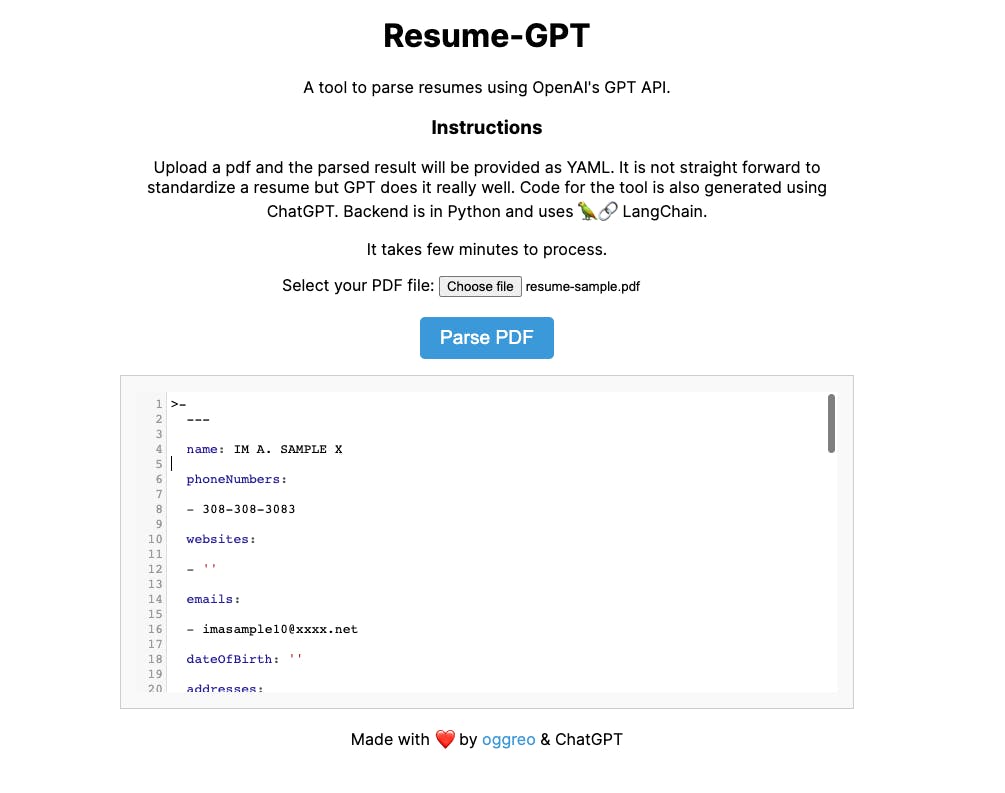

I put together a simple demo! I'm using FastAPI for the backend and deployed it on Render.

You can upload any PDF and have GPT parse the resume. You will need to wait a few minutes for the PDF to be processed.

Hope you enjoyed it! I will be blogging more about using Language Models for applications so watch this space!