Writing clean JavaScript tests with the BASIC principles

Hold your BASIC shield while the JavaScript test bastards are coming

Language review: Jonathan Atia

✨ Visit my workshop: Nodeconf is the biggest European Node.js conference (October 18th-19th). This year I’ll hold a comprehensive five-hour workshop there, “Node.js testing: beyond the basics”.

Why do many teams abandon testing

Production code, the main code where the features live, might not be perfect. That’s a risk or a debt that teams manage in multiple ways. For testing code, the risk is much more dramatic — It can be abandoned altogether. Vanish, gone, not doing anything anymore. Hundreds of coding hours, meetings, and sweat can just get lost.

Have you encountered this? Here is a typical story. 7 pm, late, it’s cold and dark outside. Sarah is a relatively new team member, and she is trying to deploy. All seems excellent, except that a few tests, written by someone else, are failing. Sarah opens the test file to look through those tests, and her eyes can’t believe what they see: each test consists of 50 lines of code (with Prettier it became 100 LOC), including loops, if/else, and callbacks. That’s annoying because she thought the challenging part, coding the features, was behind her. Wait, now she also notices that the test is calling other external classes that inherit from more classes and share state with other tests. It’s hard to follow the code traces, her eyes blink tiredly, it’s 8 pm already, Sarah is angry and hungry, and did I mention it’s raining outside? Unlike the tests, the new code seems to work fine. Yes, she also tested with Postman and her browser. In desperation, she decides to use the magic spell that makes all sorrows end. She adds one sweet little word: test.skip. All tests pass now. She deploys!

Guess what happened?

Nothing, it works. Production looks just fine; the tests were probably outdated or wrong.

Wait, what? What is the takeaway here? You might have believed that the worst thing that can happen to a team is a production bug. No, it’s tests being skipped and losing credibility. As a consultant who works with ~20 different teams yearly on various Node.js challenges, I have seen this happen frequently. It’s not only me noticing this: there is a known phenomenon called the eroding testing pyramid, which describes tests vanishing from the codebase.

I hear you thinking, this won’t happen to us: we are disciplined, we have a daily scrum meeting, we measure the coverage! None of these will help. Tests, just like an orgasm, are very easy to fake (did I just write this?). This illusory, faked testing comes in different shapes. Developers might actually write tests but not trust them. Then, silently, they check the code using the browser or Postman to gain real confidence. This manual testing defeats the whole reason for testing (speed, automation). Others write tests after the fact, once the code is written and just before production. This workflow implies that there were no tests to serve as a safety net against regressions during coding (P.S. it’s not mandatory to write tests before the code; writing them during coding is also fine, just not at the end). With time, the tests become almost useless, a tax that poor developers are asked to pay. Eventually testing drops off the agenda, and the tests exist only physically, like zombies that live among us.

What should my team do differently?

Why is this terrible phenomenon happening? Because developers are intelligent, spoiled, and efficient creatures that seek the shortest path to the goal. We’re all kept very busy with the main code, the production code (you don’t go to the beach at 2 PM, right?), and have no headroom for additional systems, let alone a testing system. If by chance there is some spare mental capacity for more code that promises to boost our workflow, that code must be tiny and dead-simple. Let me put it loud and clear: testing can shine only if developers are convinced and happily adopt the habit. This can happen only if the tests are not a burden on their busy minds but rather a dead-simple technique that brings significant value. The 1st principle of testing is: plan for outstanding ROI, extracting significant bang for the buck. Only this will make teams happy to maintain their tests.

Big words, how can one actually achieve this? By following three principles: First, strive for the minimal amount of testing needed to gain confidence (said also by Kent Beck, ‘father’ of TDD). Second, our tests must resemble the production conditions to provide compelling confidence (see the shift-right and testing-in-production concepts). These two principles will get discussed in a later blog post. The third principle is about writing extremely simple tests — This is the subject of this post.

By ‘simple test’, I mean truly no-brainer, dead-simple code. Totally flat, complexity level of one, stand-alone with almost zero dependencies, containing between 7–10 statements, and written in human-readable language. If you follow this, there is not even a chance that Sarah will decide to skip this test: it’s just too easy. In reality, though, many forces will push developers to deviate from the golden route of simplicity. Tests will include loops and logic because no other way was found to test some behavior. Tests will become long, although the writer really tried to avoid this, because there are just too many things to prepare for the test. Other challenges will encourage writing imperative and not-so-simple code. When this happens, stop right there. High test complexity happens to those who are willing to accept it. The bastards will come to grind you down, but you gotta fight back and kill the complexity early. To be prepared for this battle, I’ve gathered a couple of principles and techniques under the acronym BASIC. Let’s go over these principles and then look at a ‘nasty’ real-world test packed with unnecessary complexity. Then, let’s apply the BASIC principles to the test and see how it shrinks and turns into a beautiful short test.

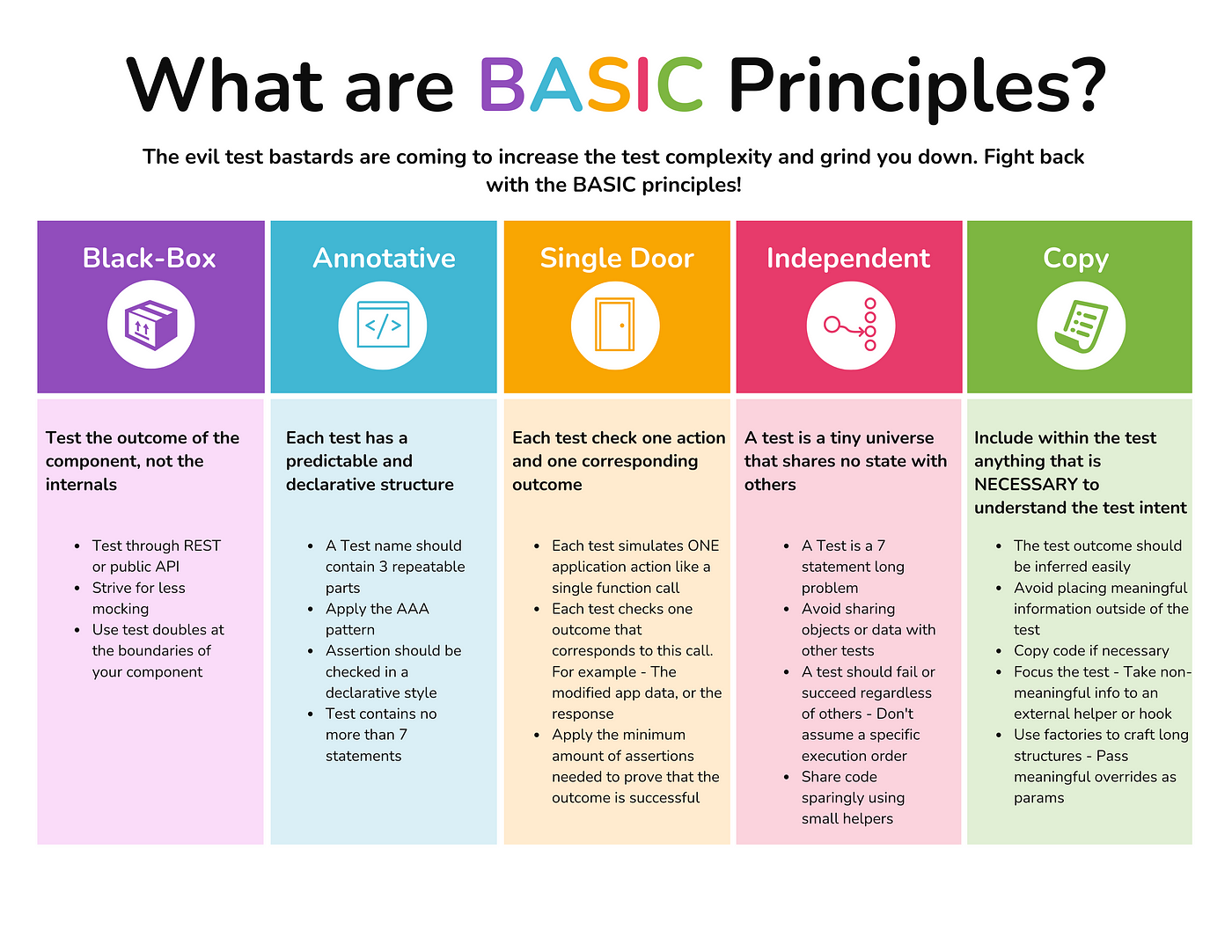

The BASIC acronym

Let’s go letter by letter, each represents a principle to consider while testing. Afterward, we will apply these BASIC principles over a long and cumbersome test and turn it into a beautiful short one.

Black-box: We care only about what the box, our component or object under test, produces and makes visible to the caller, whether it’s an API (integration) or code objects (unit). If a bug cannot be observed from outside, it won’t matter to our users, so do we even care? Avoid testing HOW things work, focus on WHAT they do. It does not matter which functions were called, only the outcomes: publicly noticeable things. For example, the response of the unit under test, the observed new state, significant calls to external code, and others. By focusing on the outer layer, the amount of detail is dramatically reduced and the test writer is pushed to prioritize the important things that might affect the UX. Inherently, the test’s length and complexity are lowered.

Annotative: A test must have a predictable structure with declarative language. It should feel more like annotations than code. Skimming through production code is a journey with a clear start and an unknown end: the stack trace might unfold indefinitely and lead the focused reader through many corners of the app. During this time, she might need to stretch her brain to get the intent. Testing should not feel this way, but rather like a declarative syntax, like … HTML. When reading HTML docs, our mind effortlessly hops through the various tags, almost expecting what is to come next. Rarely will a developer avoid or fail to read an HTML snippet due to complexity.

How can we make the testing experience more declarative and structured? Use 6 repeatable parts in every test with the AAA pattern (see below), declarative assertions, and up to 7 statements. Read JavaScript Testing Best Practices for other similar patterns.

6 repeatable parts in every test
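The figure listing the six parts is not reproduced here. One plausible reading, and it is only an assumption, is that three parts sit in the test title (unit under test, scenario, expectation) and three in the body (Arrange, Act, Assert). A minimal sketch of that shape, using Node's built-in assert module and a one-line stand-in for the runner's `test` function; `calculateFee` and its fee rule are hypothetical:

```javascript
const { strictEqual } = require('node:assert/strict');

// Minimal stand-in so the sketch runs without Jest or Mocha.
const test = (title, body) => body();

// Hypothetical unit under test: a 2% transfer fee, capped at 10.
function calculateFee(amount) {
  return Math.min(amount * 0.02, 10);
}

// Parts 1-3 sit in the title: unit under test, scenario, expectation.
test('Fee calculation: when 2% exceeds the cap, then the cap is charged', () => {
  // Part 4, Arrange
  const amountWhoseFeeExceedsTheCap = 1000;

  // Part 5, Act
  const fee = calculateFee(amountWhoseFeeExceedsTheCap);

  // Part 6, Assert
  strictEqual(fee, 10);
});
```

With a real runner, the stand-in line goes away and the rest stays the same; the point is that every test in the file can be skimmed in the same order.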

Single Door: Every test should focus on one thing. Usually it should trigger one action in the app and check one outcome that happened as a response to this action. A friend of mine calls this ‘Reaction’. An action might be a function call, a button click, a REST route invocation, a new message in a queue, a scheduled job start time, or any other system event. After performing this action, up to three outcomes might get triggered: a response, a change in state (in-memory or DB), or a call to a 3rd party service (credit: Roy Osherove’s entry and exit points). In integration tests there might be six types of outcomes; see my checklist for more details. These potential outcomes are tested using assertions. What is the benefit? Narrowing each test to a single action-outcome pair encourages shorter tests and better root-cause analysis: in case of failure, it’s clear what is not working. This is a good rule of thumb to follow, but not too rigidly. It’s OK to test 2 outcomes in a single test, maybe 3, absolutely not 10. Focus on the goal, not the number: simple and short tests.

Independent: A test is a 7–10 line long problem, a tiny universe that shares nothing with others. When kept independent, short, and declarative, it’s a delight for the occasional reader. Beware though: just by coupling a test to some global object, it is suddenly exposed to side effects from many other tests and its complexity increases dramatically. Coupling can also originate from shared DB records, reliance on the execution order, UI state, uncleaned mocks, and others. Avoid all of these. Instead, let each test factor its own dependencies, potentially using helpers, including its own DB state, to keep it a self-explanatory universe.

Copy only what’s necessary: Include all the necessary details that the reader needs to understand within the test. Nothing more, only what’s necessary. Too much duplication will result in unmaintainable tests; on the other hand, extracting important details outside will force the reader to seek pieces of information across many files. As an example, consider a test that should factor 100 lines of input JSON. Pasting this in every test is tedious. Extracting it outside to transferFactory.getJSON() will leave the test vague: without data, it’s hard to correlate the test result with the cause (“why is it supposed to return 400 status?”). The classic book xUnit Test Patterns named this pattern ‘the mystery guest’: something unseen affected our test results, and we don’t know what exactly.

We can do better by extracting repeatable long parts outside AND mentioning explicitly which specific details matter to the test. Going with the example above, the test can pass parameters that highlight what is important: transferFactory.getJSON({sender: undefined}). In this example, the reader should immediately infer that the empty sender field is the reason why the test should expect a validation error or a similar outcome.
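A minimal sketch of such a factory. Only the name `transferFactory.getJSON` comes from the text; the default fields and values are made up for illustration:

```javascript
// Hypothetical factory: the long, boring defaults live here, out of the test's way.
const transferFactory = {
  getJSON(overrides = {}) {
    const defaults = {
      sender: { name: 'Daniel', credit: 100 },
      receiver: { name: 'Rose' },
      transferAmount: 50,
      currency: 'dollar',
    };
    // Overrides win, even when a value is explicitly set to undefined.
    return { ...defaults, ...overrides };
  },
};

// The test states only the detail that explains the expected outcome.
const requestWithoutSender = transferFactory.getJSON({ sender: undefined });

console.log(requestWithoutSender.sender); // undefined
console.log(requestWithoutSender.transferAmount); // 50
```

The shallow spread keeps the sketch short. A factory that needs to override a single nested field (say, only the sender's credit) would need a deep merge instead.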

Real-world example: A nasty test turns into a beautiful one

Theory aside, let’s transform a ‘nasty’, high-complexity test into a simple one by applying the five BASIC principles.

Code under test

This is the app that will be tested: a service that performs money remittance. The code will validate the money transfer request, apply some logic, ask a banking HTTP service to remit the money and finally persist to DB. This is intentionally not perfectly ‘testable’ code, to say the least—You probably want to learn to test any code, not only ‘perfect’ code.

A nasty test

Let’s test it! This test above checks that when a sender tries to transfer an amount that is bigger than his credit, the declined transfer won’t appear in the user history. In other words, it should not persist. It’s a long and cumbersome test, an anti-pattern, but every line here is allegedly sensible and tackles possible risks. Read it, truly understand the writer’s intent — Every line was included thoughtfully by a great developer who is keen on the details. Only then think about how to improve it. The X symbol means that there is something in that line that could be improved.

Transforming into a beautiful test with the BASIC principles

Let’s now go line by line and remove or fix each one by applying the BASIC principles.

Line number 2: Ambiguity

❌ Bad (line 2):

✅ Better:

Related pattern: Annotative

Explanation: What’s wrong here? A test should communicate its intent clearly in a structured and known format, as explained in the ‘Annotative’ pattern (follow the 6 repeatable parts pattern).

Line number 3: Mystery

❌ Bad (line 3):

✅ Better:

Related pattern: Copy only what’s necessary

Explanation: What’s wrong here? The test is about a money transfer without enough credit, yet this line abstracts away the input JSON, so there is no clue about what it looks like. The reader has to guess that this JSON has some issue, but which exactly? Remember, a test is a short and punchy 7-statement story that the reader can understand clearly without leaving the test. A known storytelling technique, foreshadowing, suggests that all the relevant details should come early: it “appears at the beginning of a story, or a chapter, and it helps the reader develop expectations about the upcoming events.”

Always include within the test the details that matter for the test’s success or failure. Leave the rest outside. Practically, dynamic factories can greatly help here by factoring default input while accepting the specific overrides that matter to this test.


Line number 4: Coupling

❌ Bad (line 4):

✅ Better: Create a dedicated service for every test, configure your own instance, not a global one

Related pattern: Independent

Explanation: What’s wrong here? This line raises the test complexity dramatically. Once all the tests share a global object and can modify its properties, test failures might originate from any other test in the file. Instead of a 7-line problem, you just got a spaghetti of 50 tests to reason about. Maybe some test above set the system state to something that we didn’t plan for? Now, instead of investigating a failure within one short test, one must skim through the other test cases in the same file and identify where things went wrong.
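As a rough illustration of "configure your own instance", here is a sketch in which every test constructs its own service. The `TransferService` class, its `creditPolicy` option, and the request shape are hypothetical stand-ins, not the actual code under test:

```javascript
const { strictEqual } = require('node:assert/strict');

// Minimal stand-in so the sketch runs without Jest or Mocha.
const test = (title, body) => body();

// Hypothetical, heavily simplified stand-in for the service under test.
class TransferService {
  constructor(options = {}) {
    this.options = { creditPolicy: 'allow', ...options };
  }

  transfer({ sender, transferAmount }) {
    const hasEnoughCredit = sender.credit >= transferAmount;
    if (!hasEnoughCredit && this.options.creditPolicy === 'zero') {
      return { status: 'declined' };
    }
    return { status: 'approved' };
  }
}

test('When credit policy is zero, then an overdraft is declined', () => {
  // This instance belongs to this test only; no other test can reconfigure it.
  const transferService = new TransferService({ creditPolicy: 'zero' });

  const response = transferService.transfer({
    sender: { credit: 50 },
    transferAmount: 100,
  });

  strictEqual(response.status, 'declined');
});

test('When using the default policy, then an overdraft is approved', () => {
  const transferService = new TransferService();

  const response = transferService.transfer({
    sender: { credit: 50 },
    transferAmount: 100,
  });

  strictEqual(response.status, 'approved');
});
```

Because neither test touches shared state, the two can run in any order, or alone, with the same result.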

Line number 5: Magic numbers

❌ Bad (line 5):

✅ Better:

or

Related pattern: Copy only what’s necessary

Explanation: What’s wrong here? A magic number. This line with the arbitrary number ‘110’ tells the reader nothing; it’s just a number. Is it high? Low? What does it represent? The writer meant to pick a value that is higher than the user’s credit, a critical piece of information that strongly relates to the test result, but failed to communicate this. There are multiple techniques that could have told this story better: a constant with a descriptive name (e.g. amountMoreThanTheUserCredit), or putting the transfer amount next to the insufficient credit.
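Both techniques might look roughly like this. The `transfer` function and the request shape are hypothetical, kept to one rule so the sketch is runnable; only the constant name `amountMoreThanTheUserCredit` is taken from the text:

```javascript
const { strictEqual } = require('node:assert/strict');

// Hypothetical rule under test: amounts above the sender's credit are declined.
function transfer({ sender, transferAmount }) {
  const status = transferAmount > sender.credit ? 'declined' : 'approved';
  return { status };
}

// Option 1: a constant whose name explains why this value was chosen.
const amountMoreThanTheUserCredit = 110;
const responseToNamedAmount = transfer({
  sender: { credit: 50 },
  transferAmount: amountMoreThanTheUserCredit,
});
strictEqual(responseToNamedAmount.status, 'declined');

// Option 2: keep the amount right next to the credit it exceeds.
const responseToAdjacentValues = transfer({
  sender: { credit: 50 },
  transferAmount: 100,
});
strictEqual(responseToAdjacentValues.status, 'declined');
```

In both variants the reader can see, without leaving the test, that the amount is higher than the credit.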

Line number 6: White-box testing

❌ Bad (line 6):

✅ Better:

Related pattern: Black-box

Explanation: What’s wrong here? The writer’s intentions are great: she wants to check that the declined transfer is not accidentally saved to the DB, which is what the test is about. The chosen technique is questionable, though: using a mock to listen to the data-access method call and verify that it was not called. Whenever we use test doubles (mocking), our tests face two weaknesses. First, false-positive alarms: anytime we legitimately modify the internal call to the ‘save’ method, the tests will fail although everything still works. Even worse, false negatives: if the data is saved to the DB through a different path or method, the test will still pass. Instead of checking the natural user flow through the public API, our test is bound to the internals and increases the maintenance noise. Here is a golden testing rule: whenever possible, test the user flow as it happens in production. This is what black-box testing is about.
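The false-negative scenario can be reproduced in a few lines. In this sketch, everything is hypothetical: an in-memory repository with two write paths, a service with a deliberate bug, and a hand-rolled spy standing in for a mocking library such as Sinon:

```javascript
const { strictEqual } = require('node:assert/strict');

// Hypothetical in-memory repository with two write paths.
class TransferRepository {
  constructor() {
    this.rows = [];
  }
  save(transfer) {
    this.rows.push(transfer);
  }
  saveMany(transfers) {
    this.rows.push(...transfers);
  }
  findBySender(senderName) {
    return this.rows.filter((row) => row.sender === senderName);
  }
}

// Hypothetical service with a deliberate bug: declined transfers are
// still persisted, but through saveMany() rather than save().
class TransferService {
  constructor(repository) {
    this.repository = repository;
  }
  transfer(request) {
    const status = request.amount > request.credit ? 'declined' : 'approved';
    const transfer = { ...request, status };
    if (status === 'approved') {
      this.repository.save(transfer);
    } else {
      this.repository.saveMany([transfer]); // the bug
    }
    return { status };
  }
  getTransfers(senderName) {
    return this.repository.findBySender(senderName);
  }
}

const repository = new TransferRepository();

// Hand-rolled spy on save(), standing in for a mocking library.
let saveCalls = 0;
const originalSave = repository.save.bind(repository);
repository.save = (transfer) => {
  saveCalls += 1;
  originalSave(transfer);
};

const service = new TransferService(repository);
service.transfer({ sender: 'Daniel', credit: 50, amount: 100 });

// White-box check: save() was never called, so a mock-based test passes.
strictEqual(saveCalls, 0);

// Black-box check: the public API shows the declined transfer was persisted.
const history = service.getTransfers('Daniel');
console.log(history.length); // 1
```

A test that asserted on `history` instead of on `saveCalls` would have failed here, which is exactly the signal we want.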

Lines 8–10: Not relevant

❌ Bad (lines 8–10):

✅ Better: Just remove them, as these checks are not part of the test’s story

Related pattern: Single-door

Explanation: What’s wrong here? The coder of this test is ambitious and keen to catch any bug in the entire transfer flow. If the transfer currency is not dollars or the response does not contain mandatory fields, something is wrong. All of this is true and should be tested. But there is one more thing to consider: tests should be short and focused. If we test a long flow with many outcomes in a single test case, the result is a less readable test. Once we see a failure, is it a negligible detail, or is the entire system down? Identifying the root cause becomes harder. When testing, it’s just fine to sacrifice some details to emphasize the right trees in a huge forest.

Line 11: Implementation details

❌ Bad (line 11):

✅ Better: Remove it, we don’t care about the implementation — If the outcome is satisfactory, the implementation is likely to be fine

Related pattern: Black-box

Explanation: What’s wrong here? It seems like the code internally makes use of a field, ‘numberOfDeclined’, to store failures. Probably this will get used to fire metrics or report errors. This assertion checks the implementation of the software, not the publicly available outcome. Inherently, there are many more implementation details than outcomes. Should tests check every function, field, and interaction, we might end up with dozens or hundreds of tests per feature and, consequently, much longer test files. Alternatively, when checking only the publicly available result, there are fewer tests and the same issues are caught. Could it be that something is wrong internally but is not reflected outside? If so, the bug is not affecting the user; do we even care? Note that checking outcomes does not mean checking only API and UX matters. Ops is also a vital user, and checking that metrics, errors, and logs are fired is imperative. Those are outcomes, things that the box produces, not implementation details.

Lines 16–17: Overlapping

❌ Bad (lines 16–17):

✅ Better:

Related pattern: Single-door

Explanation: What’s wrong here? With great will and motivation, the writer wishes to check that the response array is valid. Great job, but it’s redundant: when asserting that the array does not contain the transfer, all the other checks are validated implicitly. Overlapping assertions are a very popular testing habit. Our intuition is to test more and cover more things: if we already wrote a test, why not include more and more assertions? Because it blurs the story. In testing, strive for less, focus the reader's mind, emphasize the important part. All the more so when the same confidence is achieved using less code, as demonstrated here.

Lines 20–26: Sighhh imperative code

❌ Bad (lines 20–26):

✅ Better:

Related pattern: Annotative

Explanation: What’s wrong here? The tester wants to ensure that the declined transfer is not saved in the system and is not retrievable, so she loops over all the user’s transfers to ensure it is not there. Seems legit, doesn’t it? No, this is imperative code; one must skim through the implementation to get the intent. If a team goes this route and allows all the programming guns (loops, if/else, inheritance, try-catch), there is no limit to the potential complexity. A better path is keeping the tests super-simple with declarative (annotative) code. This style involves no implementation details: just read and understand immediately. Practically, stick to declarative assertion libraries (Chai expect, Jest matchers). If the community does not offer the desired assertion logic, write a custom one. Configure linters that prohibit imperative code in tests, like jest/no-if. When a complexity checker is in use, allow a complexity level of no more than 1 (totally flat code).
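When no ready-made matcher fits, a custom assertion can keep the test body flat. A small sketch using Node's built-in assert module; the helper name `assertHistoryExcludes` and the sample data are invented for illustration:

```javascript
const { ok } = require('node:assert/strict');

// Hypothetical custom assertion: the searching logic lives here once,
// so the test body reads as a single declarative sentence.
function assertHistoryExcludes(history, transferId) {
  const found = history.some((transfer) => transfer.id === transferId);
  ok(!found, `Expected history not to include transfer ${transferId}`);
}

// Made-up data standing in for what the service would return.
const declinedTransferId = 'transfer-2';
const senderHistory = [{ id: 'transfer-1', amount: 20 }];

// Assert: no loop and no if/else inside the test itself.
assertHistoryExcludes(senderHistory, declinedTransferId);
```

With Jest, a built-in matcher such as `toContainEqual` combined with `.not` would likely serve the same purpose without any custom code.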

✨ The Result: Short, dead-simple, and beautiful test

Remember that long and cumbersome test that we’ve started with? Here it is now after applying the BASIC principles — Short and concise, isn’t it?
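The refactored test itself is not reproduced here. As a sketch of the kind of test the BASIC principles lead to, under invented names (`TransferService`, `creditPolicy`, `factorMoneyTransfer`, and the request shape are all assumptions), it might look like this:

```javascript
const { deepStrictEqual } = require('node:assert/strict');

// Minimal stand-in so the sketch runs without Jest or Mocha.
const test = (title, body) => body();

// Hypothetical, simplified stand-in for the remittance service.
class TransferService {
  constructor(options = {}) {
    this.options = options;
    this.transfers = [];
  }

  transfer(request) {
    const isOverdraft = request.transferAmount > request.sender.credit;
    if (isOverdraft && this.options.creditPolicy === 'zero') {
      return { status: 'declined' };
    }
    this.transfers.push(request);
    return { status: 'approved' };
  }

  getTransfers(senderName) {
    return this.transfers.filter((t) => t.sender.name === senderName);
  }
}

// Hypothetical factory: defaults plus the overrides that matter to the test.
function factorMoneyTransfer(overrides = {}) {
  return {
    sender: { name: 'Daniel', credit: 100 },
    receiver: { name: 'Rose' },
    transferAmount: 10,
    ...overrides,
  };
}

test('When sender has no credit, then the declined transfer is not in the history', () => {
  // Arrange
  const transferService = new TransferService({ creditPolicy: 'zero' });
  const transferRequest = factorMoneyTransfer({
    sender: { name: 'Daniel', credit: 50 },
    transferAmount: 100,
  });

  // Act
  transferService.transfer(transferRequest);

  // Assert
  deepStrictEqual(transferService.getTransfers('Daniel'), []);
});
```

The test body has three statements, one action, one outcome checked through the public API, and every value that explains the result is visible inside the test.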

A few words before we say goodbye

The art of testing is also about gaining the right amount of confidence with the minimal effort possible. Even Kent Beck, ‘father of TDD’, the most extremist testing paradigm, wrote once:

I get paid for code that works, not for tests, so my philosophy is to test as little as possible to reach a given level of confidence

Minimizing test effort is as important as increasing test coverage. This post covered how to reduce the time spent dealing with test complexity, an important cornerstone of an efficient testing strategy. However, one might still have too much testing or anemic testing. These are the next two achievements to unlock on the way to testing serenity.

Before you leave, here is a small gift: A poster of the key points that were covered.