Event Sourcing for an Inventory Availability Solution

- ATF — Available to Fulfill inventory

- On-Hand — Physical amount of Inventory available

- SKU — Stock Keeping Unit, which represents a distinct type of item for sale.

- Location — Representation of a physical location, like a store or warehouse, where SKUs are present

- Location Group — A Logical aggregation of typically one or more Locations.

- Reservation or Inventory Reservation — Reserving a quantity of a SKU. For example: Reservation of 5 iPod-Nanos-Red

- Inventory Records — A quantity for a SKU at a particular location.

Salesforce Commerce Cloud is a product that provides e-commerce solutions for both business-to-business (B2B) and business-to-consumer (B2C) companies. To continue enhancing what we are able to offer our customers, we needed to build a multi-tenant, omnichannel inventory availability solution that could be shared by multiple Salesforce products, including B2C Enterprise, Order Management, and B2B. This solution needed to be highly available, highly performant, and highly accurate in its bookkeeping, while unlocking new features like Location Level Inventory Aggregation.

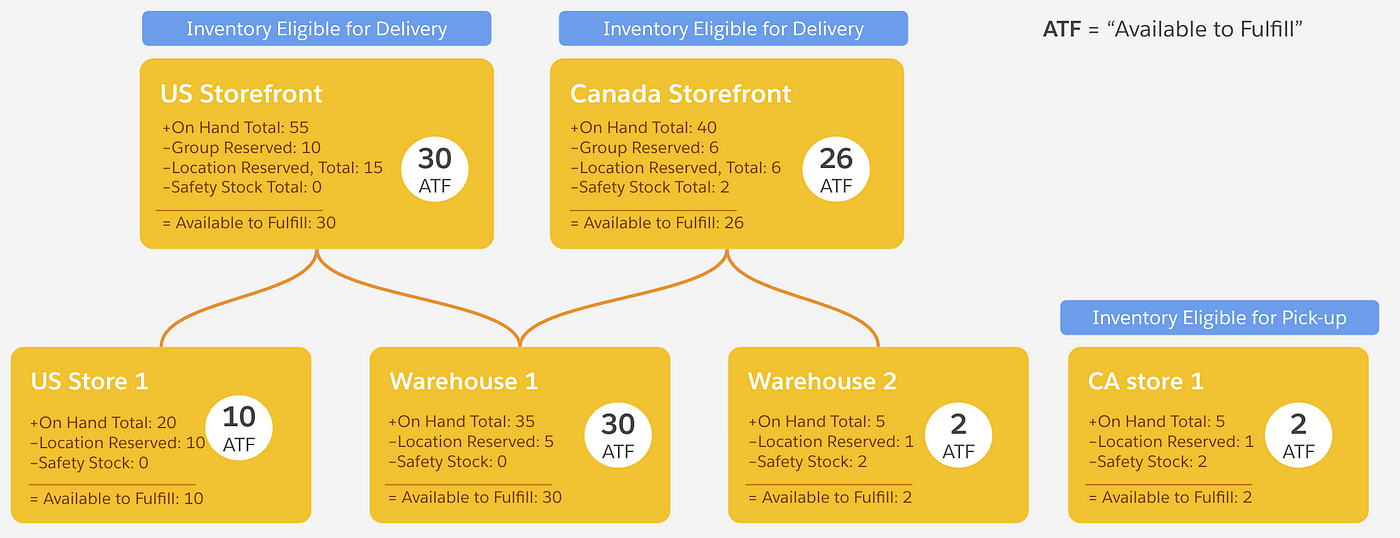

Location Level Inventory Aggregation is a feature that aggregates inventory from one or more locations into a Location Group, so that inventory reservations can be made at either the Location or the Location Group level for BOPIS (Buy Online, Pick Up In Store) and other omnichannel scenarios.

The solution also had to handle high-contention reservations and the row-lock concerns that come with scenarios like flash sales, where a few highly sought-after items are sold in a limited time window.

Put yourselves in the shoes of a major retailer with the holiday shopping season fast approaching and you’ll be primed to understand the need for this solution.

Requirements

- Accuracy: Inventory reservations must be accurate.

  - No duplicate reservations should be allowed.

  - The solution must prevent overselling, i.e., prevent an inventory reservation from happening if there is no quantity available.

- Consistency: Availability reads can display relatively stale inventory levels and do not need to be immediately consistent with what is written.

  - Inventory updates do not need to be reflected in a call to obtain availability immediately after the update. Up to 60 seconds of staleness between a write and its appearance in reads is acceptable.

The solution was expected to support thousands of tenants globally, with the maximum number of inventory records per tenant extending into the billions. It also needed to process hundreds of reservation requests per second with low latency while returning the availability of SKUs for each tenant at comparable rates. There was also a requirement to maintain an audit log of changes to the inventory for diagnosability and forensics.

Thinking back to our imagined role as a major retailer, we can put these requirements even more simply: if someone reserves a pair of, say, sneakers, that reservation needs to be based on inventory that actually exists, and the person shouldn’t be able to reserve the same pair of shoes twice. As the retailer, you need everything behind the scenes of your website to happen seamlessly.

So how did we satisfy all of these requirements in a way that could scale to the levels needed by our many customers?

Direction for Inventory Solution

The Event Sourcing pattern is an approach to operations around data where changes are recorded to an append-only datastore, in sequence, as discrete immutable events. The store behaves as the system of record, maintaining the history of changes, and domain objects can be materialized by replaying the individual events and folding them into the aggregate.

Event Sourcing

Or, as Greg Young put it more simply:

Greg Young on Event Sourcing

Reconstituting the aggregate by folding up all the events during every query is not tractable. For this reason, SNAPSHOTs are implemented, and only the events since the last snapshot are folded in to provide the current state of the aggregate. We should also note that the Event Log is an ordered sequence of facts about things that have happened, not a series of intentions or commands.

Inventory availability mutations typically occur to change on-hand values or create reservations. These operations can positively or negatively affect the Available to Fulfill (ATF) of a stock-keeping unit (SKU, basically a barcode, but used here to mean more generally “an item”).

For the sake of illustration in this blog, let us consider a fictional merchant, Acme. Acme has a website, acme.com, and has a warehouse in Salt Lake City, UT, from where they ship their merchandise. In addition, Acme also has physical stores in Las Vegas, NV and Seattle, WA. These two stores also support shipping from the store of products purchased on acme.com.

Acme Location Graph Setup

In the example, we have a SKU, acme-hat-blue, which is present at the warehouse and at the Seattle and Las Vegas stores. The warehouse has 220 on-hand and 20 reservations made against it, effectively leaving 200 items that the warehouse can fulfill, i.e., that are available to be sold. The Location Inventory State is shown below:

Location Inventory Aggregate

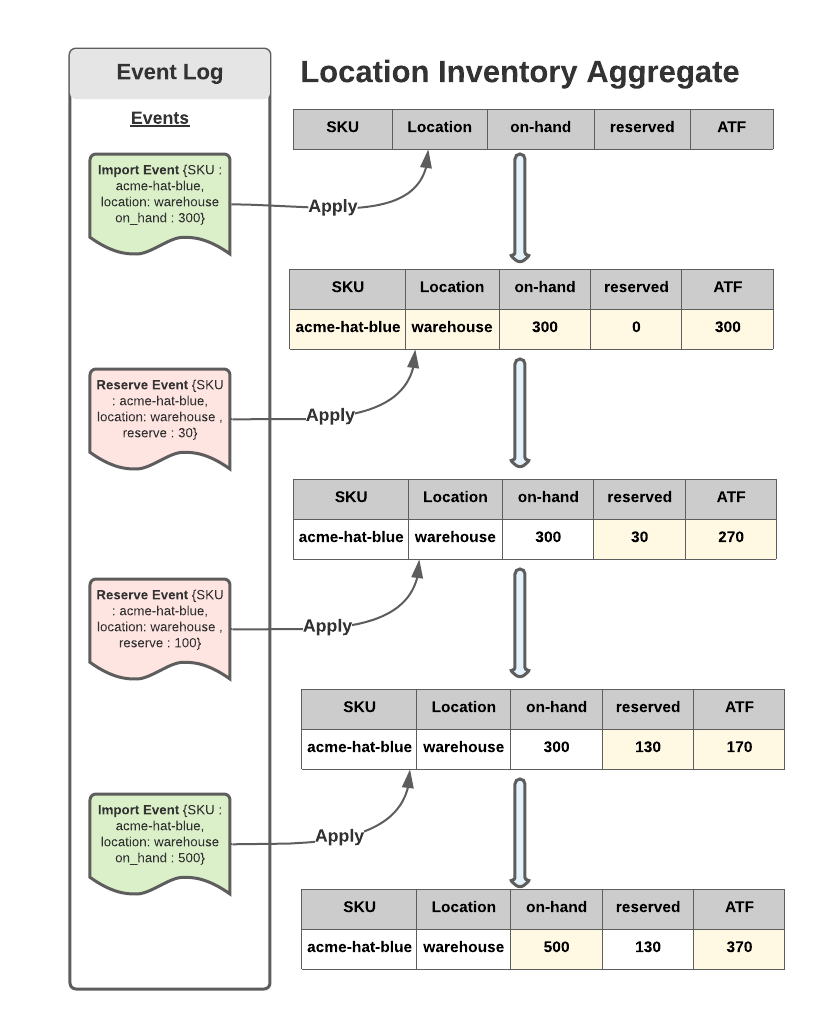

In the Event Sourcing model, a bunch of immutable events occur and these are then applied in order to build the state of the aggregate.

The following shows a sample of how the Location Inventory Aggregate is built over time with different events. The yellow rows on the table show the impacted data points at each state:

Location Inventory Aggregate Updates over time

In the example shown above, the initial state of the aggregate is NULL, i.e., nothing in it. When the first import event comes in, it gets applied, and the aggregate reflects the same. Subsequent reservation events affect the aggregate similarly, thereby altering the ATF number.
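As a rough illustration of the fold described above, the sketch below applies a short list of events, in order, to an initially empty aggregate. The event shape, the `IMPORT`/`RESERVATION` kinds, and the split of the 20 reserved units into two reservations are assumptions made for this example; the actual event schema is not shown in this post.

```python
from dataclasses import dataclass
from functools import reduce
from typing import Optional


@dataclass(frozen=True)
class Event:
    # Hypothetical event shape, for illustration only.
    kind: str       # "IMPORT" or "RESERVATION"
    quantity: int


@dataclass(frozen=True)
class Aggregate:
    on_hand: int = 0
    reserved: int = 0

    @property
    def atf(self) -> int:
        # Available to Fulfill = on-hand minus reserved
        return self.on_hand - self.reserved


def apply(state: Optional[Aggregate], event: Event) -> Aggregate:
    """Fold one event into the aggregate and return the new state."""
    state = state or Aggregate()  # the initial state is None (nothing recorded yet)
    if event.kind == "IMPORT":
        # Assumption: an import sets the on-hand count outright.
        return Aggregate(on_hand=event.quantity, reserved=state.reserved)
    if event.kind == "RESERVATION":
        return Aggregate(on_hand=state.on_hand, reserved=state.reserved + event.quantity)
    raise ValueError(f"unknown event kind: {event.kind}")


events = [Event("IMPORT", 220), Event("RESERVATION", 5), Event("RESERVATION", 15)]
warehouse = reduce(apply, events, None)
print(warehouse.on_hand, warehouse.reserved, warehouse.atf)  # 220 20 200
```

Because `apply` never mutates its input, replaying the same events always yields the same aggregate, which is what makes the event log usable as the system of record.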

The important thing to note here is that the Event Log is a sequence of persistent facts that have occurred, and, at any time, the aggregate can be reconstituted from the Event Log by replaying all events since inception. However, this is not likely to be tractable as the number of events grows. To avoid this, events are folded and snapshotted at a regular interval and stored in an entity called Location Inventory Aggregate as shown.
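The snapshot idea can be sketched as follows: keep the folded totals together with the sequence number of the last event folded in, and apply only newer events on top. The `Snapshot` fields and the tuple-based log entries are hypothetical names chosen for this example, and the log is assumed to be ordered by sequence number.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Snapshot:
    # Hypothetical snapshot shape: folded totals plus the last sequence folded in.
    on_hand: int
    reserved: int
    last_sequence: int


# Event log entries as (sequence_number, kind, quantity); illustrative only.
LogEntry = Tuple[int, str, int]


def fold_since(snapshot: Snapshot, log: List[LogEntry]) -> Snapshot:
    """Apply only the events newer than the snapshot and return a fresh snapshot."""
    on_hand, reserved, last = snapshot.on_hand, snapshot.reserved, snapshot.last_sequence
    for sequence, kind, quantity in log:
        if sequence <= snapshot.last_sequence:
            continue  # already folded into the snapshot
        if kind == "IMPORT":
            on_hand = quantity  # assumption: an import sets on-hand outright
        elif kind == "RESERVATION":
            reserved += quantity
        last = sequence
    return Snapshot(on_hand, reserved, last)


log = [(1, "IMPORT", 220), (2, "RESERVATION", 5), (3, "RESERVATION", 15), (4, "RESERVATION", 2)]
snapshot = Snapshot(on_hand=220, reserved=20, last_sequence=3)  # taken after event 3
current = fold_since(snapshot, log)
print(current)  # Snapshot(on_hand=220, reserved=22, last_sequence=4)
```

Folding from the snapshot and folding from an empty state give the same result here; the snapshot only shortens the replay.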

Using Subscriptions to create the Group Inventory View

Because we needed to surface Location Group inventory by aggregating inventory across different locations, we materialize an entity called GroupInventoryView, which holds the aggregated inventory counts.

Group Inventory View

GroupInventoryView entries are computed by rolling up (folding) the inventory availability of the locations that are part of the group. One of our initial approaches computed this aggregation on the fly: when an API call requested a Location Group’s inventory, the inventory of each member location was combined to serve the group-level numbers. This, however, proved to be a performance concern because of the extra I/O and compute added to each invocation and the resulting slower response times.

To address this problem, we took advantage of one of the core benefits of Event Sourcing: subscriptions, where additional actions can be taken by subscribing to the event-sourced log. This is similar to Change Data Capture (CDC) in databases. We used this CDC-like ability to roll up Location-level inventory availability into the GroupInventoryView by subscribing to the event stream, updating the Location Group numbers, and persisting them to the GroupInventoryView. This approach worked for us because some staleness is acceptable for GroupInventoryView records.

Group Inventory View Update using Event Sourcing Subscription Feature
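A minimal sketch of the subscription-based roll-up might look like the following. Everything here is an assumption made for illustration: the toy `EventLog`, the `(location_id, sku, atf_delta)` event shape, and the group membership map for the Acme example. The subscriber is also called synchronously for simplicity, whereas a real subscription would typically be processed asynchronously, which is where the acceptable staleness comes from.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Tuple

# Hypothetical event: (location_id, sku, atf_delta).
Event = Tuple[str, str, int]

# Illustrative group membership for the Acme example (not from the original post).
LOCATION_GROUPS: Dict[str, List[str]] = {
    "warehouse-slc": ["acme-online"],
    "store-las-vegas": ["acme-online", "acme-stores"],
    "store-seattle": ["acme-online", "acme-stores"],
}


class EventLog:
    """Toy append-only log that notifies subscribers after each append."""

    def __init__(self) -> None:
        self._events: List[Event] = []
        self._subscribers: List[Callable[[Event], None]] = []

    def subscribe(self, handler: Callable[[Event], None]) -> None:
        self._subscribers.append(handler)

    def append(self, event: Event) -> None:
        self._events.append(event)
        for handler in self._subscribers:
            handler(event)


# GroupInventoryView stand-in: (group_id, sku) -> aggregated ATF
group_inventory_view: Dict[Tuple[str, str], int] = defaultdict(int)


def roll_up(event: Event) -> None:
    """Fold a location-level change into every group the location belongs to."""
    location_id, sku, atf_delta = event
    for group_id in LOCATION_GROUPS.get(location_id, []):
        group_inventory_view[(group_id, sku)] += atf_delta


log = EventLog()
log.subscribe(roll_up)
log.append(("warehouse-slc", "acme-hat-blue", 200))  # warehouse contributes 200 ATF
log.append(("store-seattle", "acme-hat-blue", 30))   # store import
log.append(("store-seattle", "acme-hat-blue", -2))   # reservation of 2
print(group_inventory_view[("acme-online", "acme-hat-blue")])  # 228
print(group_inventory_view[("acme-stores", "acme-hat-blue")])  # 28
```

Reads of a group's inventory then become a single lookup in the view rather than a fan-out across every member location.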

High Contention on Select SKUs during Reservations

High Contention Reservation

One of our primary objectives is to avoid overselling, as outlined in the first functional requirement above, while also providing a performant and responsive experience during inventory reservation. When inventory approaches low levels and more than one caller is competing to grab the item, the usual approach with a transactional datastore, locking the row and then updating/decrementing the inventory, results in large latency due to high contention. In other words, a website, store terminal, or other consumer attempting to do inventory reservations would be slow, and the request might even error out without completing, so the user experience of the person trying to reserve the item would suffer.

To address this problem, we decided to use an optimistic concurrency approach to avoid row locking. We use a monotonically increasing Event Sequence number associated with each SKU-Location record.

The following shows sample Location Inventory Events for Reservation and Inventory Import:

Location Inventory Event for Reservation —

Location Inventory Event for Inventory Import —

The algorithm to apply a reservation event looks like this:

The event log is queried to determine the sequence number of the last inserted event. The Reservation Event’s sequence number is set to one above that last known sequence number. An append of the event is then attempted on the event log. A constraint on the event log ensures uniqueness of [Location-id, SKU, event_sequence_number], so if another thread has already inserted an event for the same SKU/Location with that sequence number, this insert fails. The insert is retried a few times before giving up.
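The steps above can be sketched with an in-memory stand-in for the event log. This is not the ZOS implementation; the `EventLog` class, `DuplicateSequenceError`, and `append_reservation` are hypothetical names, and the lock inside the class only models the datastore's atomic insert with a uniqueness constraint. Callers themselves never hold a lock across the read and the write. The sketch covers only the sequence-number conflict handling and does not check available inventory.

```python
import threading
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, Tuple


class DuplicateSequenceError(Exception):
    """Raised when (location_id, sku, sequence) already exists in the log."""


class EventLog:
    """In-memory stand-in for a store with a uniqueness constraint on the key."""

    def __init__(self) -> None:
        self._rows: Dict[Tuple[str, str, int], dict] = {}
        self._guard = threading.Lock()  # models the store's atomic insert

    def last_sequence(self, location_id: str, sku: str) -> int:
        with self._guard:
            seqs = [s for (loc, k, s) in self._rows if loc == location_id and k == sku]
        return max(seqs, default=0)

    def insert(self, location_id: str, sku: str, sequence: int, payload: dict) -> None:
        key = (location_id, sku, sequence)
        with self._guard:
            if key in self._rows:
                raise DuplicateSequenceError(key)
            self._rows[key] = payload


def append_reservation(log: EventLog, location_id: str, sku: str,
                       quantity: int, max_attempts: int = 5) -> int:
    """Append a reservation event optimistically; return its sequence number."""
    for _ in range(max_attempts):
        sequence = log.last_sequence(location_id, sku) + 1
        try:
            log.insert(location_id, sku, sequence, {"type": "RESERVATION", "quantity": quantity})
            return sequence
        except DuplicateSequenceError:
            continue  # another writer took this sequence number; re-read and retry
    raise RuntimeError("gave up after repeated sequence conflicts")


log = EventLog()
with ThreadPoolExecutor(max_workers=4) as pool:
    futures = [pool.submit(append_reservation, log, "warehouse-slc", "acme-hat-blue", 1)
               for _ in range(4)]
    sequences = sorted(f.result() for f in futures)
print(sequences)  # [1, 2, 3, 4]
```

With four writers each appending once, a writer can lose at most three races, so five attempts are always enough in this demo; under heavier contention the retry budget becomes a tuning decision.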

Staleness of Read Data

Reads performed against the inventory solution by consumers are not promised to be strongly consistent.

Weakly Vs. Strongly Consistent Read

Queries performed against the aggregate represent data that is not guaranteed to be strongly consistent and reflective of the latest state of the event log. This is acceptable for almost all read use cases where stale data on the READ side is fine. If a more consistent read were required, it would entail reading in the Location Inventory Aggregate and applying all events in the stream to it in real time, which could result in higher latency.

Other Options Considered for the Solution

We considered other options to solve this problem, such as directly mutating rows and then having some form of Change Data Capture (CDC) to capture the changes for auditability and forensics. During the evaluations, it became more apparent that, rather than maintaining a separate Event Stream of changes, using Event Sourcing Pattern with its Event Log gave us the historical changes baked into the solution without having to deal with the complexities of a CDC and a separate log.

CDC

We also wanted our solution to avoid distributed transactions, for example creating an entry in a database and then firing a message into a message broker, because such a pair of operations is inherently unreliable from an atomicity perspective.

Resulting Solution

To implement the solution, we had to pick the appropriate data store and eventing system. The following were some of the needs of our Event log and Aggregates:

Event log

- Event log is totally ordered, immutable (attempts to update or delete events are rejected) and is append-only

- Event log contains each event exactly once (attempts to append a duplicate event are rejected)

- Event log append may enforce additional user-defined constraints (e.g., attempt to append a reservation event is rejected if insufficient inventory available)

- Event log appends are high-performance, with predictable latency on inserts.
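To make the first three properties concrete, here is a small sketch of a log for one SKU at one location that exposes only an append operation, rejects duplicate events, and enforces one user-defined constraint (no reservation beyond what is available). The class and field names are hypothetical, and a real store would enforce these rules in the datastore rather than in process memory.

```python
from typing import List, NamedTuple, Set, Tuple


class Event(NamedTuple):
    # Hypothetical fields, for illustration only. NamedTuple instances are immutable.
    event_id: str
    kind: str        # "IMPORT" or "RESERVATION"
    quantity: int


class AppendRejected(Exception):
    """Raised when an append violates a log constraint."""


class LocationEventLog:
    """Append-only log for a single SKU at a single location."""

    def __init__(self) -> None:
        self._events: List[Event] = []
        self._seen_ids: Set[str] = set()
        self._on_hand = 0
        self._reserved = 0

    def append(self, event: Event) -> int:
        """Validate and append an event; return its sequence number."""
        if event.event_id in self._seen_ids:
            raise AppendRejected(f"duplicate event {event.event_id}")
        if event.kind == "RESERVATION":
            if event.quantity > self._on_hand - self._reserved:
                raise AppendRejected("insufficient inventory")
            self._reserved += event.quantity
        elif event.kind == "IMPORT":
            self._on_hand = event.quantity  # assumption: import sets on-hand outright
        else:
            raise AppendRejected(f"unknown event kind {event.kind}")
        self._events.append(event)
        self._seen_ids.add(event.event_id)
        return len(self._events)

    def events(self) -> Tuple[Event, ...]:
        # Read-only copy; the class offers no update or delete operation.
        return tuple(self._events)


log = LocationEventLog()
log.append(Event("e1", "IMPORT", 3))
log.append(Event("e2", "RESERVATION", 2))
for bad in (Event("e2", "RESERVATION", 1), Event("e3", "RESERVATION", 2)):
    try:
        log.append(bad)
    except AppendRejected as err:
        print("rejected:", err)
print(len(log.events()))  # 2
```

Rejected appends leave the log untouched, so the log continues to contain only facts that actually happened.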

From an aggregate perspective, we needed to make sure the solution supports SNAPSHOTs.

We considered several popular log-like data-stores like Kafka, Cassandra, and EventStore to build the above but had certain reservations at that time around each of them:

- Kafka — Did not have the ability to support unique events (not to be confused with Kafka Producer idempotence). Kafka also made it challenging to support consistently querying/reading all events for a particular SKU+Location without an explosion of Topics.

- EventStore — Seemed to have challenges with scaling of high throughput writes, though reads looked more promising.

- Cassandra — Cassandra had the ability to enforce event uniqueness with LWT (Light Weight Transactions) albeit with higher latency.

Our solution ended up using an in-house data service called Zero Object Service (ZOS), which is an elastically scalable microservice designed to handle data storage and retrieval for multi-tenant, metadata-driven, high-volume, and low-latency use cases. It does so by providing a set of template patterns like Event Sourcing and Reservation Sets that are built on top of key-value datastores that support per-row atomic writes with strong consistency. The actual implementation of the ZOS Event Sourcing template is beyond the scope of this blog post, but, with ZOS, the Inventory service was able to achieve its desired features for event sourcing and meet the desired scale. The solution was deployed on public cloud across multiple global regions.

Learnings from our Implementation

Our implementation and adoption of the pattern left us with some learnings that we’d like to share:

Consistency and Staleness

One of the foremost things that stands out is that our Product and Engineering teams embraced the concept of eventual consistency and accepted that inventory numbers can have different degrees of staleness at different points of the architecture. Inventory on a web page might show as available, but, when you try to buy it, it could be sold out. Product searches and other indices do not need to reflect 100% consistent inventory results, and neither do Product detail or Product listing pages. But, when attempting to Reserve/Check-out, it is extremely important to be accurate so as not to promise something that might not be available. For that reason, the writes for Inventory reservations (as we refer to them) were strongly consistent operations.

Event Sourcing Pattern

Event Sourcing as a pattern can be a bit difficult to wrap one’s head around. It is easily confused with a simple event stream/topic, where the messages themselves are no longer relevant once the stream is processed. Our team had to go through a learning curve around Event Sourcing, how it differs from CQRS, and the benefits it brings over straight-up manipulation of data and CRUD. Embracing the need to maintain two data points, namely an event log and the entity/aggregate/view, instead of interacting with just the entity/aggregate/view, took some time to digest. The fact that the Event Sourcing pattern promotes the separation of Write/Read paths, thereby enabling performance optimizations on either end, was a big benefit that we observed in our performance tests. Building aggregates from the stream while coalescing multiple event records was a performance optimization that reduced the number of I/O operations and saved cost. Avoiding lock contention among multiple threads by serializing the updates to each entity onto a single thread for mutation appeared to give us good performance during high-contention scenarios like flash sales.

Another benefit of Event Sourcing is subscriptions and providing our consumers the ability to subscribe for changes, which meant that they could cache the availability state closer to their application and reach back to the inventory solution for deltas (changes) while always using the inventory solution for performing strongly consistent reservations. This effectively led to improved performance for consumers as their data was closer to them for the READ path, as well as reducing the cost to serve, as the Inventory Solution did not have to be accessed for every GET availability operation.
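On the consumer side, the caching idea could look roughly like this: keep a local availability map and a cursor, and apply only the deltas that are newer than the cursor. The `(sequence, sku, atf_delta)` change shape and the `AvailabilityCache` class are assumptions for this sketch, not the actual subscription API.

```python
from typing import Dict, List, Tuple

# Hypothetical change-feed entry: (sequence, sku, atf_delta)
Change = Tuple[int, str, int]


class AvailabilityCache:
    """Consumer-side cache that is kept current by applying deltas."""

    def __init__(self) -> None:
        self.atf: Dict[str, int] = {}
        self.cursor = 0  # sequence number of the last change applied

    def apply(self, changes: List[Change]) -> None:
        for sequence, sku, delta in changes:
            if sequence <= self.cursor:
                continue  # already applied; makes redelivery harmless
            self.atf[sku] = self.atf.get(sku, 0) + delta
            self.cursor = sequence


cache = AvailabilityCache()
cache.apply([(1, "acme-hat-blue", 200), (2, "acme-hat-blue", -5)])
# The second batch redelivers change 2 and adds change 3.
cache.apply([(2, "acme-hat-blue", -5), (3, "acme-hat-blue", -1)])
print(cache.atf["acme-hat-blue"], cache.cursor)  # 194 3
```

The cache serves the READ path only; reservations would still go to the inventory solution, where they are strongly consistent.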

Audit and Forensics

The benefit of ensuring we have a historical log of changes with the Event Sourcing system without the need for a supplemental audit log helped us many times during forensics to replay and re-construct states. The audit is not something that is an accessory to the primary model but is instead what drives the model.

Below are a couple of spots where we found the event log to be very helpful for supporting live customers:

- The event log provides footprints to trace back the path taken to arrive at the current inventory state for a SKU. Providing the event log to consumers as a self-service tool helped them troubleshoot the actions leading to the current state.

- The release version of the service is stamped on every event, which helps in finding the affected events when a bug is introduced in a release. We can then isolate the affected events and replay them to get to the correct state.

Performance

The Inventory Availability Service → Zero Object Service → Key/Value Store architecture delivered the throughput and responsiveness we needed for inventory reservations and availability retrieval.

Conclusion

Since launching in February 2021, the Inventory solution has garnered the interest and adoption of many brands globally. The solution offers a headless API as part of the overall suite of Commerce API offerings. (Read more about that at the Commerce Cloud Developer Center.) It integrates with Salesforce B2C Commerce, which many merchants have loved using over the years, and also with Salesforce Order Management.

We would like to thank and acknowledge Robert Libby and Benjamin Busjeager for their contributions toward this solution and also for helping review this blog. We also would like to acknowledge and appreciate all the teamwork and effort from members of Product and Technology at Salesforce who were involved in the making of this offering.

Interested in working on problems like these? Join our Talent Network or reach out to Sanjay on his blog, Twitter, or LinkedIn and Balachandar on his LinkedIn.