Fine-tuning a reasoning model with GRPO for passport data extraction

Extracting structured data from passports isn’t just about OCR—it’s about reasoning. Traditional OCR methods struggle with formatting inconsistencies, multilingual text, and real-world variations, but fine-tuning a model with GRPO enhances contextual understanding, improving accuracy and adaptability. This blog helps developers fine-tune a reasoning model with GRPO to optimize passport data extraction, covering key challenges, implementation techniques, and best practices.

Fine-tuning a language model isn’t just about feeding it data and hoping for the best. If you’re extracting structured data—like passport details—you need a model that reasons through the problem, not one that just memorizes patterns. That’s where Group Relative Policy Optimization (GRPO) comes in.

In this post, we’ll walk through fine-tuning a reasoning model for passport data extraction using GRPO. We’ll start with Supervised Fine-Tuning (SFT) and then refine it using reinforcement learning (RL) to improve accuracy and reasoning.

We'll use:

- Base model: Qwen/Qwen2.5-1.5B-Instruct
- Dataset: Custom Passport EN dataset
- Training method: SFT + GRPO

All code is available on GitHub.

Supervised fine-tuning (SFT) is effective for training a baseline model, but it struggles with generalization. When extracting structured data, slight variations in input format can lead to errors, and standard SFT lacks the adaptive reasoning needed to handle these cases.

This is where GRPO helps. The DeepSeekMath paper introduced GRPO as an RL post-training technique for improving the reasoning skills of large language models (LLMs). GRPO is a variant of PPO that drops the separate value (critic) model and instead estimates the baseline from the rewards of a group of outputs sampled for the same prompt. Rather than relying on heuristic search methods, it optimizes the model purely through RL, which helps the model generalize better to unseen input variations.

GRPO has been used in DeepSeek-R1, and its training approach appears similar to the methods used in OpenAI o1 and o3 models, though exact details are unconfirmed. The Hugging Face Science team is working to reproduce the DeepSeek-R1 training process in their Open-R1 project, which is worth exploring for more insights.

We'll implement GRPO using the TRL (Transformer Reinforcement Learning) library and focus on improving structured data extraction from passports.

To understand GRPO, let’s break it down with an example before diving into the technical details.

GRPO helps a model learn by comparing different actions within a group and making controlled updates. Instead of updating the model after every single observation, it collects several observations before adjusting its strategy, much like mini-batch gradient updates in deep learning.

Imagine a robot that can take one of three paths (A, B, or C) to reach a goal:

1. Sampling different paths: The robot tries each path three times and records the results:
   - Path A: reaches the goal 2 out of 3 times.
   - Path B: reaches the goal 1 out of 3 times.
   - Path C: reaches the goal 3 out of 3 times.

2. Evaluating performance: It calculates each path's success rate:
   - Path A → 66.67% success
   - Path B → 33.33% success
   - Path C → 100% success

3. Comparing paths: It identifies Path C as the best option but doesn’t completely ignore the other paths.

4. Adjusting strategy: The robot increases the probability of choosing Path C, but it occasionally tries A and B to avoid overfitting to one solution.

5. Controlled updates: Instead of jumping to a 100% preference for Path C, it gradually shifts probabilities while maintaining exploration.
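To make the robot example concrete, here is a small sketch of one group-relative update in plain Python. It treats each path's success rate as its reward, subtracts the group mean to get an advantage, and nudges a softmax policy by a small step. The step size (`step_size = 0.5`) and the softmax parameterization are hypothetical choices for illustration; this is a toy version of the idea, not how GRPO updates an LLM.

```python
import math

# Results from the example: (successes, trials) for each path.
results = {"A": (2, 3), "B": (1, 3), "C": (3, 3)}

# Step 2: the success rate is each path's reward.
rewards = {path: wins / trials for path, (wins, trials) in results.items()}

# Step 3: compare paths relative to the group average (the advantage).
group_mean = sum(rewards.values()) / len(rewards)
advantages = {path: r - group_mean for path, r in rewards.items()}

# Steps 4-5: start from a uniform policy (all logits 0.0) and take one small,
# controlled step in the direction of each path's advantage.
step_size = 0.5  # hypothetical learning rate
logits = {path: 0.0 + step_size * adv for path, adv in advantages.items()}


def softmax(scores):
    """Turn logits into a probability distribution."""
    z = sum(math.exp(v) for v in scores.values())
    return {k: math.exp(v) / z for k, v in scores.items()}


probs = softmax(logits)
for path in sorted(probs):
    print(f"Path {path}: reward={rewards[path]:.2f} "
          f"advantage={advantages[path]:+.2f} new prob={probs[path]:.2f}")
```

Path C ends up with the highest probability (about 0.39), while A and B keep a nonzero share: the policy shifts toward C without abandoning exploration. A larger step size would move probability toward C more aggressively.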

Now let's walk through the mathematics of the GRPO algorithm.

1. Policy and Actions

The policy, denoted $\pi_\theta$ (where $\theta$ represents the policy's trainable parameters), defines the decision-making strategy. For a given state $s$, the policy outputs a probability distribution over possible actions, written $\pi_\theta(a \mid s)$. This is the likelihood of selecting action $a$ in state $s$.

The objective is to optimize the policy parameters to maximize the expected cumulative reward $J(\theta)$, defined as:

$$
J(\theta) = \mathbb{E}_{\tau \sim \pi_\theta}\left[\sum_{t} r(s_t, a_t)\right]
$$

where $\tau = (s_0, a_0, s_1, a_1, \dots)$ is a trajectory (a sequence of states and actions), and $r(s_t, a_t)$ is the reward received at time $t$.

2. Group Sampling

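For a given state $s$, GRPO samples a group of $N$ actions, $\{a_1, a_2, \dots, a_N\}$, using the current policy $\pi_\theta$. Each action $a_i$ is drawn independently from the policy distribution:

$$
a_i \sim \pi_\theta(\cdot \mid s), \quad i = 1, \dots, N
$$

This creates a set of candidate actions to evaluate. For an LLM, the state is the prompt and each action is a complete sampled response.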

3. Reward Scoring

Each sampled action $a_i$ is assessed using a reward function $R(a_i)$, which quantifies the action's quality. Depending on the problem setup, this can be the immediate reward $r(s, a_i)$ or a discounted sum of future rewards starting from state $s$ and action $a_i$.

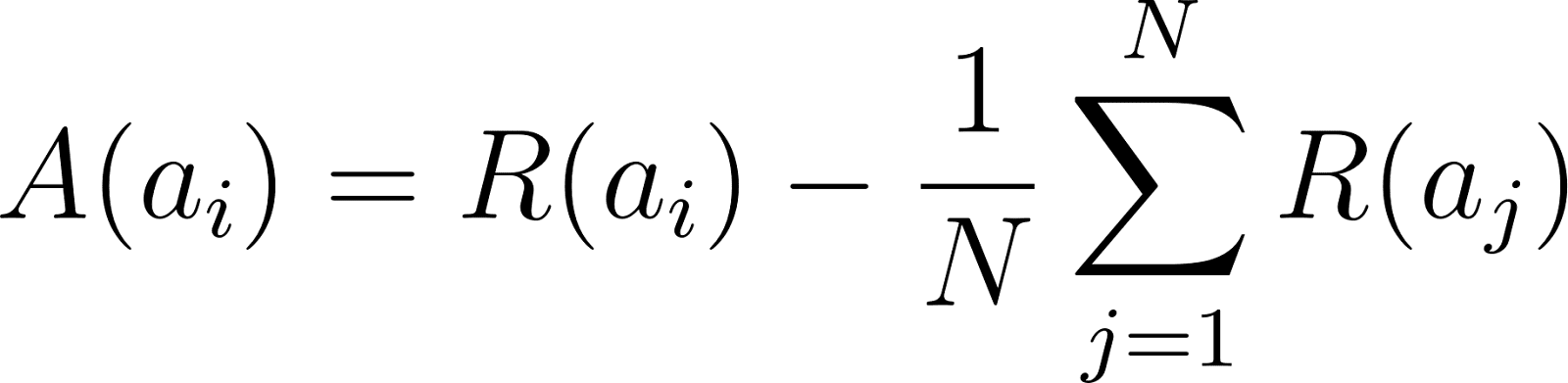

4. Advantage Calculation

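The advantage $A(a_i)$ measures how much better or worse action $a_i$ performs compared with the average performance across the sampled group. It is typically calculated as:

$$
A(a_i) = R(a_i) - \frac{1}{N} \sum_{j=1}^{N} R(a_j)
$$

where the second term is the average reward of all $N$ actions. A positive advantage indicates an above-average action, and a negative advantage a below-average one. In the DeepSeekMath formulation, this difference is also divided by the standard deviation of the group's rewards, which keeps advantages on a comparable scale across prompts.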
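The advantage formula translates directly into code. The sketch below assumes rewards arrive as a flat list in which each consecutive block of `num_generations` values belongs to the same prompt. The helper name `group_advantages`, the use of the population standard deviation, and the `eps` value are illustrative assumptions; `normalize=True` adds the DeepSeekMath-style division by the group's standard deviation.

```python
from statistics import mean, pstdev


def group_advantages(rewards, num_generations, normalize=True, eps=1e-4):
    """Group-relative advantages: each reward minus the mean reward of its group.

    `rewards` is a flat list where each consecutive block of `num_generations`
    values comes from completions sampled for the same prompt.
    """
    if num_generations < 1 or len(rewards) % num_generations != 0:
        raise ValueError("len(rewards) must be a positive multiple of num_generations")
    advantages = []
    for start in range(0, len(rewards), num_generations):
        group = rewards[start:start + num_generations]
        group_mean = mean(group)
        group_std = pstdev(group)
        for r in group:
            a = r - group_mean
            if normalize:
                a = a / (group_std + eps)  # scale by the group's spread
            advantages.append(a)
    return advantages


rewards = [2.0, 1.5, 1.0, 0.5,   # prompt 1: four completions with different scores
           1.0, 1.0, 1.0, 1.0]   # prompt 2: all four completions scored the same
print(group_advantages(rewards, num_generations=4, normalize=False))
```

With `normalize=False` this prints `[0.75, 0.25, -0.25, -0.75, 0.0, 0.0, 0.0, 0.0]`. The second prompt gets zero advantage everywhere: when every completion in a group scores the same, that group provides no learning signal.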

5. Policy Update

GRPO adjusts the policy parameters θ to favor actions with positive advantages (increasing their probability) and disfavor actions with negative advantages (decreasing their probability). This is done by optimizing the policy based on the advantage values, typically via gradient-based methods.

6. KL Divergence Constraint

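To prevent the updated policy $\pi_\theta$ from straying too far from the previous policy, GRPO imposes a Kullback-Leibler (KL) divergence constraint:

$$
D_{\mathrm{KL}}\left(\pi_{\theta_{\text{old}}}(\cdot \mid s) \,\|\, \pi_\theta(\cdot \mid s)\right) \le \delta
$$

where $\pi_{\theta_{\text{old}}}$ is the policy before the update and $\delta$ is a small threshold. This keeps policy improvements stable and gradual.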
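As a worked example of the constraint, the sketch below computes the exact KL divergence for the robot's three-path policy. It starts from a uniform old policy and compares two hypothetical updated policies against an assumed threshold `delta = 0.02`. For an LLM, the same quantity is defined over token distributions and has to be estimated from samples.

```python
import math


def kl_divergence(p, q):
    """Exact D_KL(p || q) for discrete distributions over the same actions."""
    return sum(p[a] * math.log(p[a] / q[a]) for a in p if p[a] > 0)


pi_old = {"A": 1 / 3, "B": 1 / 3, "C": 1 / 3}
candidates = {  # hypothetical updated policies
    "gradual shift": {"A": 0.33, "B": 0.28, "C": 0.39},
    "jump to C": {"A": 0.01, "B": 0.01, "C": 0.98},
}
delta = 0.02  # hypothetical threshold

for name, pi_new in candidates.items():
    kl = kl_divergence(pi_old, pi_new)
    verdict = "within" if kl <= delta else "violates"
    print(f"{name}: KL = {kl:.4f} ({verdict} the constraint)")
```

The gradual shift has a KL divergence of about 0.009 and satisfies the constraint, while jumping almost all probability onto Path C gives a KL of about 2 and would be rejected.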

7. GRPO Objective

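The overarching goal of GRPO is to maximize the expected cumulative reward $J(\theta)$ while keeping policy updates stable. This is achieved by balancing reward improvement (guided by the advantage) against the KL divergence term, which gives an optimization problem of the form:

$$
\max_\theta \; \mathbb{E}_{a_i \sim \pi_{\theta_{\text{old}}}(\cdot \mid s)}\left[\frac{\pi_\theta(a_i \mid s)}{\pi_{\theta_{\text{old}}}(a_i \mid s)}\, A(a_i)\right] - \beta\, D_{\mathrm{KL}}\left(\pi_{\theta_{\text{old}}}(\cdot \mid s) \,\|\, \pi_\theta(\cdot \mid s)\right)
$$

where $\beta > 0$ controls how strongly the KL term penalizes large policy changes. In the DeepSeekMath paper, the probability ratio is additionally clipped to $[1 - \varepsilon, 1 + \varepsilon]$ as in PPO, and the KL penalty is measured against a frozen reference model $\pi_{\text{ref}}$ (typically the starting model) rather than $\pi_{\theta_{\text{old}}}$.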
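The per-group objective can be sketched in a few lines. This version follows the DeepSeekMath formulation, which clips the probability ratio as in PPO and penalizes divergence from a frozen reference policy using the non-negative estimator pi_ref/pi_theta - log(pi_ref/pi_theta) - 1. It works on whole sampled actions rather than individual tokens. The defaults `clip_eps=0.2` and `beta=0.04` are illustrative values, not settings from this project. In practice, a library such as TRL computes this with tensors and autograd.

```python
import math


def grpo_objective(logp_new, logp_old, logp_ref, advantages, clip_eps=0.2, beta=0.04):
    """Clipped GRPO surrogate for one group of N sampled actions (to be maximized).

    Each argument is a list of length N. logp_new, logp_old and logp_ref are the
    log-probabilities of each sampled action under the current policy, the policy
    that generated the samples, and a frozen reference policy.
    """
    total = 0.0
    for lp, lp_old, lp_ref, adv in zip(logp_new, logp_old, logp_ref, advantages):
        ratio = math.exp(lp - lp_old)  # pi_theta(a_i|s) / pi_theta_old(a_i|s)
        clipped_ratio = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        # Pessimistic (min) of the unclipped and clipped terms, as in PPO.
        surrogate = min(ratio * adv, clipped_ratio * adv)
        # KL estimator: pi_ref/pi_theta - log(pi_ref/pi_theta) - 1, always >= 0.
        log_ratio_ref = lp_ref - lp
        kl = math.exp(log_ratio_ref) - log_ratio_ref - 1.0
        total += surrogate - beta * kl
    return total / len(advantages)


# Identical policies: ratio is 1 and KL is 0, so the objective is the mean advantage.
print(grpo_objective([0.0] * 4, [0.0] * 4, [0.0] * 4, [0.75, 0.25, -0.25, -0.75]))
```

Clipping caps how much a single update can gain from any one sample. For example, a sample whose probability doubled contributes at most 1.2 times its (positive) advantage. This plays the same stabilizing role as the KL constraint.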

Just like the robot learns from multiple trials, GRPO enables an LLM to refine its structured data extraction ability by:

- Evaluating different extraction strategies for variations in passport formats.
- Adjusting model predictions based on relative success rather than absolute correctness.
- Ensuring controlled updates, allowing for better generalization.

To build a high-quality dataset for passport information extraction, we needed a diverse and extensive collection of passport samples. We designed a structured framework to systematically extract key details while ensuring accuracy and consistency. Raw passport images and text had to be transformed into a structured format that machine learning models could efficiently process. To achieve this, we developed a token-based representation system that encodes essential details in a machine-readable format.

However, we needed to go beyond simple structuring and convert this format into a more intuitive, reasoning-based representation, essentially translating structured data into a "language" the model can process effectively. This lets us generate step-by-step reasoning sequences, which makes the representation well suited for training models to reason through information extraction tasks.

Inspired by Camel-AI, a multi-agent framework for synthetic data generation, we used the Gemini API to generate the reasoning traces for the dataset (as reflected in our code). The resulting dataset of 400 examples is available on GitHub.

Here's how we structured the dataset:

1. Field extraction: We systematically extract essential passport information, including:
   - Passport Number
   - Full Name
   - Gender
   - Nationality
   - Date of Birth
   - Place of Birth
   - Date of Issue
   - Date of Expiry
   - Place of Issue (if available)
   - Machine Readable Zone (MRZ)

2. Token-based representation: Instead of relying solely on free-text extraction, we encode passport data into structured tokens, ensuring uniformity across all entries. This makes it easier for models to learn patterns and generalize across different passports.
   - Entity tokens: Each extracted field is stored under a fixed key (e.g., `"passport_number": "200858064"`), making it easier for models to identify and process key details.
   - Date formatting: Dates are standardized to a single format (DD-MM-YYYY) across all samples.
   - MRZ encoding: The Machine Readable Zone (MRZ) is preserved as a key feature, allowing the model to cross-validate extracted details.

3. Dataset structure: Each passport entry in the dataset follows this structured format:

```json
{
  "passport_number": "200858064",
  "full_name": "Daniel Warren",
  "gender": "M",
  "nationality": "United Kingdom",
  "dob": "06-05-1945",
  "place_of_birth": "Gloucestershire",
  "place_of_issue": null,
  "issue_date": "12-12-2020",
  "expire_date": "06-05-2032",
  "mrz": "PAGBRDANIEL<<WARREN<<<<<<<<<<<<<<<<<<<<<<<<<\nB1137484W7GBR4505064M3205068200858064<<<<<50"
}
```

4. Step-by-step extraction process:
   - The system scans the passport document and detects predefined fields.
   - Each field is extracted with high-confidence OCR and natural language processing techniques.
   - The extracted information is formatted into structured JSON, ensuring consistency and completeness.
   - A verification step cross-checks the MRZ data with the extracted fields to validate correctness.

5. Scalability & diversity: Our dataset includes a variety of passports with different layouts, fonts, and security features, which improves robustness against variations in real-world documents. Because the pipeline is automated, it can be scaled to generate many more labeled passport samples for training.
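The MRZ cross-check mentioned in steps 2 and 4 can be sketched with the ICAO 9303 check-digit rule: repeating weights 7, 3, 1, letters mapped to 10-35, and the filler `<` counted as 0. The helpers below (`check_digit`, `verify_td3_line2`, `cross_check`) are hypothetical and not part of the project's code. The field offsets assume a standard two-line, 44-character passport (TD3) MRZ, and the sample fields are taken from the record in step 3.

```python
def char_value(ch):
    """ICAO 9303 character values: digits as-is, A-Z -> 10-35, filler '<' -> 0."""
    if ch.isdigit():
        return int(ch)
    if "A" <= ch <= "Z":
        return ord(ch) - ord("A") + 10
    if ch == "<":
        return 0
    raise ValueError(f"invalid MRZ character: {ch!r}")


def check_digit(field):
    """Weighted sum with repeating weights 7, 3, 1, modulo 10."""
    weights = (7, 3, 1)
    return sum(char_value(c) * weights[i % 3] for i, c in enumerate(field)) % 10


def verify_td3_line2(line2):
    """Check every check digit on line 2 of a two-line, 44-character passport MRZ."""
    if len(line2) != 44:
        raise ValueError("expected a 44-character TD3 MRZ line")
    composite = line2[0:10] + line2[13:20] + line2[21:43]
    return {
        "document_number_check": check_digit(line2[0:9]) == char_value(line2[9]),
        "birth_date_check": check_digit(line2[13:19]) == char_value(line2[19]),
        "expiry_date_check": check_digit(line2[21:27]) == char_value(line2[27]),
        "personal_number_check": check_digit(line2[28:42]) == char_value(line2[42]),
        "composite_check": check_digit(composite) == char_value(line2[43]),
    }


def to_mrz_date(ddmmyyyy):
    """Convert the dataset's DD-MM-YYYY dates to the MRZ's YYMMDD form."""
    day, month, year = ddmmyyyy.split("-")
    return year[2:] + month + day


def cross_check(record):
    """Validate MRZ check digits and compare MRZ dates and sex with the JSON fields."""
    line2 = record["mrz"].split("\n")[1]
    results = verify_td3_line2(line2)
    results["dob_matches_mrz"] = to_mrz_date(record["dob"]) == line2[13:19]
    results["expiry_matches_mrz"] = to_mrz_date(record["expire_date"]) == line2[21:27]
    results["gender_matches_mrz"] = record["gender"] == line2[20]
    return results


sample = {
    "gender": "M",
    "dob": "06-05-1945",
    "expire_date": "06-05-2032",
    "mrz": "PAGBRDANIEL<<WARREN<<<<<<<<<<<<<<<<<<<<<<<<<\nB1137484W7GBR4505064M3205068200858064<<<<<50",
}
print(cross_check(sample))
```

For the sample record, every check passes. Changing `dob` to `"07-05-1945"` makes `dob_matches_mrz` false, which is the kind of inconsistency the verification step is meant to catch.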

Goals

- Train the model to accurately extract structured passport information from raw OCR text.
- Establish a strong baseline for later stages of reinforcement learning or additional fine-tuning.

Methodology

We explored two training strategies:

- Direct extraction model: The model is trained to predict all passport fields directly from the raw OCR text.
- Step-by-step extraction model: The model is trained to extract each field sequentially, incorporating intermediate reasoning to verify information consistency (e.g., cross-checking MRZ data with extracted fields).

SFT Hyperparameters:

| Parameter | Value |
|---|---|
| model_name_or_path | Qwen/Qwen2.5-1.5B-Instruct |
| disable_gradient_checkpointing | true |
| finetuning_type | lora |
| deepspeed | ds0 |
| cutoff_len | 4096 |
| train_on_prompt | true |
| per_device_train_batch_size | 32 |
| gradient_accumulation_steps | 1 |
| learning_rate | 1.0e-5 |
| num_train_epochs | 1.0 |
| lr_scheduler_type | cosine |
| warmup_ratio | 0.1 |
| bf16 | true |

This structured approach ensures that our passport dataset is optimized for training AI models in document analysis, identity verification, and automated data extraction, making it highly reliable and scalable for real-world applications.

We use LLaMA-Factory for supervised fine-tuning.

To benchmark the fine-tuning performance, we trained the model on a system equipped with two RTX 3090 GPUs. Below is the recorded training time:

| Hardware | Num GPUs | Total training time | Time per step |
|---|---|---|---|
| 2x RTX 3090 | 2 | ≈ 7 minutes 45 seconds | ~3.10 s |

For comparison, we also fine-tuned the model on an A100 GPU to evaluate its efficiency:

| Hardware | Num GPUs | Total training time | Time per step |
|---|---|---|---|
| 1x A100 | 1 | ≈ 4 minutes 30 seconds | ~1.80 s |

After completing SFT, we applied GRPO to refine the model’s extraction capabilities. GRPO helps the model learn from its own generated extractions, iteratively improving accuracy and robustness.

Constructing the GRPO reward function

A well-designed reward function is essential for reinforcement learning. We developed a composite reward function that evaluates multiple aspects of accurate passport extraction:

- Format reward
  - Purpose: Ensures the model outputs responses in a structured format.
  - Mechanism: Uses a regular expression to verify that the output follows a predefined structure (`<think>...</think>` followed by `<answer>...</answer>`).
  - Reward: +1.0 for correctly formatted output, 0.0 otherwise.
- Accuracy reward
  - Purpose: Checks whether the model's output matches the ground truth.
  - Mechanism:
    i. Compares the model's JSON output with the labeled data.
    ii. If the extracted fields perfectly match the ground truth, the model receives the full reward.
    iii. If only some fields match, the reward is proportional to the number of correctly extracted fields.
  - Reward: +1.0 for a perfect match, a partial score for partial correctness, and 0.0 for invalid JSON.

Total reward calculation

- Final reward = Format reward + Accuracy reward
- Maximum possible reward: 2.0
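To make the arithmetic concrete, here is a simplified, self-contained sketch of how one completion would be scored under this scheme. The helper name `score_completion` and the sample strings are hypothetical. As a simplification, it only grants accuracy credit when the format check passes, and it reads the ground truth from a plain dict rather than from the dataset's conversation records.

```python
import json
import re

# <think>...</think> followed by <answer>{...}</answer>, with nothing after it.
ANSWER_PATTERN = re.compile(
    r"^<think>[\s\S]*?</think>\s*<answer>\s*(\{[\s\S]*?\})\s*</answer>\s*$"
)


def score_completion(completion, ground_truth):
    """Hypothetical helper: format reward (0 or 1) + field-level accuracy reward (0 to 1)."""
    match = ANSWER_PATTERN.match(completion)
    if match is None:
        return 0.0  # wrong format, and no answer block to grade
    format_reward = 1.0
    try:
        predicted = json.loads(match.group(1))
    except json.JSONDecodeError:
        return format_reward  # well-formed tags but invalid JSON: no accuracy credit
    correct = sum(1 for key, value in ground_truth.items() if predicted.get(key) == value)
    return format_reward + correct / len(ground_truth)


truth = {"passport_number": "200858064", "gender": "M",
         "dob": "06-05-1945", "expire_date": "06-05-2032"}
perfect = "<think>Read the fields and checked the MRZ.</think>\n<answer>" + json.dumps(truth) + "</answer>"
partial = perfect.replace('"M"', '"F"')  # one of four fields wrong
print(score_completion(perfect, truth))
print(score_completion(partial, truth))
```

The perfect answer scores 1.0 + 4/4 = 2.0, the maximum. The answer with one wrong field scores 1.0 + 3/4 = 1.75. A bare JSON answer without the tags scores 0.0.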

GRPO training hyperparameters

| Parameter | Value |
|---|---|
| learning_rate | 1e-6 |
| alpha | 128 |
| r | 128 |
| weight_decay | 0.1 |
| warmup_ratio | 0.1 |
| lr_scheduler_type | cosine |
| optim | paged_adamw_8bit |
| per_device_train_batch_size | 8 |
| gradient_accumulation_steps | 1 |
| num_generations | 4 |
| max_prompt_length | 2048 |
| max_completion_length | 2048 |
| max_grad_norm | 0.1 |

Data preparation

Before training, we need to format our dataset properly. Each data sample consists of a user query (passport data) and an assistant response (extracted structured details). We structure the conversation for the model using a helper function:

```python
SYSTEM_PROMPT = """
Respond in the following format:
<think>
Explain the steps taken to extract the requested information from the provided data.
Mention any challenges, assumptions, or decisions made (e.g., date formatting, handling missing data).
Ensure confidence in the extracted data and avoid guessing.
</think>
<answer>
Provide the extracted information in JSON format based on the specified schema.
Include only the fields listed in the schema and return null for any missing data.
</answer>

Task: Extract information from the provided document ONLY if you are confident. Do not guess or make up answers.
Return the extracted data in JSON format according to the schema below.
List the extracted information in the order it appears in the document.

Output schema:
{
  "id_number": "ID number of the person",
  "passport_number": "Passport number of the person",
  "full_name": "Full name of the person",
  "gender": "Gender of the person",
  "nationality": "Nationality of the person",
  "dob": "Date of birth of the person (format: DD MMM YYYY, e.g., 26 JUL 1969)",
  "place": "Place of birth of the person",
  "place_issue": "Place of issue of the passport",
  "issue_date": "Date of issue of the passport (format: DD MMM YYYY)",
  "expire_date": "Date of expiry of the passport (format: DD MMM YYYY)",
  "mrz": "Machine Readable Zone (full two-line code)"
}
"""


def make_conversation(example):
    """Prepare conversation data for training."""
    conversation = example["conversations"]
    # Take the first user and assistant turns; fall back to "" if a role is missing.
    user_content = next((msg["content"] for msg in conversation if msg["role"] == "user"), "")
    assistant_content = next((msg["content"] for msg in conversation if msg["role"] == "assistant"), "")
    return {
        # GRPOTrainer samples completions from this conversational prompt.
        "prompt": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": user_content},
        ],
        "response": assistant_content,
    }
```

We apply this function to the dataset to structure the input for training.

Reward functions

GRPO optimizes the model using reward signals. We define two reward functions:

- Format reward: Ensures the model outputs responses in a structured format.
- Accuracy reward: Compares the model output with ground truth data.

Format-based reward function

```python
import re


def format_reward(completions, **kwargs):
    """Reward function that checks if the completion has a specific format."""
    # <think>...</think> followed by <answer>{...}</answer> and nothing else.
    pattern = r"^<think>[\s\S]*?</think>\s*<answer>\s*\{[\s\S]*?\}\s*</answer>\s*$"
    # In conversational format, each completion is a list holding one assistant message.
    completion_contents = [completion[0]["content"] for completion in completions]
    print("format completion: ", completion_contents)  # debug logging
    return [1.0 if re.match(pattern, content) else 0.0 for content in completion_contents]
```

Accuracy-based reward function

```python
import json
import re


def accuracy_reward(prompts, completions, **kwargs):
    """Reward function that checks if the model output matches the ground truth."""
    rewards = []
    # Extra dataset columns (here, the original "conversations") arrive as kwargs.
    conversations_list = kwargs.get("conversations", [])
    for completion, conversations in zip(completions, conversations_list):
        try:
            assistant_response = next(
                (msg["content"] for msg in conversations if msg["role"] == "assistant"), ""
            )
            model_output = completion[0]["content"]
            # JSON inside <answer>...</answer> in the model's output.
            json_match = re.search(r"</think>\s*<answer>\s*(\{[\s\S]*\})\s*</answer>\s*$", model_output, re.DOTALL)
            # JSON following </think> in the reference answer.
            assistant_match = re.search(r"</think>\s*(\{[\s\S]*\})\s*$", assistant_response)
            if json_match is None or assistant_match is None:
                rewards.append(0.0)
                continue
            parsed_solution = json.loads(assistant_match.group(1))
            parsed_output = json.loads(json_match.group(1))
            if parsed_solution == parsed_output:
                rewards.append(1.0)
            else:
                # Partial credit: fraction of ground-truth fields reproduced exactly.
                matching_keys = sum(
                    1 for key in parsed_solution
                    if key in parsed_output and parsed_solution[key] == parsed_output[key]
                )
                rewards.append(matching_keys / len(parsed_solution))
        except Exception:
            # Invalid JSON or malformed records earn no accuracy reward.
            rewards.append(0.0)
    return rewards
```

Training with GRPO

With the dataset and reward functions ready, we now train the model using GRPO with LoRA fine-tuning.

The training experiments were conducted on a server equipped with two NVIDIA RTX 3090 GPUs. Using LoRA reduced VRAM consumption, which let us fine-tune the model efficiently within the GPUs' memory limits.

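```python
import argparse

import wandb
from datasets import load_dataset
from peft import LoraConfig, get_peft_model
from transformers import AutoModelForCausalLM
from trl import GRPOConfig, GRPOTrainer

# make_conversation, format_reward and accuracy_reward are defined in the snippets above.


def train_model(args):
    """Train the model using GRPO."""
    wandb.init(project="grpo")

    dataset = load_dataset("json", data_files=args.dataset, split="train")
    dataset = dataset.map(make_conversation)
    train_test_split = dataset.train_test_split(test_size=0.1)
    train_dataset = train_test_split["train"]
    test_dataset = train_test_split["test"]  # held out; not used by the trainer below

    # args.model_id should point to the SFT checkpoint.
    model = AutoModelForCausalLM.from_pretrained(
        args.model_id,
        torch_dtype="auto",
        device_map="auto",
    )

    lora_config = LoraConfig(
        task_type="CAUSAL_LM",
        r=128,           # r and alpha follow the GRPO hyperparameter table
        lora_alpha=128,
        lora_dropout=0.1,
        target_modules=["q_proj", "v_proj"],
    )
    model = get_peft_model(model, lora_config)
    model.print_trainable_parameters()

    training_args = GRPOConfig(
        output_dir=args.output_dir,
        learning_rate=args.learning_rate,
        weight_decay=0.1,
        warmup_ratio=0.1,
        lr_scheduler_type="cosine",
        optim="paged_adamw_8bit",        # requires bitsandbytes
        max_grad_norm=0.1,
        remove_unused_columns=False,     # keep "conversations" for accuracy_reward
        per_device_train_batch_size=args.batch_size,
        gradient_accumulation_steps=1,
        num_train_epochs=args.epochs,
        bf16=True,
        num_generations=4,               # group size: completions sampled per prompt
        max_prompt_length=2048,          # the system prompt alone exceeds 128 tokens
        max_completion_length=2048,      # room for <think> reasoning plus the JSON answer
        report_to=["wandb"],
        logging_steps=10,
        push_to_hub=True,
        save_strategy="steps",
        save_steps=10,
    )

    trainer = GRPOTrainer(
        model=model,
        reward_funcs=[format_reward, accuracy_reward],
        args=training_args,
        train_dataset=train_dataset,
    )
    trainer.train()
    trainer.save_model(training_args.output_dir)
    trainer.push_to_hub(dataset_name="passport_en_grpo")


if __name__ == "__main__":
    parser = argparse.ArgumentParser(description="GRPO fine-tuning for passport extraction")
    parser.add_argument("--model_id", required=True, help="Path or Hub ID of the SFT model")
    parser.add_argument("--dataset", required=True, help="Path to the JSON training data")
    parser.add_argument("--output_dir", required=True)
    parser.add_argument("--learning_rate", type=float, default=1e-6)
    parser.add_argument("--batch_size", type=int, default=8)
    parser.add_argument("--epochs", type=float, default=1.0)
    train_model(parser.parse_args())
```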

Below are the fine-tuning results for GRPO on a system with two RTX 3090 GPUs:

| Hardware | Num GPUs | Total training time | Time per step |
|---|---|---|---|
| 2x RTX 3090 | 2 | ≈ 38 minutes 24 seconds | ~24.25 s |

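Compared with SFT, GRPO takes considerably longer to train: each step has to generate several completions per prompt (num_generations = 4) and score them with the reward functions before updating the policy.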

For comparison, we estimate the fine-tuning time on a single A100 GPU:

| Hardware | Num GPUs | Total training time | Time per step |
|---|---|---|---|
| 1x A100 | 1 | ≈ 22 minutes | ~13.9 s |

This script:

- Loads and processes the dataset
- Applies LoRA for efficient fine-tuning
- Trains the model using GRPO with the defined reward functions
- Saves and uploads the trained model

We evaluated the models on a dataset of 200 files.

We used LLaMA-Factory to fine-tune the Qwen2.5-1.5B model with Supervised Fine-Tuning (SFT) and LoRA. The fine-tuned model, Qwen2.5-1.5B-SFT-LoRA, significantly outperformed the base instruct model, improving accuracy from 66.58% to 87.62%. In comparison, the larger 14B instruct model achieved only 70% accuracy, suggesting that fine-tuning on targeted tasks yields greater performance gains than simply scaling model size. However, extraction errors persisted in certain structured fields, particularly with Machine Readable Zone (MRZ) data.

Applying GRPO after SFT further improved accuracy to 94.21%, as seen in the Qwen2.5-1.5B-SFT-LoRA-GRPO model. This reinforcement learning approach helped the model handle structured fields more effectively. Notably, fields like MRZ, which previously had errors in the Qwen2.5-1.5B-SFT-LoRA model, were extracted with higher accuracy in the Qwen2.5-1.5B-SFT-LoRA-GRPO variant.

These results highlight the benefit of reinforcement learning in structured data extraction, especially for fields with strict formatting rules like MRZ.

Results of supervised fine-tuning and GRPO post-training

| Model name | Accuracy (%) | Notes |
|---|---|---|
| Qwen2.5-1.5B-Instruct | 66.58 | Base instruct model; struggles with structured fields like MRZ. |
| Qwen2.5-14B-Instruct | 70.00 | Larger model, but only a slight improvement over the 1.5B-Instruct model. |
| Qwen2.5-1.5B-SFT-LoRA | 87.62 | Significant improvement after SFT with LoRA, but MRZ errors persist. |
| Qwen2.5-1.5B-SFT-LoRA-GRPO | 94.21 | Best performance; improved MRZ extraction thanks to GRPO. |


However, despite these improvements, some extraction errors still persist. One possible reason is the quality of the "think" data generated using the free Gemini API, which may not always provide highly accurate or structured reasoning sequences. A potential approach to further enhance performance is to improve the quality of the "think" data by using a more reliable data-generation method or a higher-quality reasoning API.

- GRPO without a cold start is challenging: Starting GRPO training from a base model (without SFT) proved difficult. The model struggled to learn the task and exhibited "overthinking" behavior, exceeding the context length.
- SFT provides a strong foundation: Initializing with an SFT model significantly accelerated learning and improved the effectiveness of GRPO. The model quickly converged and produced usable results.
- LoRA works well: Using LoRA was sufficient to achieve good results on this task, demonstrating its potential for resource-constrained scenarios.
- Reward function design is crucial: The carefully designed reward function played a critical role in shaping the model's behavior, guiding it toward correct solutions and proper formatting.

We fine-tuned a reasoning model for passport data extraction using a two-stage approach:

- SFT for baseline accuracy.
- GRPO for reasoning and structured output.

By designing a structured dataset and a reward-based GRPO system, we improved accuracy, consistency, and reasoning abilities. The GRPO-enhanced model demonstrated better handling of structured fields, particularly MRZ, compared to SFT alone.

However, some fields still contain extraction errors, even after GRPO fine-tuning. To further enhance performance, we need to refine the reward function and explore more advanced optimization techniques.