Modernizing Our Search Stack

Neighbors all over the world turn to Nextdoor and look for local information through explicit queries in the search bar. Neighbors use the search bar to connect with other neighbors, find business recommendations, information about local non-profits, and find out what is happening around them.

Sometimes the intent of our users is very clear. When neighbors search for “plumber recommendations” we know they want to find out what experiences neighbors have had with plumbing companies nearby.

However, when neighbors search for “home decor,” neighbors may think about hiring a company that specializes in home decor or they may be looking for ideas about home decoration.

Along the same lines, when someone searches for “barbecue” they could be looking to buy a barbecue grill from the neighbors, or they could be trying to find a nearby restaurant that serves barbecue.

We use these examples to portray the complexity of the intent our neighbors express. The Nextdoor Search Team is a small team that tackles serving neighbors with local and accurate search results. This work is at the intersection of Information Retrieval, Machine Learning, building scalable infrastructure, and classical software engineering.

Query Understanding Engine

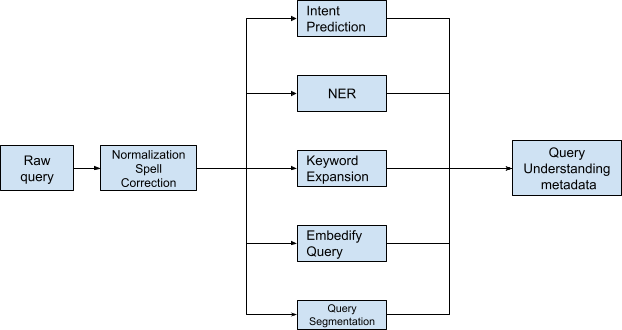

There are many dimensions that involve interpreting user intent and subsequently returning the content matching that intent. To help us understand our neighbors’ queries better, we built the Query Understanding engine. The Query Understanding is the stage in the search flow where given the input query, we extract high-level features from the neighbor’s intent.

The key element to understanding our neighbors is world-class query understanding. By running a series of A/B tests, we managed to showcase how important investment is in this stage of the search pipeline. For example, by being better at query categorization we showed a lift on many fronts: revenue from partnerships got accelerated, increased recall precision generated more targeted structured queries, and subsequently search results became better.

Every query term conducted by our neighbors has a certain distribution of the topics associated with it and the aggregated probability distribution over topics can give us a query-to-vertical association. For example, for a popular query like `car` we can see that we are more confident that the car is associated with the business vertical compared to users and posts.

For that purpose, we built an extensible Query Understanding pipeline that allows us to extract metadata from the neighbor’s intent. As of now, our query understanding stage includes query cleanup, normalization, and spell correction sequenced by the fan-out stage where we invoke many models in order to prepare a query for the recall stage. The fan-out stage invokes inferences of several models like Intent Prediction, Named Entity Recognition (NER), and Keyword Expansion with tight model inference service level agreements (SLAs).

Query Understanding Engine

In this diagram, we omitted some of the models that are irrelevant for the Query Understanding stage (ie. embedifying the query). In the following sections, we will describe each model in detail.

Query Expansion

Search results are highly determined based on the currently available inventory. If a neighbor searches for ‘sofa’ and we do not have search results available at a given time, it makes sense to show results for the expanded or replacement inventory ie. ‘ottoman’ or ‘couch’. In this case, the best way to provide close search results is by utilizing representation learning. Representation learning is the application of Deep Learning where the representation of the entities is learned through the data. When neighbors use the search and the neighbor calls a local business as a result, that information can be used to connect the queries used to find the business. In other words, the queries at hand share the same context. In the embeddings space, queries that share the same context are close to each other compared to queries that do not share the same context. Thus, the query ‘sofa’ will be close to the query ‘ottoman’ in the embedding space.

Inspired by GloVe, word2vec, and other related work, we designed the following query2vec model with our neighbor search behavior data. Instead of using a word in the query as the token or using the query itself as the token, we decided to use them both to get the query embedding representation.

In the Query Understanding fan-out stage, given that normalized query, we would run the K-Nearest retrieval in the embeddings space. Responses would be added as the candidates for the recall.

Spell Correction

At Nextdoor, there have been many attempts to solve spell correction. These approaches range from simple word edit distance and statistical approach to using HMM.

After running lots of experiments, we decided to go with the Deep Learning Approach utilizing sequence to sequence architecture with Attention.

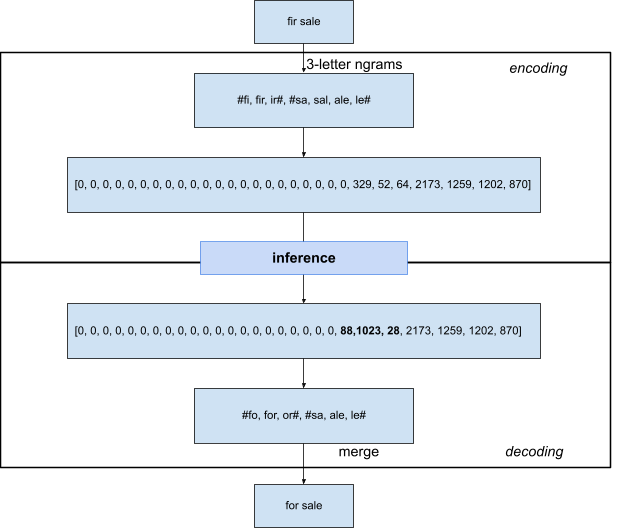

The crux of the algo lies in how we preprocess the query. The spell correction flow in an initial stage would take a raw query and we would split it into letter-trigrams. This allows us to control the dimensionality of the input space and have the ability to spell correct words that are out of vocabulary (OOV). At the same time, we are reducing the dimensionality of the input space by reducing the space from 100m to 50k word space. Additionally, we capture sub-word semantics (ie. prefix and suffix are close to each other in embeddings space) and the number of potential collisions is quite small (0.0036%).

Once we encode the raw query, we run the inference and get the spell-corrected tri-letter representation of the word. The last step in the inference included decoding the tri-letter n-gram back to spell correction suggestion. The diagram below depicts the whole flow for the misspelled word fir sale.

Spell Correction Encoder-Decoder Flow

To get the training data set for this model we used the sequence of queries entered by neighbors while conducting the searches across the platforms. Query sequences within the same session would be considered for the candidate set. We used these queries and associated transition probabilities to create final training, test, and validation sets. The core of the architecture that we used represents a version of the Sequence-to-Sequence Architecture with Attention.

We were playing with multiple stacked LSTMs and figuring out how to balance the trade-off between model accuracy and latencies constraints at hand. In our case, a simpler architecture proved to be better and landed with LSTM with an Attention layer stacked on top of it.

Intent Prediction

Intent prediction is a very important piece of the search ecosystem. It impacts recall, precision, and revenue. The model went through many iterations until we got it right. Initial versions of the model relied on the neighbor feedback through clicks. The basic idea was to leverage the bipartite graph between the queries and documents the neighbor clicked on.

For example, if someone searches for ‘lawn and garden maintenance’ followed by clicking on the search result from the Home and Garden topic, we would consider <lawn and garden maintenance, Home and Garden> as positive samples for associating the query with the topic at hand. However, we faced many challenges with this approach as challenges ranged from incomplete taxonomy all the way to the lack of, or inaccurate, labels for the documents.

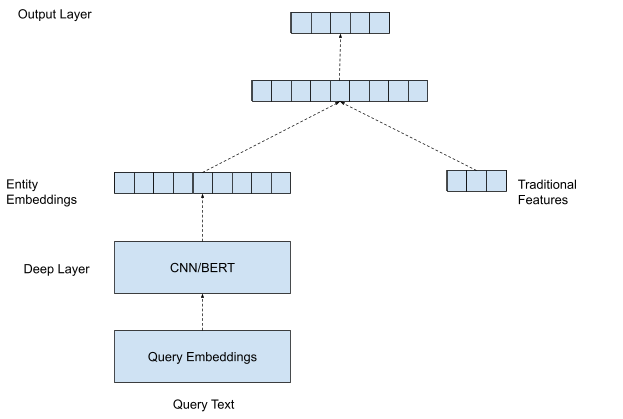

After some iterations, we decided to acquire a manually labeled dataset and run a different model architecture. We landed on the deep and wide architecture depicted below.

Intent Prediction Architecture

The architecture represents a scalable character-level deep model that is capable of predicting the intent of the complete and incomplete queries. This is quite useful for both autocomplete and SERP pages. The initial query is embedified (the process of generating an embedded version of the entity) and passed through the multiple deep layers where it gets merged with wide features. The output layer predicts the class of the input, in this case the topic of the query.

Compared to the previous version, the model above showcases more accurate results in the offline evaluation. Since we used manually labeled data, we were able to debias training corpus. Using CNNs in the architecture described above was the right choice for our use case considering we had to make between model capacity and inference latencies.

An important aspect of search is the ephemeral intent signal we get from the search. Such a signal has application across the board. Besides having the capability of mapping query to the topic and using it for the search, an output of intent prediction model can be used for short-term intent prediction for users.

Natural Language Recognition

Another important piece of query understanding involves identifying the different types of entities, such as people, places, or businesses, that can be present in queries. For this, we’ve developed a custom named entity recognition (NER) model that runs on our queries.

While there are several open-source solutions for NER available, we found that we would need to create a custom model for various reasons. The primary reason is that most existing NER models were trained on sentence-level content, and did not perform well when we evaluated them on search queries, which are much shorter. Another reason is that we wanted to introduce some Nextdoor-specific entity types, such as SERVICE (e.g. “handyman” or “plumber”).

We algorithmically collected the training data for this model based on search logs, which allowed us to iterate much faster compared to manual data labeling. Entities were assigned for queries based on the search results that users interacted with. For example, if the majority of the clicks for the query “John Doe” were on a user profile result, then we can determine that the query contains a PERSON entity. From there, we applied some rule-based data cleanup and augmentation based on common query patterns.

The model itself uses a transformer-based architecture with a token classification head on top. To leverage transfer learning, we trained several different versions of the model using different base transformers, including cased and uncased variants of BERT, DistilBERT, and RoBERTa. Ultimately, we selected a variant using uncased DistilBERT, since it provided the best tradeoff between inference latency and model performance.

Since we’ve deployed this model, we’ve used it to track the different entities Nextdoor users are searching for, giving us better insight into search demand. We’ve also started to explore using the detected entities for better relevance (most importantly for location understanding), and are actively working on further iterations of the model to refine that.

Conclusion

The Nextdoor Search Team is always striving to get the best app experience for our neighbors. Query understanding plays an important role in the Search funnel. Given the complexity of our platform, there are many trade-offs and places for improvement within our search stack. Some of the challenges that we have ahead of us lay in the realm of search infrastructure, model performance tracking over time, transformer interference latencies, and model time to market. If you are passionate about solving problems in the search space, please visit our careers page and apply to join our team.

Thank you to the whole Search team including engineering, data science, design, product, and NOPs for the continued hard work that helps better our search.

Authors: Bojan Babic, Tong Liu