Building Local LM Desktop Applications with Tauri

A guide to combining the llama.cpp runtime with a familiar desktop application framework to build great products with local models faster

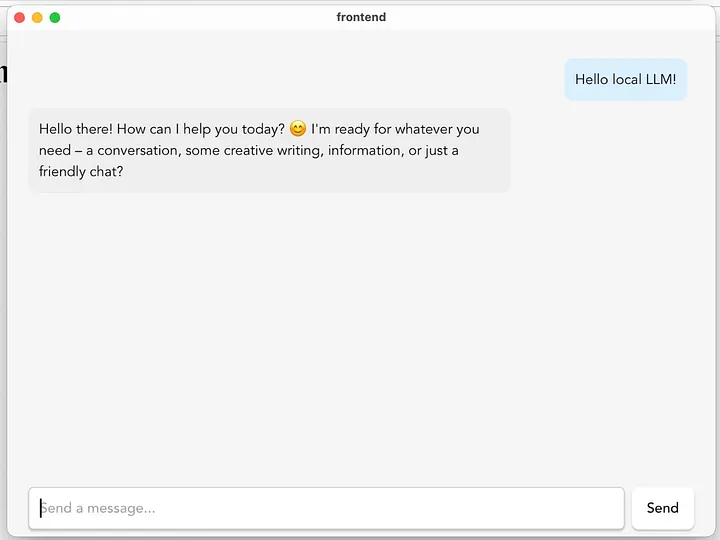

Local LM application we are building with Tauri and llama.cpp

The world of local LMs is incredibly exciting. I'm constantly impressed by how much capability is now packed into smaller models (around 1B–7B parameters), and I'd argue it's becoming increasingly possible to use these lighter alternatives for some of the basic tasks I typically rely on models like Claude or GPT-4o for, such as drafting emails, learning basic concepts and summarising documents.

Several key tools in the current landscape, such as Ollama, LM Studio, and Jan, make it easier for people to experiment and interact with local models. As an engineer, I really admire these tools and how much they've enabled me to explore my own curiosity. That said, I can't help but feel that their developer-oriented workflows and design may limit broader accessibility, preventing non-developers and everyday users from fully experiencing the power of local models.

Beyond that, I’ve noticed a bit of a gap between the ability to run local models and the creation of truly polished, user-friendly applications built on top of them.

Realising this led to a fun little Sunday afternoon hacking project: bundling a local language model and an appropriate inference runtime into a compact desktop...