Why AI Needs a Face: Building Dew, My Duolingo-Inspired AI Character

A hands-on breakdown of designing, animating, and syncing voice for an AI assistant using OpenAI, Rive, and a lot of trial and error

We keep talking about how AI will change the world, but most AI apps look like futuristic marbles floating in space: polished, perfect, and honestly a bit soulless. The tech gets smarter, but the personality never does.

Why do we settle for talking to something that looks like a screensaver?

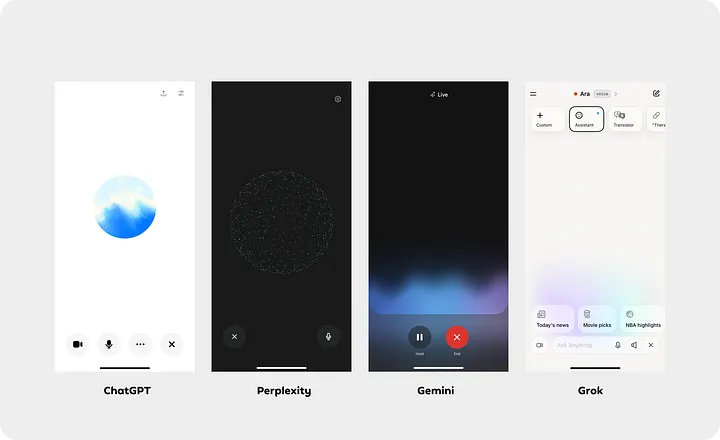

I’ve always liked the look of these abstract orbs in apps like ChatGPT and Perplexity. They’re clean, modern, and safe. But here’s the thing: they don’t feel personal.

When we interact with characters in games or apps, we remember their quirks, their faces, and even their jokes. That emotional spark is missing from most AI experiences. I realised that if AI is going to be part of our daily lives, it needs to do more than just answer questions — it needs to feel alive.

That’s how Dew was born.

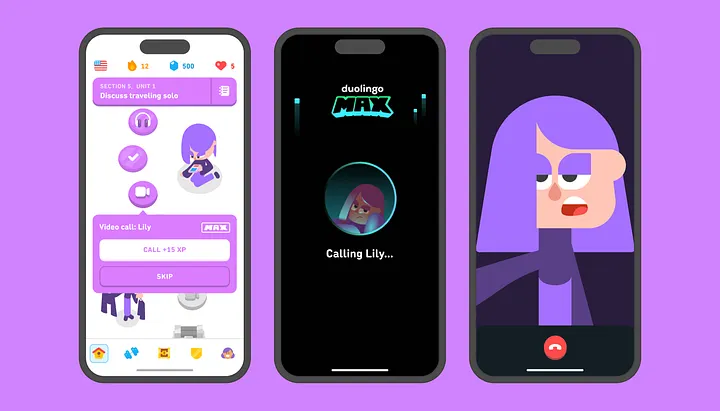

I was inspired by characters like Lily from Duolingo – sarcastic, expressive, and fun. I wanted to create something similar: an AI assistant that feels alive, reacts naturally, and makes you smile.

Duolingo’s Lily

Deep Philosophy Behind It

One thing I realised early on: we need to be deeply invested in the philosophy of the character. It’s not just about making something cute; it’s about making something emotionally resonant.

A character that listens, reacts, and connects on a deeper level can completely change how people interact with technology.

That’s the goal. Not just a talking avatar, but something that reshapes how we use AI in our everyday lives.

Most AI interfaces today have settled on a visual language that’s instantly recognisable: abstract orbs, smooth gradients, and playful animations. ChatGPT and Perplexity use orbs that pulse or shimmer, Gemini and Grok go with gradient waves, and Perplexity even lets you “touch” the AI, making the orb react with a dynamic particle system. Honestly, these are great design choices.

How most AI apps look today

For a lot of users, especially those new to AI, these cues are helpful. They make the technology feel alive and interactive, not just a wall of text. But at the end of the day, these elements are still just signals that “something is happening.” They look great, but they’re not personal. They don’t invite you to build a relationship or talk to the AI as if it’s anything more than a smart system behind the glass.

The Process: It All Started with a Sketch

Before touching any code or animation tools, I sketched Dew on paper, using Duolingo’s character design guide as a reference. I wanted him to look simple but friendly, with something familiar about him. As I drew, I imagined how he would move, how his eyes would blink, and how he’d react when someone talks to him.

Once I was happy with the sketch, I moved to Figma to clean things up. There, I turned Dew into precise vector shapes. Every part (eyes, mouth, and eyebrows) had its own layer. That way, I could animate each one later without any mess.

Dew’s journey: from early pencil sketches, to a vector-based character, to a fully animated avatar.

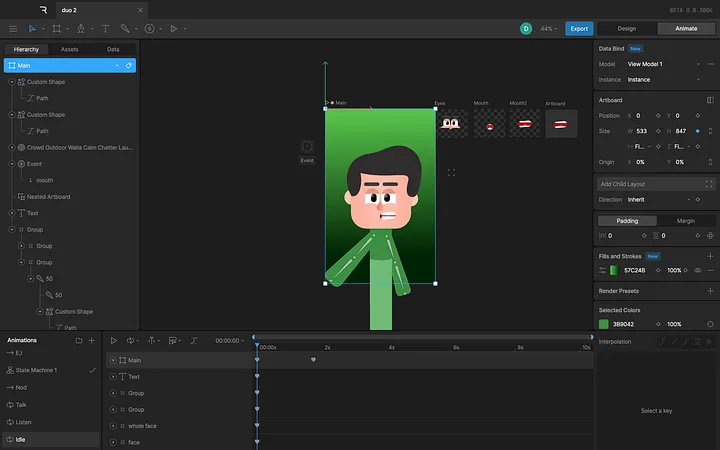

Bringing Dew to Life in Rive

Next, I brought all the pieces into Rive. If you haven’t used Rive, it’s a tool made for real-time, interactive animations, and it’s a game-changer.

I split things up into different artboards:

• Main body: This had subtle breathing animations to make him feel alive.

• Eyes: I used ping-pong loops for blinking, with smooth easing to make it look natural.

• Mouth: This was the tricky part. I built a full set of mouth shapes (visemes) based on viseme charts, each covering a group of similar sounds. These were the key to syncing his lips with spoken words.

Originally, I tried connecting the nested artboards (like the eyes and mouth) to the main artboard using separate state machines. But I ran into latency issues when syncing input across them. So I redesigned it with a layered approach. I created one unified state machine inside the main artboard that controlled the eyes, mouth, and body together. This way, everything responds in sync without delays. I used this article as a reference.
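As a rough illustration of the single-state-machine idea, the Swift sketch below writes the eye, mouth, and body values through one object in one call, so they cannot drift apart. `AvatarInputs`, `DewFrame`, `DewController`, and the input names "mouth", "blink", and "breath" are all hypothetical; in a real app the sink would forward the values to the state machine inputs exposed by the Rive runtime.

```swift
// Hypothetical sink for state machine inputs; a real app would forward
// these values to the animation runtime instead of storing them.
protocol AvatarInputs: AnyObject {
    func setNumber(_ name: String, _ value: Double)
}

// One snapshot of everything the character shows at a given moment.
struct DewFrame {
    var viseme: Int      // index of the mouth shape
    var blink: Bool      // true while the eyes are closed
    var breath: Double   // 0...1 position in the breathing loop
}

// Single entry point: eyes, mouth, and body are written in the same call.
final class DewController {
    private let inputs: AvatarInputs
    init(inputs: AvatarInputs) { self.inputs = inputs }

    func apply(_ frame: DewFrame) {
        inputs.setNumber("mouth", Double(frame.viseme))
        inputs.setNumber("blink", frame.blink ? 1 : 0)
        // Clamp so an out-of-range value cannot push the loop past its ends.
        inputs.setNumber("breath", min(max(frame.breath, 0), 1))
    }
}

// In-memory sink, handy for checking behaviour without any animation runtime.
final class RecordingInputs: AvatarInputs {
    private(set) var values: [String: Double] = [:]
    func setNumber(_ name: String, _ value: Double) { values[name] = value }
}
```

Keeping every input behind one `apply` call mirrors the move from several nested state machines to one: there is a single place where a frame is committed.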

Animating Dew in Rive: every blink, nod, and smile is built up from individual layers and state machines.

Making Him Talk and Listen

To make Dew talk and listen, I set up a simple pipeline using Swift:

1. Recording voice using AVFoundation

2. Sending audio to OpenAI Whisper for transcription

3. Using ChatGPT to generate a response

4. Turning that response into speech with OpenAI’s text-to-speech, using the “echo” voice
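The four steps above can be sketched as a small pipeline type. This is a minimal sketch, not the app’s actual code: `VoicePipeline` and its closure names are hypothetical, and each closure stands in for a real recorder or network call so the flow can be tried with stubs.

```swift
import Foundation

// Each stage is injected as a closure so the flow can be exercised with stubs.
// In a real app these would wrap the transcription request, the chat request,
// and the text-to-speech request.
struct VoicePipeline {
    var transcribe: (Data) throws -> String   // recorded audio -> user text
    var respond: (String) throws -> String    // user text -> reply text
    var synthesize: (String) throws -> Data   // reply text -> spoken audio

    // Runs one full turn and returns both the reply text and its audio.
    func runTurn(recording: Data) throws -> (reply: String, speech: Data) {
        let userText = try transcribe(recording)
        let reply = try respond(userText)
        let speech = try synthesize(reply)
        return (reply, speech)
    }
}
```

Returning the reply text together with the audio is deliberate: the text is what a letter-based lip-sync mapping needs as input.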

But I didn’t stop there. The most important part of realism was lip sync. I built a simple viseme system, a way to map groups of letters and sounds to specific mouth shapes. Instead of trying to animate every single word, I grouped common sounds and assigned each group a matching shape. For example, “CH” and “SH” share one mouth shape, “TH” gets its own, and vowels like “O,” “A,” and “E” each have their own. Because the mapping works on spelling rather than true phonemes, it is an approximation. Dew’s mouth moves in sync with what he’s saying, thanks to this mapping function:

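private func generateVisemes(from reply: String) -> [Int] {
    let upper = reply.uppercased()
    // Two-letter sounds are matched first so "TH" is not read as "T" then "H".
    let multi: [String: Int] = ["TH": 10, "CH": 6, "SH": 6]
    let single: [Character: Int] = [
        "O": 1, "E": 2, "I": 2, "A": 0, "U": 11,
        "L": 3, "B": 4, "M": 4, "P": 4,
        "F": 5, "V": 5, "J": 6,
        "R": 7, "Q": 8, "W": 8,
        "C": 9, "D": 9, "G": 9, "K": 9, "N": 9,
        "S": 9, "T": 9, "X": 9, "Y": 9, "Z": 9
    ]
    // The original listing ends at the table above; this loop is an assumed completion.
    var visemes: [Int] = []
    let chars = Array(upper)
    var i = 0
    while i < chars.count {
        if i + 1 < chars.count, let pair = multi[String(chars[i...i + 1])] {
            visemes.append(pair)
            i += 2
        } else {
            // Unmapped characters (spaces, punctuation, "H") produce no viseme.
            if let one = single[chars[i]] { visemes.append(one) }
            i += 1
        }
    }
    return visemes
}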

It’s not Pixar-level perfection, but it’s fast, responsive, and way more engaging than static mouth flaps.
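A list of viseme indices still has to be played back in time with the audio. The article does not say how Dew does this, so the sketch below makes the simplest possible assumption: spread the visemes evenly over the clip’s duration. `VisemeCue` and `scheduleVisemes` are hypothetical names.

```swift
import Foundation

// One mouth shape and the moment (in seconds from the start) it should appear.
struct VisemeCue: Equatable {
    let viseme: Int
    let time: TimeInterval
}

// Spreads the visemes evenly across the clip. This is an assumption made for
// illustration; it ignores pauses and the real length of each sound.
func scheduleVisemes(_ visemes: [Int], over duration: TimeInterval) -> [VisemeCue] {
    guard !visemes.isEmpty, duration > 0 else { return [] }
    let step = duration / Double(visemes.count)
    return visemes.enumerated().map { index, viseme in
        VisemeCue(viseme: viseme, time: Double(index) * step)
    }
}
```

For example, four visemes over a two-second clip land at 0.0, 0.5, 1.0, and 1.5 seconds. Even spacing drifts on long replies with pauses, which is one reason a mapping like this stays approximate.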

A major headache was getting live, uninterrupted conversation working, so that Dew could talk and listen naturally without the user needing to tap a button. Constant feedback loops made this almost impossible: the mic picked up Dew’s own voice, so he ended up listening to himself and creating annoying echoes. To solve this, I had to compromise and switch to a tap-to-talk system. Now the mic only records while you hold the button, and Dew replies only after you finish. It sounds obvious, but this change completely transformed the experience: no more awkward overlaps, just smooth turn-taking.
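The turn-taking rule can be written down as a tiny state machine. This is a sketch of the idea rather than Dew’s actual code; `TurnState`, `TurnEvent`, `nextState`, and `micIsOpen` are hypothetical names.

```swift
// Conversation phases for a hold-to-talk flow.
enum TurnState: Equatable {
    case idle        // waiting for the user
    case recording   // button held, mic open
    case thinking    // transcribing and generating a reply
    case speaking    // playing the reply, mic closed
}

enum TurnEvent {
    case buttonDown, buttonUp, replyReady, playbackFinished
}

// Returns the next state. Events that make no sense in the current state are
// ignored, so a press while the reply is playing never opens the mic.
func nextState(from state: TurnState, on event: TurnEvent) -> TurnState {
    switch (state, event) {
    case (.idle, .buttonDown):           return .recording
    case (.recording, .buttonUp):        return .thinking
    case (.thinking, .replyReady):       return .speaking
    case (.speaking, .playbackFinished): return .idle
    default:                             return state
    }
}

// The microphone is live in exactly one state.
func micIsOpen(in state: TurnState) -> Bool { state == .recording }
```

Because the mic is open only in `recording`, and `recording` can only be entered from `idle`, the assistant can never hear its own playback.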

Dew vs. Lily: What I Learned

I want to be clear — Dew is nowhere near the level of Duolingo’s characters like Lily. Lily is a fully realised product, and the Duolingo team nailed her personality, emotions, and expressiveness. What I built with Dew is fundamentally different: an AI assistant, powered by OpenAI’s voice and language models, with a character layered on top.

I’m not trying to make a direct comparison. Lily is the result of creative work and tight execution, with a whole team behind her. Dew is me following my own rules, making the most of whatever technology I had access to, and being driven by a desire to build something people could connect with.

When I tested Dew with five users, the response surprised me. I saw real smiles and people talking to Dew as if he were a person, not just an information machine. That’s when it clicked for me: people don’t want to talk to a cold, transactional bot. They light up when the experience feels personal, even if it’s simple. That single moment, a user grinning at Dew’s response, was a breakthrough. It showed me that this approach has legs, and that even a small project, built with heart and a bit of personality, can make people want to talk.

Looking Forward

Right now, Dew’s expressions are tied to direct user inputs. He doesn’t decide what to feel on his own yet. That’s something I want to change.

In the future, I want Dew to interpret the meaning of what is said. If a sentence sounds exciting, maybe he leans forward. If something’s confusing, maybe he frowns or tilts his head. To get there, I’ll need to build a kind of emotional “brain” that understands language not just semantically, but emotionally.

Imagine mapping strings to emotional weights – joy, surprise, confusion – and assigning animations based on that. It’s a challenge, but it’s where I believe AI characters need to go.
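To make that idea concrete, here is a deliberately naive sketch: a hand-written keyword list per emotion, normalised into weights, with the strongest emotion choosing an animation. Everything in it is hypothetical, including the word lists and the animation names; a real emotional “brain” would need a proper model rather than keyword matching.

```swift
enum Emotion {
    case joy, surprise, confusion
}

// Tiny hand-written lexicon, purely illustrative.
let emotionLexicon: [Emotion: Set<String>] = [
    .joy: ["great", "love", "awesome", "yay"],
    .surprise: ["wow", "really", "whoa", "unexpected"],
    .confusion: ["confused", "unclear", "huh", "why"]
]

// Counts lexicon hits per emotion and normalises them into weights that sum to 1.
// Returns an empty dictionary when no word matches.
func emotionWeights(for text: String) -> [Emotion: Double] {
    let words = text.lowercased()
        .split(whereSeparator: { !$0.isLetter })
        .map(String.init)
    var counts: [Emotion: Double] = [:]
    for word in words {
        for (emotion, vocabulary) in emotionLexicon where vocabulary.contains(word) {
            counts[emotion, default: 0] += 1
        }
    }
    let total = counts.values.reduce(0, +)
    return total > 0 ? counts.mapValues { $0 / total } : [:]
}

// Picks an animation name for the strongest emotion, or a neutral idle.
func animation(for text: String) -> String {
    let weights = emotionWeights(for: text)
    guard let top = weights.max(by: { $0.value < $1.value }) else { return "idle" }
    switch top.key {
    case .joy: return "leanForward"
    case .surprise: return "eyebrowsUp"
    case .confusion: return "headTilt"
    }
}
```

Keeping the weights separate from the animation choice leaves room to blend expressions later instead of always picking a single winner.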

And if we get that right? Then we’re not just designing assistants. We’re creating companions.

What I Learned

This whole project taught me a lot. Here are a few things I’ll take with me:

• Start small and build modularly

• Name your layers and states clearly (you’ll thank yourself later)

• Subtle animations go a long way

• It’s okay to break things while learning; just keep iterating

Dew’s not just a project. He’s an example of how AI can feel warm, funny, and engaging. Not just useful, but likeable. And I’m excited to keep exploring where this can go next.

If you’re building something similar or just want to geek out about Rive, AI, or animation, let’s connect. I’d love to hear your story. https://devadhathan.com/