Why we built TLDW

I’m excited to launch a side project I’ve been building with two friends: TLDW (Too long; didn’t watch), a tool that helps people learn from long YouTube videos.

TLDW was inspired by my own pain points when learning from YouTube.

Over the past year, I’ve noticed a shift in my content consumption habits: I’ve been spending more and more hours watching long YouTube videos to learn, including video podcasts, founder interviews, lectures, and tutorials. Many of them run over an hour and are packed with high-value information.

I’ve also started listening to fewer audio podcasts and watching more of their video versions on YouTube, for two reasons:

1. Many of them are very dense in information, and I get distracted easily when listening to audio alone. I can focus better when watching the video version.

2. Many of these shows contain visual information like product demos, screen shares, and slides. Even the speaker’s energy and body language help me digest the content better.

Apparently, many other people feel the same, because YouTube is now the biggest podcasting service in the US. Yep, YouTube, not Apple Podcasts or Spotify.

When I recommended that my followers do the same, I noticed that many people didn’t have the attention span to sit through these hour-long videos. Instead, they toss the link to an AI tool (say Gemini or NotebookLM) and have it generate a text summary. This approach has two problems:

1. Converting video (a high-bandwidth format) into text (a low-bandwidth format) loses a lot. You lose all the rich visual information (demos, slides, speakers’ emotional cues). You also lose many of the details that made the video worth consuming in the first place: the anecdote or example that hits you in the gut, the turn of phrase that makes a line memorable... Often, it’s not the gist of a video that we remember, but the little details.

2. Text summaries are generic (unless you put in an extremely detailed prompt specifying what you’re looking for, which 99% of people don’t do). Your summary and my summary will look the same. But given the same hour-long video, we will obviously care about different things. An AI researcher watching a talk by Sam Altman might pay attention to parts about model training, whereas a product manager might be more interested in how models are being productized. Summaries should be personalized.

So here’s the dilemma: How do we allow people to consume long-form videos efficiently, while also preserving the fidelity of the original content AND making it a personalized experience?

We think the answer lies in a new format: highlight reels.

In the past, AI has mostly been used for “compression”: turning a 1-hour video into a 1-paragraph summary. But what if we used it for “filtering” or “curation” instead? Pick out the 5 minutes I should pay attention to, and give me the original clips without watering them down.

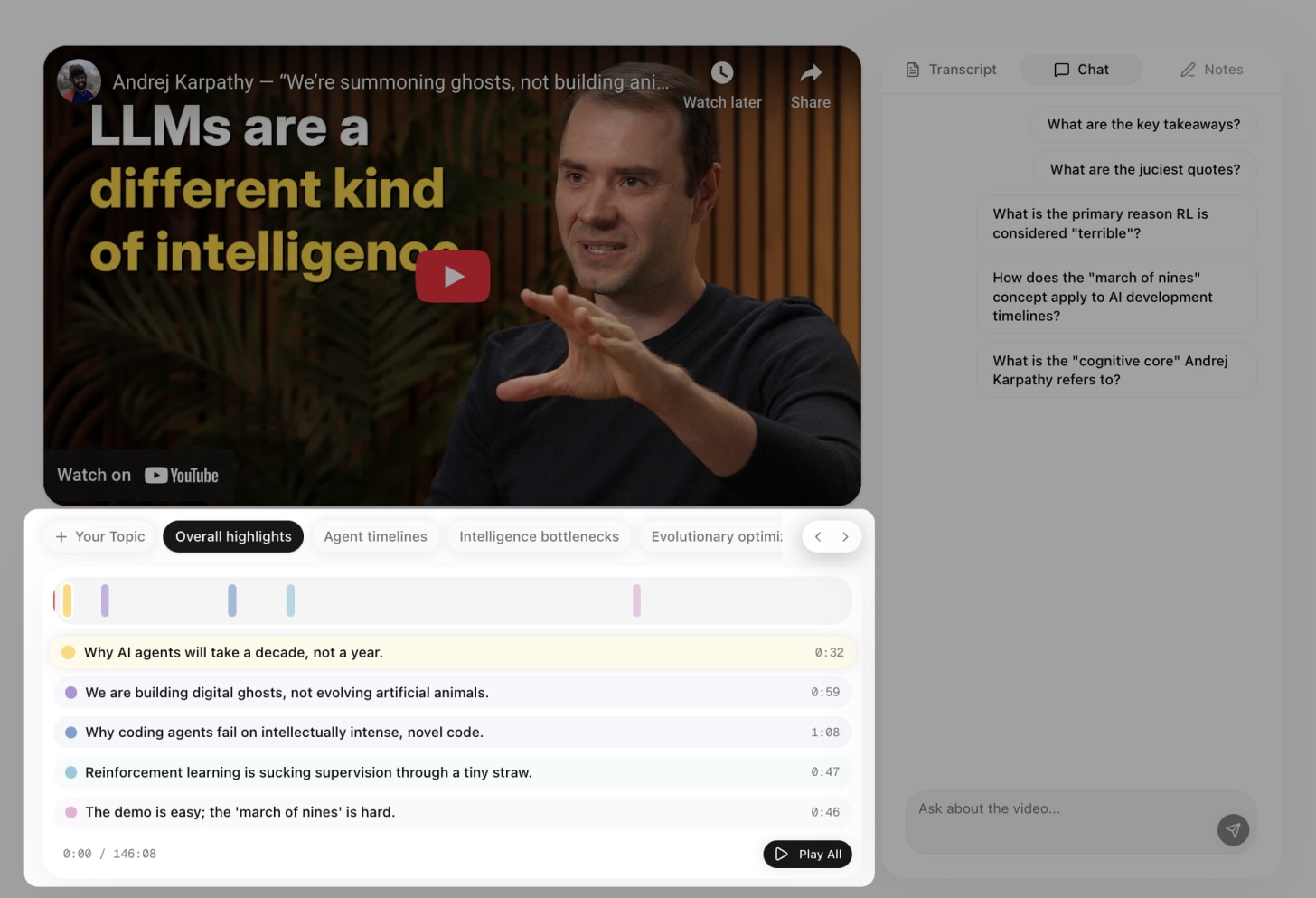

This is what gave rise to TLDW’s key feature: personalized highlight reels. We run the video transcript through an LLM, which identifies the most high-signal parts of the video. These “highlight reels” are shown as colored segments on the progress bar, and clicking one takes you directly to the corresponding timestamp. The reels can also be personalized, because different viewers care about different things: if you work in education, you could type in “future of education”, and the model will highlight every part of the video that discusses education.
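To make the progress-bar idea concrete, here is a minimal sketch of the rendering step only, assuming the LLM has already returned highlights as start/end times in seconds. The `Highlight` type, `progress_bar_segments` function, and color palette are hypothetical illustrations, not TLDW’s actual code; the only real external detail is YouTube’s `t` URL parameter, which starts playback at a given second.

```python
from dataclasses import dataclass

# Hypothetical shape of one highlight returned by the LLM (not TLDW's real schema).
@dataclass
class Highlight:
    start_s: float  # segment start, in seconds
    end_s: float    # segment end, in seconds
    topic: str      # what the segment covers, e.g. "future of education"

# Hypothetical palette; highlights cycle through these colors in time order.
PALETTE = ["#f59e0b", "#10b981", "#3b82f6", "#ec4899"]

def progress_bar_segments(video_id: str, duration_s: float,
                          highlights: list[Highlight]) -> list[dict]:
    """Map each highlight to a colored span on the progress bar plus a deep link."""
    segments = []
    for i, h in enumerate(sorted(highlights, key=lambda x: x.start_s)):
        # Clamp to the video's bounds in case the model returns out-of-range times.
        start = max(0.0, min(h.start_s, duration_s))
        end = max(start, min(h.end_s, duration_s))
        segments.append({
            "topic": h.topic,
            "left": start / duration_s,           # fraction of the bar before the span
            "width": (end - start) / duration_s,  # fraction of the bar the span covers
            "color": PALETTE[i % len(PALETTE)],
            # YouTube's `t` parameter starts playback at this second.
            "url": f"https://www.youtube.com/watch?v={video_id}&t={int(start)}s",
        })
    return segments
```

For a one-hour video, a highlight from 15:00 to 18:00 maps to a span that starts 25% of the way along the bar, covers 5% of it, and links to `&t=900s`. Keeping the model’s output as plain timestamps, rather than cut clips, is what lets the viewer jump back into the original, unedited video.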


We believe “personalized highlight reels” are a better solution than a “generic text summary”. Since launch, many users have told us they really like this interface and that this is the tool they’ve been waiting for.

We also built a few features that address my own pain points when watching technical videos to learn AI:

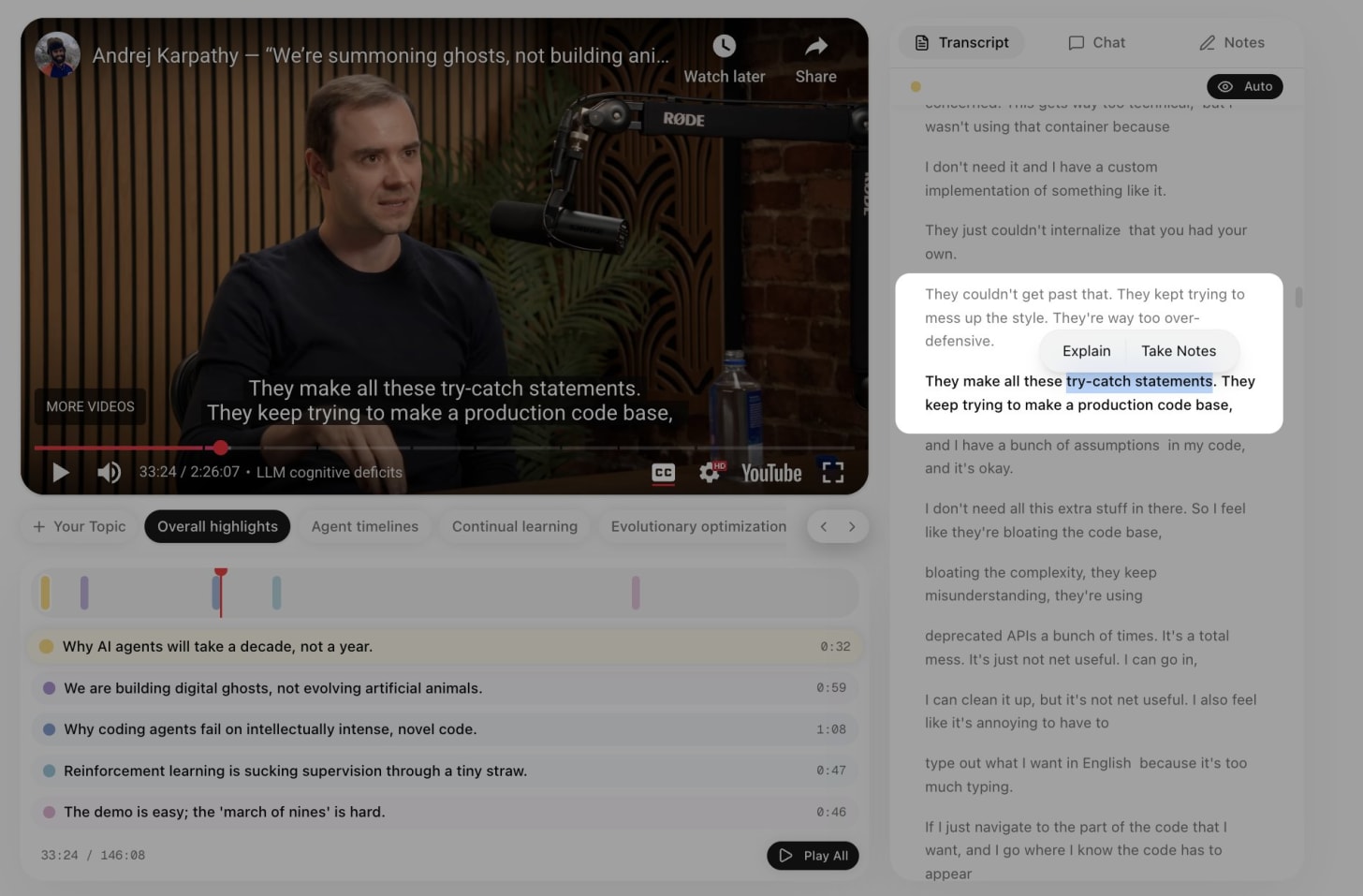

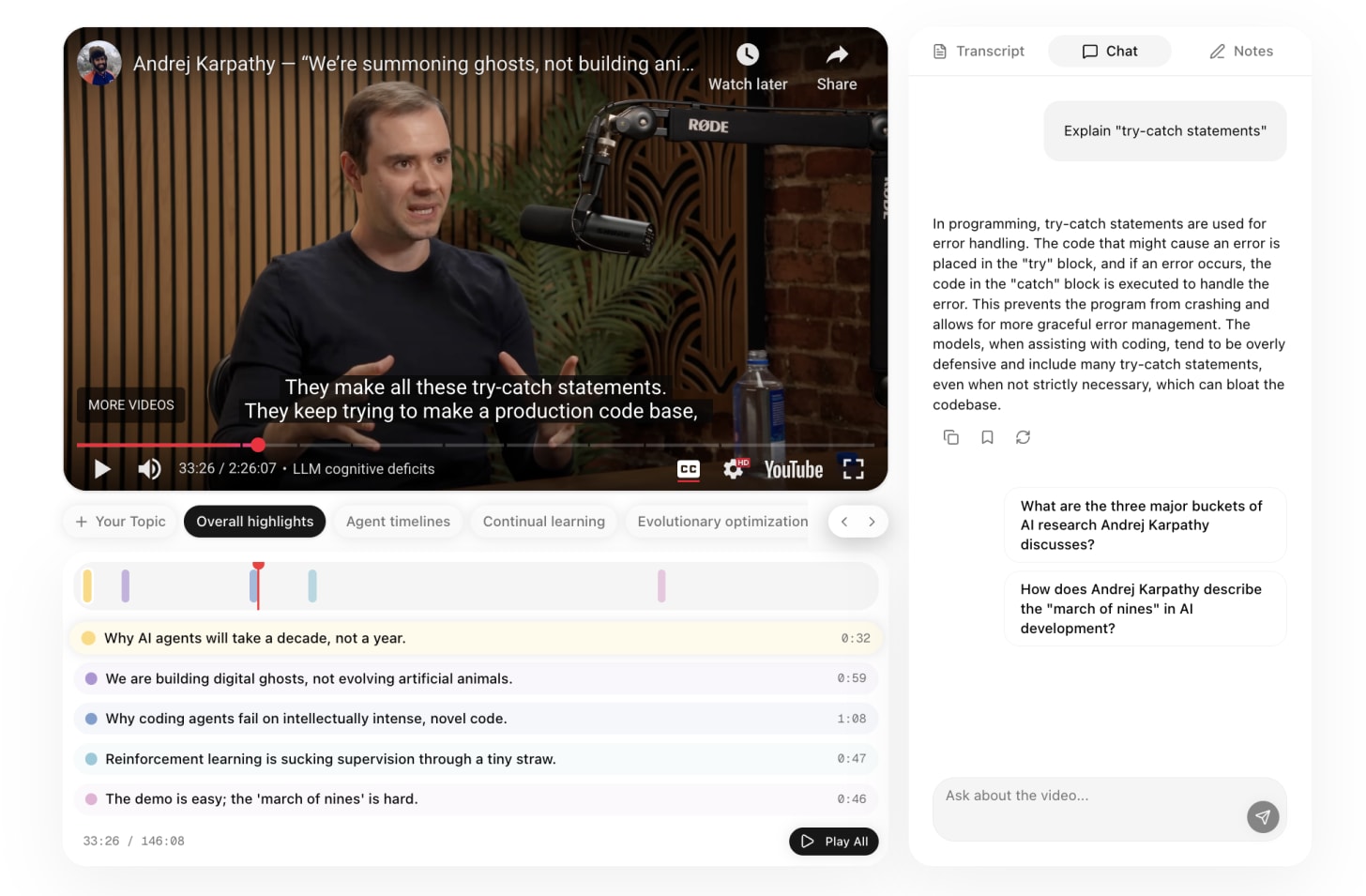

1. When you come across a term or piece of jargon you don’t understand, you can select it in the transcript and click “Explain”, and the AI will explain it for you in CONTEXT. The key here is “in context”. I used to type these terms into ChatGPT manually, but ChatGPT didn’t know what I was watching, and the meaning of a word or phrase changes with context. Because our AI has the whole video transcript in its context window, it can give a context-specific explanation in one click.
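The “in context” part mostly comes down to what goes into the prompt. Below is a minimal sketch, assuming a chat-style API that accepts a list of `{"role", "content"}` messages (a common format, but adapt it to your provider). The helper names, the 200-character excerpt radius, and the prompt wording are all hypothetical; TLDW’s real prompt is not described in this post.

```python
# Hypothetical helpers; not TLDW's actual prompt or model call.
def excerpt_around(transcript: str, selected: str, radius: int = 200) -> str:
    """Return the transcript text around the first (case-insensitive) match of `selected`."""
    i = transcript.lower().find(selected.lower())
    if i == -1:
        return ""
    return transcript[max(0, i - radius): i + len(selected) + radius]

def build_explain_messages(transcript: str, selected: str) -> list[dict[str, str]]:
    """Build chat-style messages asking a model to explain `selected` as used in this video."""
    system = (
        "You explain terms from a video the user is watching. "
        "Explain the selected text as it is used in this video, not in general."
    )
    user = (
        # The full transcript gives the model the whole video as context...
        f"Full transcript:\n<transcript>\n{transcript}\n</transcript>\n\n"
        # ...and the local excerpt points it at the specific usage the viewer selected.
        f"Passage where it appears:\n{excerpt_around(transcript, selected)}\n\n"
        f'Selected text: "{selected}"\n'
        "Explain what this means in the context of this video, in a few sentences."
    )
    return [{"role": "system", "content": system}, {"role": "user", "content": user}]
```

Including both the full transcript and the local excerpt is a design choice: the transcript supplies the video’s overall meaning, while the excerpt disambiguates which occurrence the viewer selected.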


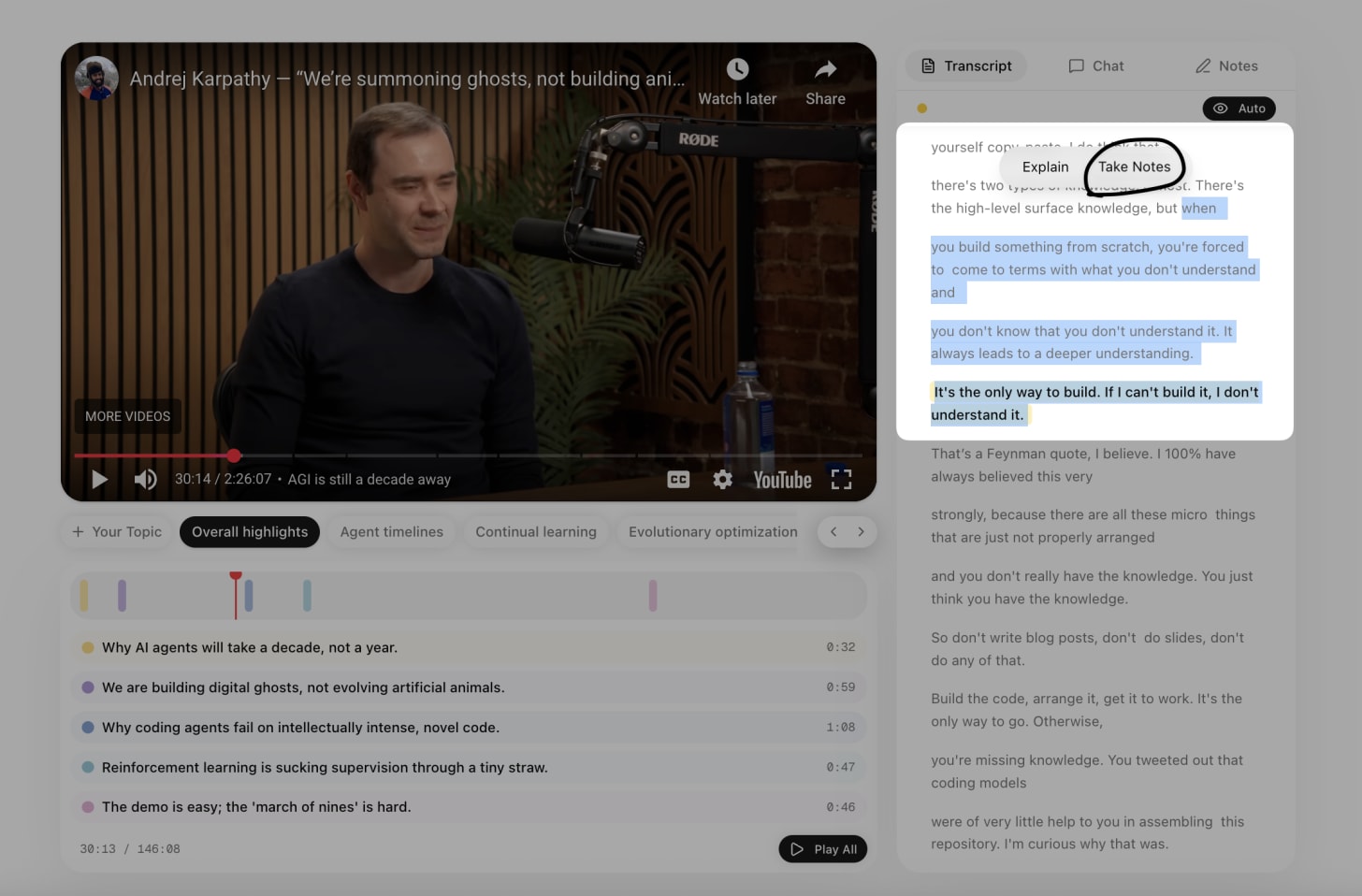

2. When you come across a memorable line, you can select it in the transcript and click “Take notes”, and it’s automatically added to your personal notebook.


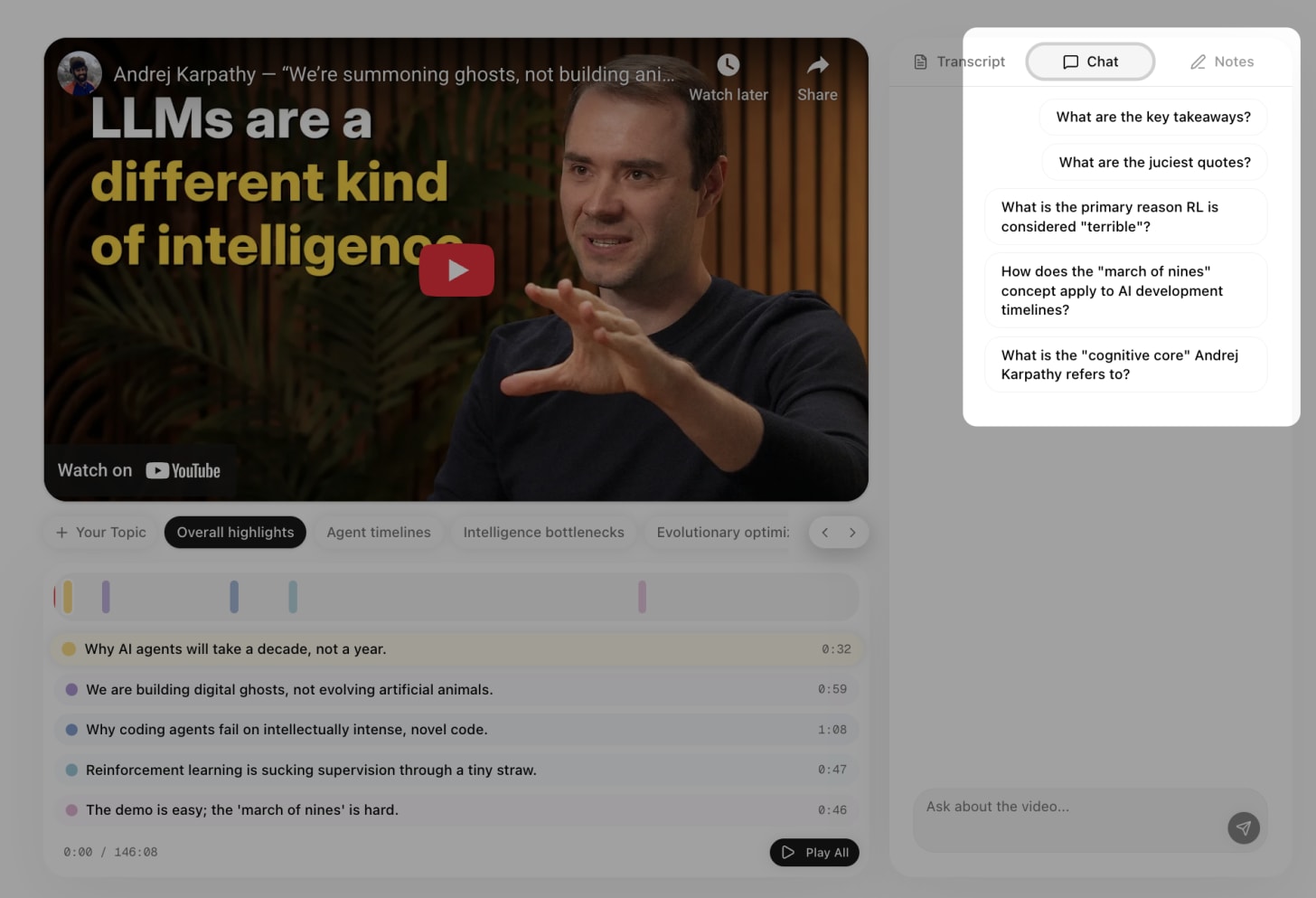

3. You can chat with the video transcript. Ask questions such as “what are the juiciest quotes” or “how does the speaker feel about [insert topic]”.


4. All your past videos and notes are saved in one central place, so TLDW can become your personal learning hub.

Things we’re planning to add in the future:

1. Being able to share quotes/clips easily with other people/on social media, so that you can learn in public

2. Multilingual support and adding translation features

3. Enhancing the personalization: the AI could learn about your taste and interests and recommend reels based on those

I worked on TLDW on nights and weekends with 2 amazing partners: Samual Zhang (developer) and Yiqi Yan (designer). This was not our full-time job; we’ve been working on it out of genuine passion for the problem space and a desire to get our hands dirty building in AI. We also open-sourced the code here.

The product is still very early and has a lot of room for improvement, so we’d appreciate your feedback: email me (zara.r.zhang@gmail.com) or tag me on X (zarazhangrui).