Building a Next-Generation Key-Value Store at Airbnb


How we completely rearchitected Mussel, our storage engine for derived data, and lessons learned from the migration from Mussel v1 to v2.

By Shravan Gaonkar, Chandramouli Rangarajan, Yanhan Zhang

Airbnb’s core key-value store, internally known as Mussel, bridges offline and online workloads, providing highly scalable bulk load capabilities combined with single-digit millisecond reads.

Since first writing about Mussel in a 2022 blog post, we have completely deprecated the storage backend of the original system (what we now call Mussel v1) and have replaced it with a NewSQL backend which we are referring to as Mussel v2. Mussel v2 has been running successfully in production for a year, and we wanted to share why we undertook this rearchitecture, what the challenges were, and what benefits we got from it.

Why rearchitect

Mussel v1 reliably supported Airbnb for years, but new requirements — real-time fraud checks, instant personalization, dynamic pricing, and massive data — demand a platform that combines real-time streaming with bulk ingestion, all while being easy to manage.

Key Challenges with v1

Mussel v2 solves a number of issues with v1, delivering a scalable, cloud-native key-value store with predictable performance and minimal operational overhead.

- Operational complexity: Scaling or replacing nodes required multi-step Chef scripts on EC2; v2 uses Kubernetes manifests and automated rollouts, reducing hours of manual work to minutes.

- Capacity & hotspots: Static hash partitioning sometimes overloaded nodes, leading to latency spikes. v2’s dynamic range sharding and presplitting keep reads fast (p99 < 25ms), even for 100TB+ tables.

- Consistency flexibility: v1 offered limited consistency control. v2 lets teams choose between immediate or eventual consistency based on their SLA needs.

- Cost & Transparency: Resource usage in v1 was opaque. v2 adds namespace tenancy, quota enforcement, and dashboards, providing cost visibility and control.

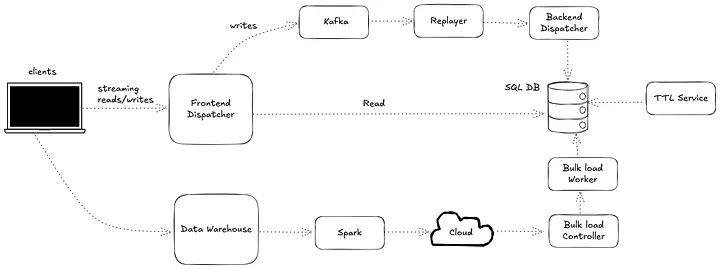

New architecture

Mussel v2 is a complete re-architecture addressing v1’s operational and scalability challenges. It’s designed to be automated, maintainable, and scalable, while ensuring feature parity and an easy migration for 100+ existing use cases.

Dispatcher

In Mussel v2, the Dispatcher is a stateless, horizontally-scalable Kubernetes service that replaces the tightly coupled, protocol-specific design of v1. It translates client API calls into backend queries/mutations, supports dual-write and shadow-read modes for migration, manages retries and rate limits, and integrates with Airbnb’s service mesh for security and service discovery.

Reads are simplified: Each dataname maps to a logical table, enabling optimized point lookups, range/prefix queries, and stale reads from local replicas to reduce latency. Dynamic throttling and prioritization maintain performance under changing traffic.

Writes are persisted in Kafka for durability first, with the Replayer and Write Dispatcher applying them in order to the backend. This event-driven model absorbs bursts, ensures consistency, and removes v1’s operational overhead. Kafka also underpins upgrades, bootstrapping, and migrations until CDC and snapshotting mature.
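The log-first write path can be pictured with a small in-memory sketch. This is only an illustration under stated assumptions: `WriteLog`, `Replayer`, and the dict standing in for the backend are hypothetical names, not Mussel or Kafka APIs. It shows a write being appended to a log first, and a separate replayer applying entries strictly in offset order, so the last write to a key wins and a repeated catch-up applies nothing twice.

```python
from dataclasses import dataclass, field


@dataclass
class WriteLog:
    """Hypothetical stand-in for one Kafka partition: an append-only list."""
    entries: list = field(default_factory=list)

    def append(self, key, value):
        self.entries.append((key, value))
        return len(self.entries) - 1  # offset of the entry just written


@dataclass
class Replayer:
    """Applies log entries to a backend (a plain dict here) in offset order."""
    log: WriteLog
    backend: dict = field(default_factory=dict)
    next_offset: int = 0  # first offset that has not been applied yet

    def catch_up(self):
        """Apply every unapplied entry and return how many were applied."""
        applied = 0
        while self.next_offset < len(self.log.entries):
            key, value = self.log.entries[self.next_offset]
            self.backend[key] = value
            self.next_offset += 1
            applied += 1
        return applied


log = WriteLog()
replayer = Replayer(log)
log.append("listing:1", "v1")
log.append("listing:1", "v2")  # a later write to the same key
log.append("listing:2", "a")
replayer.catch_up()
```

Because the replayer tracks the next unapplied offset, a burst of writes simply lengthens the log and is drained later, which is one way to read the "absorbs bursts" property described above.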

The architecture suits derived data and replay-heavy use cases today, with a long-term goal of shifting ingestion and replication fully to the distributed backend database to bring down latency and simplify operations.

Bulk load

Bulk load remains essential for moving large datasets from offline warehouses into Mussel for low-latency queries. v2 preserves v1 semantics, supporting both “merge” (add to existing tables) and “replace” (swap datasets) modes.

To maintain a familiar interface, v2 keeps the existing Airflow-based onboarding and transforms warehouse data into a standardized format, uploading to S3 for ingestion. Airflow is an open-source platform for authoring, scheduling, and monitoring data pipelines. Created at Airbnb, it lets users define workflows in code as directed acyclic graphs (DAGs), enabling quick iteration and easy orchestration of tasks for data engineers and scientists worldwide.

A stateless controller orchestrates jobs, while a distributed, stateful worker fleet (Kubernetes StatefulSets) performs parallel ingestion, loading records from S3 into tables. Optimizations — like deduplication for replace jobs, delta merges, and insert-on-duplicate-key-ignore — ensure high throughput and efficient writes at Airbnb scale.
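To make the two load modes concrete, here is a minimal sketch over plain dicts. The function names and the `ignore_duplicates` flag are hypothetical, and whether a merge overwrites an existing key by default is an assumption made for the example; the post does not specify it.

```python
def merge_load(table, records, ignore_duplicates=False):
    """Return a copy of `table` with `records` added.

    With ignore_duplicates=True, keys already present keep their current
    value (an insert-on-duplicate-key-ignore style of write). Otherwise the
    incoming value overwrites the existing one (an assumption in this sketch).
    """
    result = dict(table)
    for key, value in records:
        if ignore_duplicates and key in result:
            continue
        result[key] = value
    return result


def replace_load(records):
    """Build a fresh table from the incoming dataset only.

    Duplicate keys inside the dataset collapse to the last value seen,
    so each key is written once.
    """
    fresh = {}
    for key, value in records:
        fresh[key] = value
    return fresh


existing = {"a": 1, "b": 2}
incoming = [("b", 20), ("c", 30)]
merged = merge_load(existing, incoming)
merged_ignore = merge_load(existing, incoming, ignore_duplicates=True)
replaced = replace_load(incoming)
```

In this sketch "replace" never reads the old table at all, which mirrors the idea of swapping in a new dataset rather than patching the old one.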

TTL

Automated data expiration (TTL) can help support data governance goals and storage efficiency. In v1, expiration relied on the storage engine’s compaction cycle, which struggled at scale.

Mussel v2 introduces a topology-aware expiration service that shards data namespaces into range-based subtasks processed concurrently by multiple workers. Expired records are scanned and deleted in parallel, minimizing sweep time for large datasets. Subtasks are scheduled to limit impact on live queries, and write-heavy tables use max-version enforcement with targeted deletes to maintain performance and data hygiene.
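The range-based sweep can be sketched as follows. Everything here is illustrative: the `expires_at` field, the function names, and the use of a thread pool are assumptions, not the expiration service's actual design. To keep the toy example safe on a shared dict, it scans ranges concurrently and then deletes in a single pass, whereas the service described above also deletes in parallel.

```python
from concurrent.futures import ThreadPoolExecutor


def split_ranges(sorted_keys, num_subtasks):
    """Split a sorted key list into contiguous, non-overlapping chunks."""
    if not sorted_keys:
        return []
    size = -(-len(sorted_keys) // num_subtasks)  # ceiling division
    return [sorted_keys[i:i + size] for i in range(0, len(sorted_keys), size)]


def find_expired(table, keys, now):
    """Scan one key range and return the keys whose expiry time has passed."""
    return [k for k in keys if table[k]["expires_at"] <= now]


def sweep(table, now, num_subtasks=4):
    """Scan all ranges concurrently, then delete the expired keys."""
    ranges = split_ranges(sorted(table), num_subtasks)
    with ThreadPoolExecutor(max_workers=num_subtasks) as pool:
        # pool.map returns results in the same order as `ranges`.
        results = list(pool.map(lambda r: find_expired(table, r, now), ranges))
    expired = [k for chunk in results for k in chunk]
    for k in expired:
        del table[k]
    return expired


table = {f"k{i}": {"expires_at": i} for i in range(10)}
expired = sweep(table, now=4)
```

Splitting by key range rather than by worker count alone means each subtask touches a contiguous slice of the table, which is what lets a scheduler pace individual subtasks to limit impact on live queries.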

These enhancements provide the same retention functionality as v1 but with far greater efficiency, transparency, and scalability, meeting Airbnb’s modern data platform demands and enabling future use cases.

The migration process

Challenge

Mussel stores vast amounts of data and serves thousands of tables across a wide array of Airbnb services, sustaining mission-critical read and write traffic at high scale. Given the criticality of Mussel to Airbnb’s online traffic, our migration goal was straightforward but challenging: Move all data and traffic from Mussel v1 to v2 with zero data loss and no impact on availability to our customers.

Process

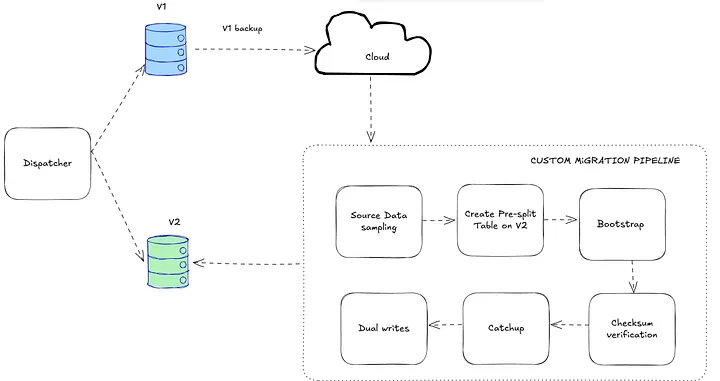

We adopted a blue/green migration strategy, but with notable complexities. Mussel v1 didn’t provide table-level snapshots or CDC streams, which are standard in many datastores. To bridge this gap, we developed a custom migration pipeline capable of bootstrapping tables to v2, selected by usage patterns and risk profiles. Once bootstrapped, dual writes were enabled on a per-table basis to keep v2 in sync as the migration progressed.

The migration itself followed several distinct stages:

- Blue Zone: All traffic initially flowed to v1 (“Blue”). This provided a stable baseline as we migrated data behind the scenes.

- Shadowing (Green): Once tables were bootstrapped, v2 (“Green”) began shadowing v1 — handling reads/writes in parallel, but only v1 responded. This allowed us to check v2’s correctness and performance without risk.

- Reverse: After building confidence, v2 took over active traffic while v1 remained on standby. We built automatic circuit breakers and fallback logic: If v2 showed elevated error rates or lagged behind v1, we could instantly return traffic to v1 or revert to shadowing.

- Cutover: When v2 passed all checks, we completed the cutover on a dataname-by-dataname basis, with Kafka serving as a robust intermediary for write reliability throughout.

To further de-risk the process, migration was performed one table at a time. Every step was reversible and could be fine-tuned per table or group of tables based on their risk profile. This granular, staged approach allowed for rapid iteration, safe rollbacks, and continuous progress without impacting the business.
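The per-table, reversible staging can be modeled as a small state machine. This is a sketch under assumptions: the `Stage` names follow the list above, but `TableMigration`, `maybe_fall_back`, and the error-rate threshold are hypothetical and only illustrate the shape of the fallback logic.

```python
from enum import Enum


class Stage(Enum):
    BLUE = 1      # v1 serves all traffic
    SHADOW = 2    # v1 responds, v2 handles the same traffic in parallel
    REVERSE = 3   # v2 responds, v1 stays on standby
    CUTOVER = 4   # v2 only


class TableMigration:
    """Tracks the migration stage of a single table."""

    def __init__(self, table):
        self.table = table
        self.stage = Stage.BLUE

    def advance(self):
        """Move one stage forward, stopping at CUTOVER."""
        if self.stage is not Stage.CUTOVER:
            self.stage = Stage(self.stage.value + 1)
        return self.stage

    def roll_back(self):
        """Move one stage backward, stopping at BLUE."""
        if self.stage is not Stage.BLUE:
            self.stage = Stage(self.stage.value - 1)
        return self.stage

    def responder(self):
        """Which backend's answer is returned to the caller."""
        return "v1" if self.stage in (Stage.BLUE, Stage.SHADOW) else "v2"

    def maybe_fall_back(self, v2_error_rate, threshold=0.01):
        """Hypothetical circuit breaker: leave REVERSE if v2 looks unhealthy."""
        if self.stage is Stage.REVERSE and v2_error_rate > threshold:
            self.roll_back()
        return self.stage


migration = TableMigration("example_table")
migration.advance()  # BLUE -> SHADOW
migration.advance()  # SHADOW -> REVERSE
```

Keeping one such object per table is what makes it possible to hold a risky table in shadowing while lower-risk tables move ahead.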

Migration pipeline

As described in our previous blog post, the v1 architecture uses Kafka as a replication log — data is first written to Kafka, then consumed by the v1 backend. During the data migration to v2, we leveraged the same Kafka stream to maintain eventual consistency between v1 and v2.

To migrate any given table from v1 to v2, we built a custom pipeline consisting of the following steps:

- Source data sampling: We download backup data from v1, extract the relevant tables, and sample the data to understand its distribution.

- Create pre-split table on v2: Based on the sampling results, we create a corresponding v2 table with a pre-defined shard layout to minimize data reshuffling during migration.

- Bootstrap: This is the most time-consuming step, taking hours or even days depending on table size. To bootstrap efficiently, we use Kubernetes StatefulSets to persist local state and periodically checkpoint progress.

- Checksum verification: We verify that all data from the v1 backup has been correctly ingested into v2.

- Catch-up: We apply any lagging messages that accumulated in Kafka during the bootstrap phase.

- Dual writes: At this stage, both v1 and v2 consume from the same Kafka topic. We ensure eventual consistency between the two, with replication lag typically within tens of milliseconds.
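The sampling and pre-split steps can be illustrated with a short sketch. It assumes the sample is a plain list of keys and picks shard boundaries at evenly spaced quantiles; `split_points` and `shard_for` are hypothetical helpers, and the real pipeline may choose boundaries differently.

```python
import bisect


def split_points(sampled_keys, num_shards):
    """Pick num_shards - 1 boundary keys at evenly spaced quantiles."""
    keys = sorted(sampled_keys)
    if num_shards < 2 or not keys:
        return []
    return [keys[(i * len(keys)) // num_shards] for i in range(1, num_shards)]


def shard_for(key, points):
    """Index of the shard whose key range contains `key`."""
    return bisect.bisect_right(points, key)


sample = ["a", "b", "c", "d", "e", "f", "g", "h"]
points = split_points(sample, 4)

# Count how many sampled keys land in each shard.
counts = [0] * 4
for key in sample:
    counts[shard_for(key, points)] += 1
```

With a representative sample, each shard receives a similar share of keys, so a bootstrap that inserts keys in sorted order spreads its writes across shards instead of hammering one. A heavily skewed sample can yield repeated boundary keys, which a real implementation would need to deduplicate.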

Once data migration is complete and we enter dual write mode, we can begin the read traffic migration phase. During this phase, our dispatcher can be dynamically configured to serve read requests for specific tables from v1, while sending shadow requests to v2 for consistency checks. We then gradually shift to serving reads from v2, accompanied by reverse shadow requests to v1 for consistency checks, which also enables quick fallback to v1 responses if v2 becomes unstable. Eventually, we fully transition to serving all read traffic from v2.
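A shadow read can be sketched in a few lines. The assumptions are that each backend exposes a `get(key)` method (dicts are used here) and that mismatches are simply collected in a list; `shadow_read` is a hypothetical helper, not the Dispatcher's API. Swapping the two backend arguments gives the reverse-shadow mode.

```python
def shadow_read(key, primary, shadow, mismatches):
    """Return the primary's value; also read the shadow and record differences.

    A failure on the shadow side is recorded but never changes the response.
    """
    value = primary.get(key)
    try:
        shadow_value = shadow.get(key)
        if shadow_value != value:
            mismatches.append((key, value, shadow_value))
    except Exception as exc:  # the shadow must not affect the caller
        mismatches.append((key, value, repr(exc)))
    return value


v1 = {"a": 1, "b": 2}
v2 = {"a": 1, "b": 3}
mismatches = []

served_a = shadow_read("a", v1, v2, mismatches)  # v1 serves, v2 shadows
served_b = shadow_read("b", v1, v2, mismatches)  # values disagree
reverse_b = shadow_read("b", v2, v1, [])         # v2 serves, v1 shadows
```

In a production system the shadow request would normally be issued asynchronously so that it adds no latency to the served read; the synchronous call here only keeps the example short.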

Lessons learned

Several key insights emerged from this migration:

- Consistency complexity: Migrating from an eventually consistent (v1) to a strongly consistent (v2) backend introduced new challenges, particularly around write conflicts. Addressing these required features like write deduplication, hotkey blocking, and lazy write repair — sometimes trading off storage cost or read performance.

- Presplitting is critical: As we shifted from hash-based (v1) to range-based partitioning (v2), inserting large consecutive data could cause hotspots and disrupt our v2 backend. To prevent this, we needed to accurately sample the v1 data and presplit it into multiple shards based on v2’s topology, ensuring balanced ingestion traffic across backend nodes during data migration.

- Query model adjustments: v2 doesn’t push down range filters as effectively, requiring us to implement client-side pagination for prefix and range queries.

- Freshness vs. cost: Different use cases required different tradeoffs. Some prioritized data freshness and used primary replicas for the latest reads, while others leveraged secondary replicas to balance staleness with cost and performance.

- Kafka’s role: Kafka’s stable, millisecond-level p99 latency made it an invaluable part of our migration process.

- Building in flexibility: Customer retries and routine bulk jobs provided a safety net for the rare inconsistencies, and our migration design allowed for per-table stage assignments and instant reversibility — key for managing risk at scale.
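The client-side pagination mentioned under query model adjustments can be sketched against a toy sorted store. `SortedStore`, its `scan(start_key, limit)` method, and `prefix_query` are hypothetical and stand in for whatever range-scan primitive the backend exposes.

```python
import bisect


class SortedStore:
    """Toy range-ordered backend: scan from a start key with a row limit."""

    def __init__(self, items):
        self.items = dict(items)
        self.keys = sorted(self.items)

    def scan(self, start_key, limit):
        i = bisect.bisect_left(self.keys, start_key)
        return [(k, self.items[k]) for k in self.keys[i:i + limit]]


def prefix_query(store, prefix, page_size=2):
    """Yield every (key, value) whose key starts with `prefix`, page by page."""
    start = prefix
    while True:
        page = store.scan(start, page_size)
        for key, value in page:
            if not key.startswith(prefix):
                return  # keys are sorted, so no later key can match
            yield key, value
        if len(page) < page_size:
            return  # short page: the scan reached the end of the table
        # Resume just after the last key seen; appending "\x00" gives the
        # smallest string that sorts strictly after it.
        start = page[-1][0] + "\x00"


store = SortedStore({
    "item:1": "x",
    "user:1": "a",
    "user:2": "b",
    "user:3": "c",
    "video:9": "z",
})
users = list(prefix_query(store, "user:"))
```

The client applies the prefix filter itself and stops at the first non-matching key, so the backend only ever needs to support a bounded scan from a start key.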

As a result, we migrated more than a petabyte of data across thousands of tables with zero downtime or data loss, thanks to a blue/green rollout, dual-write pipeline, and automated fallbacks — so the product teams could keep shipping features while the engine under them evolved.

Conclusion and next steps

What sets Mussel v2 apart is the way it fuses capabilities that are usually confined to separate, specialized systems. In our deployment of Mussel v2, we observe that this system can simultaneously

- ingest tens of terabytes in bulk data upload,

- sustain 100k+ streaming writes per second in the same cluster, and

- keep p99 reads under 25 ms

— all while giving callers a simple dial to toggle stale reads on a per-namespace basis. By pairing a NewSQL backend with a Kubernetes-native control plane, Mussel v2 delivers the elasticity of object storage, the responsiveness of a low-latency cache, and the operability of modern service meshes — rolled into one platform. Engineers no longer need to stitch together a cache, a queue, and a datastore to hit their SLAs; Mussel provides those guarantees out of the box, letting teams focus on product innovation instead of data plumbing.

Looking ahead, we’ll be sharing deeper insights into how we’re evolving quality of service (QoS) management within Mussel, now orchestrated cleanly from the Dispatcher layer. We’ll also describe our journey in optimizing bulk loading at scale — unlocking new performance and reliability wins for complex data pipelines. If you’re passionate about building large-scale distributed systems and want to help shape the future of data infrastructure at Airbnb, take a look at our Careers page — we’re always looking for talented engineers to join us on this mission.