Caching Without Marshal Part 2: The Path to MessagePack

In part one of Caching Without Marshal, we dove into the internals of Marshal, Ruby’s built-in binary serialization format. Marshal is the black box that Rails uses under the hood to transform almost any object into binary data and back. Caching, in particular, depends heavily on Marshal: Rails uses it to cache pretty much everything, be it actions, pages, partials, or anything else.

Marshal’s magic is convenient, but it comes with risks. Part one presented a deep dive into some of the little-documented internals of Marshal with the goal of ultimately replacing it with a more robust cache format. In particular, we wanted a cache format that would not blow up when we shipped code changes.

Part two is all about MessagePack, the format that did this for us. It’s a binary serialization format, and in this sense it’s similar to Marshal. Its key difference is that whereas Marshal is a Ruby-specific format, MessagePack is generic by default. There are MessagePack libraries for Java, Python, and many other languages.

You may not know MessagePack, but if you’re using Rails, chances are you’ve got it in your Gemfile because it’s a dependency of Bootsnap.

The MessagePack Format

On the surface, MessagePack is similar to Marshal: just replace .dump with .pack and .load with .unpack. For many payloads, the two are interchangeable.

Here’s an example of using MessagePack to encode and decode a hash:

MessagePack supports a set of core types that are similar to those of Marshal: nil, integers, booleans, floats, and a type called raw, covering strings and binary data. It also has composite types for array and map (that is, a hash).

Notice, however, that the Ruby-specific types that Marshal supports, like Object and instance variable, aren’t in that list. This isn’t surprising since MessagePack is a generic format and not a Ruby format. But for us, this is a big advantage since it’s exactly the encoding of Ruby-specific types that caused our original problems (recall the beta flag class names in cache payloads from Part One).

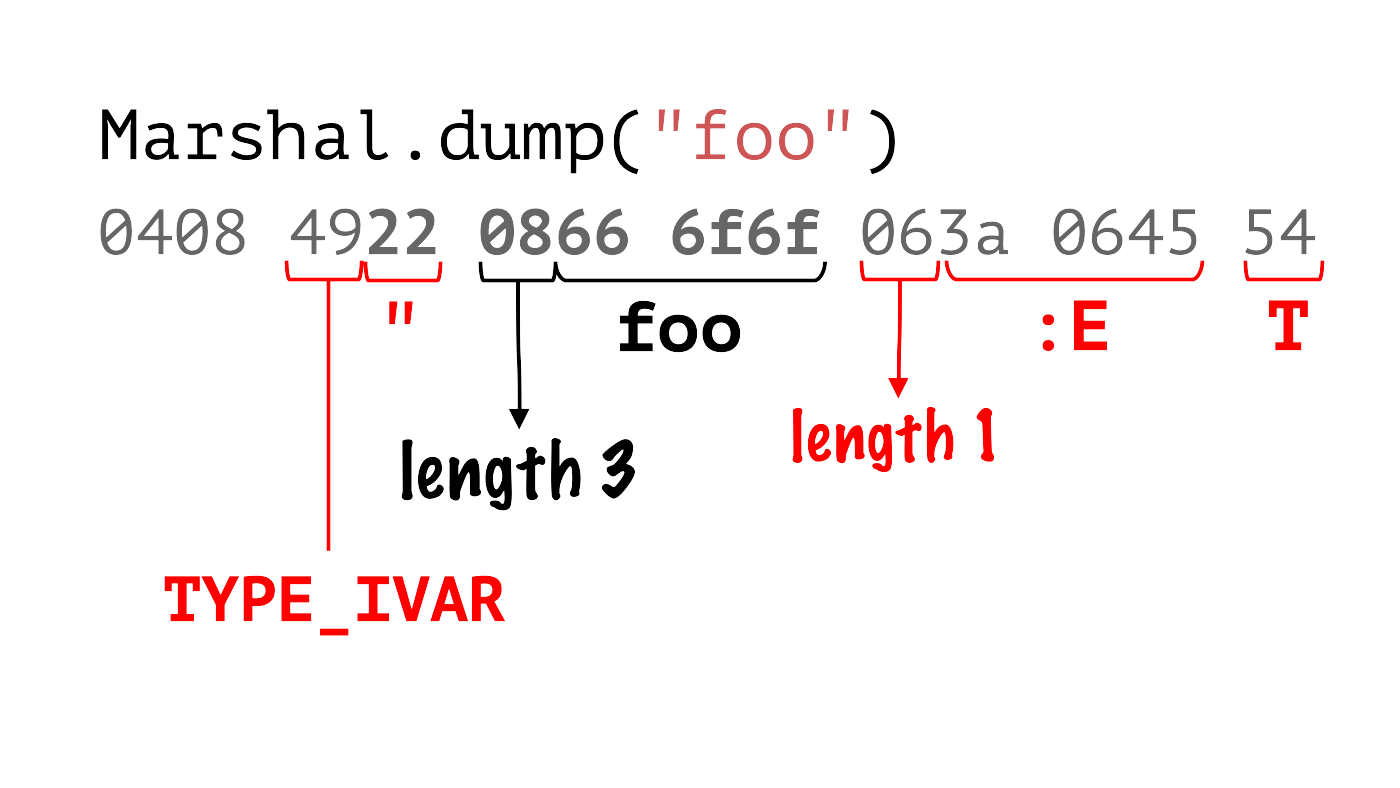

Let’s take a closer look at the encoded data of Marshal and MessagePack. Suppose we encode the string "foo" with Marshal. This is what we get:

Encoded data from Marshal for Marshal.dump("foo")

Let’s look at the payload: 0408 4922 0866 6f6f 063a 0645 54. The first two bytes (0408) are Marshal’s version header, 4.8. The string "foo" is encoded in hex as 666f6f and prefixed by 08, representing a length of 3 (Marshal stores small integers offset by 5). Marshal wraps this string payload in a TYPE_IVAR (49), which as mentioned in part 1 is used to attach instance variables to types that aren’t strictly implemented as objects, like strings. In this case, the instance variable (3a 0645) is named :E. This is a special instance variable used by Ruby to represent the string’s encoding, which is T (54) for true, that is, this is a UTF-8 encoded string. So Marshal uses a Ruby-native idea to encode the string’s encoding.
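These bytes are easy to check from a Ruby console. The snippet below is a small sketch using only the standard library; the variable names are illustrative. It also dumps a binary string to show that the :E instance variable disappears when there is no text encoding to record.

```ruby
# Dump a UTF-8 string and view the raw bytes as hex.
utf8_hex = Marshal.dump("foo".encode("UTF-8")).unpack1("H*")
# 0408   Marshal version 4.8
# 49     "I", TYPE_IVAR (an object followed by instance variables)
# 22     '"', a string; 08 is its length (3, offset by 5); 666f6f is "foo"
# 06     one instance variable follows (1, offset by 5)
# 3a0645 the symbol :E; 54 is "T" (true), meaning UTF-8
puts utf8_hex

# A binary string has no encoding to record, so there is no TYPE_IVAR wrapper.
binary_hex = Marshal.dump("foo".b).unpack1("H*")
puts binary_hex
```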

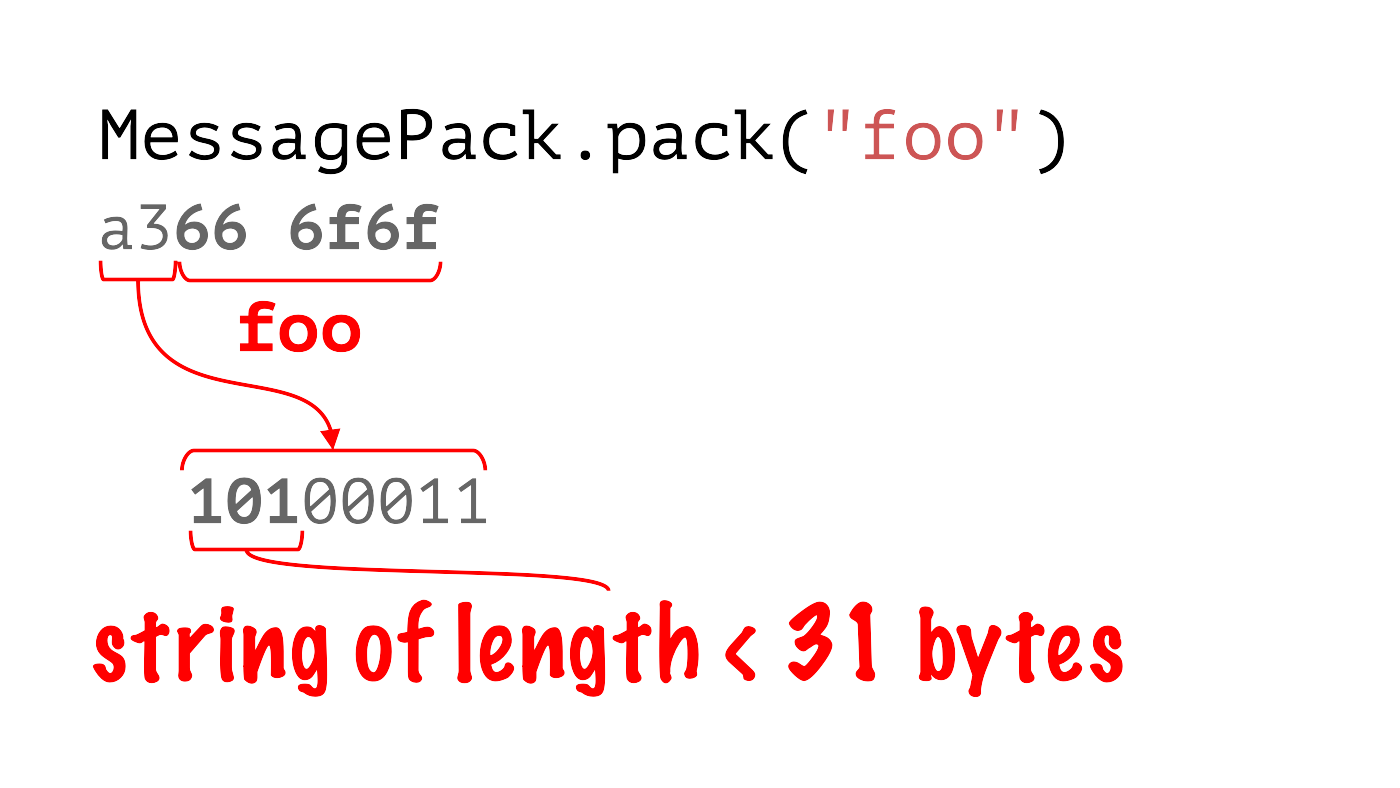

In MessagePack, the payload (a366 6f6f) is much shorter:

Encoded data from MessagePack for MessagePack.pack("foo")

The first thing you’ll notice is that there isn’t an encoding. MessagePack’s default encoding is UTF-8, so there’s no need to include it in the payload. Also note that the payload type (10100011), String, is encoded together with its length: the bits 101 mark a string of up to 31 bytes, and 00011 says the actual length is 3 bytes. Altogether this makes for a very compact encoding of a string.
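To make the bit layout concrete, here is a hand-rolled sketch of that short-string header. It does not use the msgpack gem; pack_fixstr and unpack_fixstr are hypothetical helper names that only cover strings of up to 31 bytes.

```ruby
# Hand-rolled sketch of MessagePack's short string layout (not the msgpack gem).
def pack_fixstr(string)
  bytes = string.b
  raise ArgumentError, "this layout holds at most 31 bytes" if bytes.bytesize > 31

  header = 0b1010_0000 | bytes.bytesize # 101 type bits followed by 5 length bits
  [header].pack("C") + bytes
end

def unpack_fixstr(payload)
  header = payload.getbyte(0)
  raise ArgumentError, "not a short string" unless header >> 5 == 0b101

  length = header & 0b0001_1111 # the low 5 bits hold the length
  payload.byteslice(1, length).force_encoding("UTF-8")
end

packed = pack_fixstr("foo")
header_bits = format("%08b", packed.getbyte(0))
puts packed.unpack1("H*")
puts header_bits
puts unpack_fixstr(packed)
```

Because the type and the length share one byte, the whole payload is only one byte longer than the string itself.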

Extension Types

After deciding to give MessagePack a try, we did a search for Rails.cache.write and Rails.cache.read in the codebase of our core monolith, to figure out roughly what was going into the cache. We found a bunch of stuff that wasn’t among the types MessagePack supported out of the box.

Luckily for us, MessagePack has a killer feature that came in handy: extension types. Extension types are custom types that you can define by calling register_type on an instance of MessagePack::Factory, like this:

An extension type is made up of the type code (a number from 0 to 127—there’s a maximum of 128 extension types), the class of the type, and a serializer and deserializer, referred to as packer and unpacker. Note that the type is also applied to subclasses of the type’s class. Now, this is usually what you want, but it’s something to be aware of and can come back to bite you if you’re not careful.

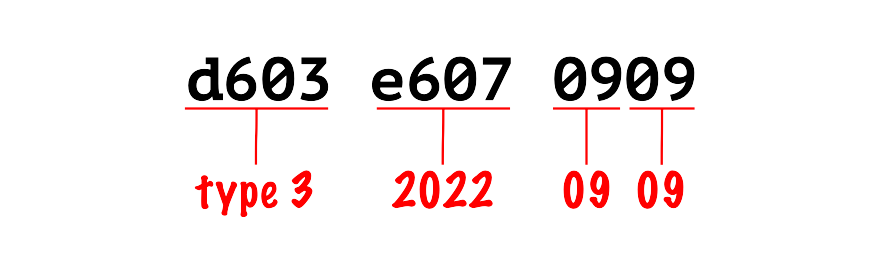

Here’s the Date extension type, the simplest of the extension types we use in the core monolith in production:

As you can see, the code for this type is 3, and its class is Date. Its packer takes a date and extracts the date’s year, month, and day. It then packs them with Array#pack using the format string "s< C C": the year as a 16-bit signed little-endian integer, and the month and day as 8-bit unsigned integers. The type’s unpacker goes the other way: it takes a string and, using the same format string, extracts the year, month, and day using String#unpack, then passes them to Date.new to create a new date object.
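The packer and unpacker logic can be tried on its own, without MessagePack. In this sketch the two lambdas follow the description above (with the msgpack gem they would be passed as the packer and unpacker of the extension type), and the sample date is an arbitrary choice for illustration.

```ruby
require "date"

# Packer: year as a 16-bit signed little-endian integer, month and day as bytes.
pack_date = ->(date) { [date.year, date.month, date.day].pack("s< C C") }

# Unpacker: read the three numbers back and rebuild the Date.
unpack_date = ->(payload) { Date.new(*payload.unpack("s< C C")) }

sample = Date.new(2022, 9, 9) # arbitrary sample date
payload = pack_date.call(sample)

puts payload.bytesize       # 2 bytes for the year, 1 each for month and day
puts payload.unpack1("H*")  # the year 2022 is 0x07e6, stored low byte first
puts unpack_date.call(payload) == sample
```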

Here’s how we would encode an actual date with this factory:

Converting the result to hex, we get d603 e607 0909, which corresponds to the date (e607 0909) prefixed by the extension header (d603): d6 marks an extension with four bytes of data, and 03 is the type code:

Encoded date from the factory

As you can see, the encoded date is compact. Extension types give us the flexibility to encode pretty much anything we might want to put into the cache in a format that suits our needs.
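To see how the header and the payload fit together, the six bytes can be assembled by hand. This is a sketch of the framing only, assuming the four-byte extension layout from the MessagePack format; frame_fixext4 is a hypothetical helper, not part of the msgpack gem.

```ruby
FIXEXT4 = 0xd6 # header byte for an extension carrying exactly 4 bytes of data

# Frame a 4-byte payload as an extension value: header, type code, data.
def frame_fixext4(type_code, data)
  raise ArgumentError, "payload must be exactly 4 bytes" unless data.bytesize == 4

  [FIXEXT4, type_code].pack("C c") + data.b
end

date_payload = [2022, 9, 9].pack("s< C C") # year, month, day
framed = frame_fixext4(3, date_payload)    # 3 is the Date type code
puts framed.unpack1("H*")
```

The overhead is two bytes, whatever the extension type does with the four bytes that follow.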

Just Say No

If this were the end of the story, though, we wouldn’t really have had enough to go with MessagePack in our cache. Remember our original problem: we had a payload containing objects whose classes changed, breaking on deploy when they were loaded into old code that didn’t have those classes defined. To prevent that problem, we need to stop those classes from going into the cache in the first place.

We need MessagePack, in other words, to refuse encoding any object without a defined type, and also let us catch these types so we can follow up. Luckily for us, MessagePack does this. It’s not the kind of “killer feature” that’s advertised as such, but it’s enough for our needs.

Take this example, where factory is the factory we created previously:

If MessagePack were to happily encode this—without any Object type defined—we’d have a problem. But as mentioned earlier, MessagePack doesn’t know Ruby objects by default and has no way to encode them unless you give it one.

So what actually happens when you try this? You get an error like this:

NoMethodError: undefined method `to_msgpack' for #<Object:0x...>

Notice that MessagePack traversed the entire object, through the hash, into the array, until it hit the Object instance. At that point, it found something for which it had no type defined and basically blew up.

The way it blew up is perhaps not ideal, but it’s enough. We can rescue this exception, check the message, figure out it came from MessagePack, and respond appropriately. Critically, the exception contains a reference to the object that failed to encode. That’s information we can log and use to later decide if we need a new extension type, or if we are perhaps putting things into the cache that we shouldn’t be.
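As a rough sketch of that rescue, the snippet below uses a stand-in encoder that, like MessagePack, ends up calling to_msgpack on a value that doesn’t define it. The helper names are hypothetical. Instead of matching on the message text, it checks the failed method name, and it reads the offending object from the exception’s receiver.

```ruby
# Stand-in for the real encoder: calls #to_msgpack, which a plain Object
# does not define. Hypothetical helper for illustration only.
def encode(value)
  value.to_msgpack
end

# Returns the object that could not be encoded, or nil if encoding worked.
def unsupported_object(value)
  encode(value)
  nil
rescue NoMethodError => error
  raise unless error.name == :to_msgpack # unrelated NoMethodError: re-raise

  error.receiver # the object that has no way to encode itself
end

culprit = Object.new
found = unsupported_object(culprit)
puts found.class
```

The class of the returned object is the piece of information worth logging.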

The Migration

Now that we’ve looked at Marshal and MessagePack, we’re ready to explain how we actually made the switch from one to the other.

Making the Switch

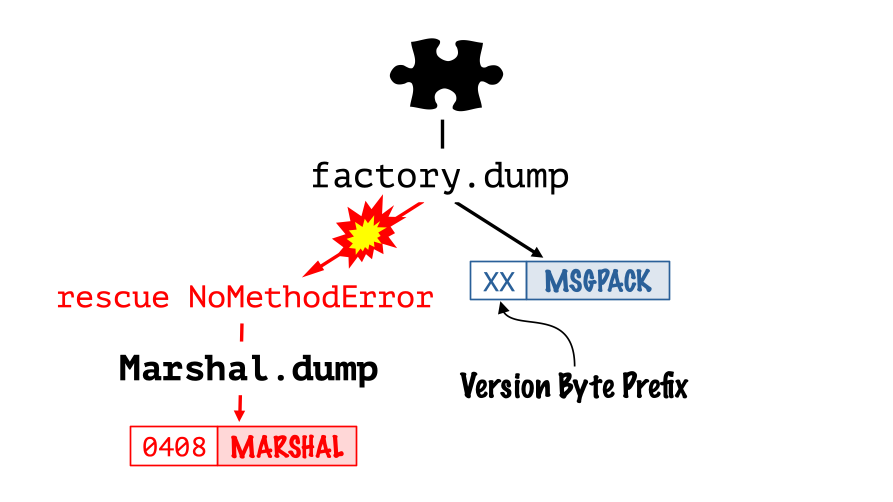

Our migration wasn’t instantaneous. We ran with the two side-by-side for a period of about six months while we figured out what was going into the cache and which extension types we needed. The path of the migration, however, was actually quite simple. Here’s the basic step-by-step process:

- First, we created a MessagePack factory with our extension types defined on it and used it to encode the mystery object passed to the cache (the puzzle piece in the diagram below).

- If MessagePack was able to encode it, great! We prepended a version byte, which we used to track which extension types were defined for the payload, and then we put the pair into the cache.

- If, on the other hand, the object failed to encode, we rescued the NoMethodError which, as mentioned earlier, MessagePack raises in this situation. We then fell back to Marshal and put the Marshal-encoded payload into the cache. Note that when decoding, we were able to tell which payloads were Marshal-encoded by their prefix: if it’s 0408, it’s a Marshal-encoded payload; otherwise it’s MessagePack.

The migration three step process

The step where we rescued the NoMethodError was quite important in this process since it was where we were able to log data on what was actually going into the cache. Here’s that rescue code (which of course no longer exists now since we’re fully migrated to MessagePack):

As you can see, we sent data (including the class of the object that failed to encode) to both logs and StatsD. These logs were crucial in flagging the need for new extension types, and also in signaling to us when there were things going into the cache that shouldn’t ever have been there in the first place.
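The original listing isn’t reproduced here. As a minimal sketch of the flow described in the steps above, the following coder tries a primary encoder first, falls back to Marshal on failure, and reports the failing class through a callback. Everything in it is an assumption made for illustration: the class name, the version byte value, the on_fallback hook (standing in for logging and StatsD), and ToyPrimary, a stand-in for a MessagePack factory that only understands strings.

```ruby
class FallbackCoder
  MARSHAL_SIGNATURE = "\x04\x08".b # Marshal payloads start with version 4.8
  VERSION_BYTE = "\x01".b          # hypothetical version prefix

  # primary must respond to dump/load and raise NoMethodError (:to_msgpack)
  # for objects it cannot encode, as MessagePack does.
  def initialize(primary, on_fallback: ->(klass) {})
    @primary = primary
    @on_fallback = on_fallback
  end

  def dump(value)
    VERSION_BYTE + @primary.dump(value)
  rescue NoMethodError => error
    raise unless error.name == :to_msgpack

    @on_fallback.call(error.receiver.class) # log it, bump a counter, etc.
    Marshal.dump(value)
  end

  def load(payload)
    if payload.start_with?(MARSHAL_SIGNATURE)
      Marshal.load(payload)
    else
      @primary.load(payload.byteslice(1..-1)) # strip the version byte
    end
  end
end

# Toy stand-in for a MessagePack factory: it handles strings only.
module ToyPrimary
  def self.dump(value)
    value.is_a?(String) ? value.b : value.to_msgpack # raises for other types
  end

  def self.load(bytes)
    bytes.dup.force_encoding("UTF-8")
  end
end

fallbacks = []
coder = FallbackCoder.new(ToyPrimary, on_fallback: ->(klass) { fallbacks << klass })

fast = coder.dump("hello") # version byte + primary payload
slow = coder.dump(1..3)    # not encodable by the toy encoder, so Marshal is used

puts coder.load(fast)
puts coder.load(slow).inspect
puts fallbacks.inspect
```

Keeping the reporting behind a callback means the coder itself doesn’t need to know where the data ends up.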

We started the migration process with a small set of default extension types which Jean Boussier, who worked with me on the cache project, had registered in our core monolith earlier for other work using MessagePack. There were five:

- Symbol (offered out of the box in the msgpack-ruby gem; it just has to be enabled)

- Time

- DateTime

- Date (shown earlier)

- BigDecimal

These were enough to get us started, but they were certainly not enough to cover all the variety of things that were going into the cache. In particular, being a Rails application, the core monolith serializes a lot of records, and we needed a way to serialize those records. We needed an extension type for ActiveRecord::Base.

Encoding Records

Records are defined by their attributes (roughly, the values in their table columns), so it might seem like you could just cache them by caching their attributes. And you can.

But there’s a problem: records have associations. Marshal encodes the full set of associations along with the cached record. This ensures that when the record is deserialized, the loaded associations (those that have already been fetched from the database) will be ready to go without any extra queries. An extension type that only caches attribute values, on the other hand, needs to make a new query to refetch those associations after coming out of the cache, making it much less efficient.

So we needed to cache loaded associations along with the record’s attributes. We did this with a serializer called ActiveRecordCoder. Here’s how it works. Consider a simple post model that has many comments, where each comment belongs to a post with an inverse defined:

Note that these models are circular: a comment’s post association leads to a post whose comments include that same comment. Recall that Marshal handles this kind of circularity automatically using the link type (@ symbol) we saw in part 1, but that MessagePack doesn’t handle circularity by default. We’ll have to implement something like a link type to make this encoder work.


Instance Tracker handles circularity

The trick we use for handling circularity involves something called an Instance Tracker. It tracks records encountered while traversing the record’s network of associations. The encoding algorithm builds a tree where each association is represented by its name (for example :comments or :post), and each record is represented by its unique index in the tracker. If we encounter an untracked record, we recursively traverse its network of associations, and if we’ve seen the record before, we simply encode it using its index.

This algorithm generates a very compact representation of a record’s associations. Combined with the records in the tracker, each encoded by its set of attributes, it provides a very concise representation of a record and its loaded associations.
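The traversal can be sketched in plain Ruby. This is not the ActiveRecordCoder implementation: Record is a hypothetical stand-in for an Active Record object that holds only a name and a hash of loaded associations, and the exact shape of the output tree is an assumption.

```ruby
# Hypothetical stand-in for a record: a name plus its loaded associations.
Record = Struct.new(:name, :associations)

class InstanceTracker
  attr_reader :instances

  def initialize
    @indices = {}.compare_by_identity # track object identity, not equality
    @instances = []
  end

  # Returns [index, seen_before] for the given record.
  def track(record)
    known = @indices[record]
    return [known, true] if known

    @indices[record] = @instances.size
    @instances << record
    [@instances.size - 1, false]
  end
end

def encode_associations(record, tracker)
  index, seen = tracker.track(record)
  return index if seen # already tracked: the index alone acts as a link

  children = record.associations.map do |name, target|
    encoded =
      if target.is_a?(Array)
        target.map { |item| encode_associations(item, tracker) }
      else
        encode_associations(target, tracker)
      end
    [name, encoded]
  end
  [index, children]
end

post = Record.new("post", {})
first = Record.new("first comment", { post: post })
second = Record.new("second comment", { post: post })
post.associations[:comments] = [first, second]

tracker = InstanceTracker.new
tree = encode_associations(post, tracker)
puts tree.inspect
puts tracker.instances.map(&:name).inspect
```

The post appears in full once, at index 0; each comment’s :post association collapses to that index, which is what stops the traversal from looping forever.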

Here’s what this representation looks like for the post with two comments shown earlier:

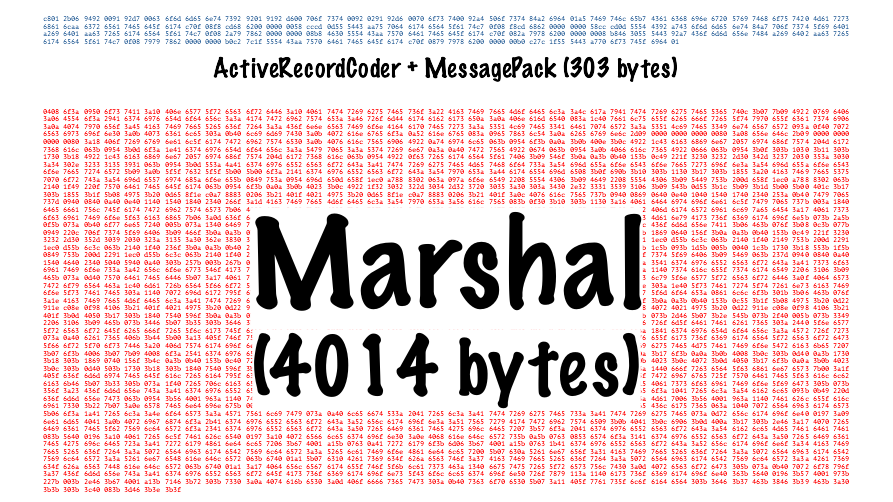

Once ActiveRecordCoder has generated this array of arrays, we can simply pass the result to MessagePack to encode it to a bytestring payload. For the post with two comments, this generates a payload of around 300 bytes. Considering that the Marshal payload for the post with no associations we looked at in Part 1 was 1,600 bytes in length, that’s not bad.

But what happens if we try to encode this post with its two comments using Marshal? The result is shown below: a payload over 4,000 bytes long. So the combination of ActiveRecordCoder with MessagePack is 13 times more space efficient than Marshal for this payload. That’s a pretty massive improvement.

ActiveRecordCoder + MessagePack vs Marshal

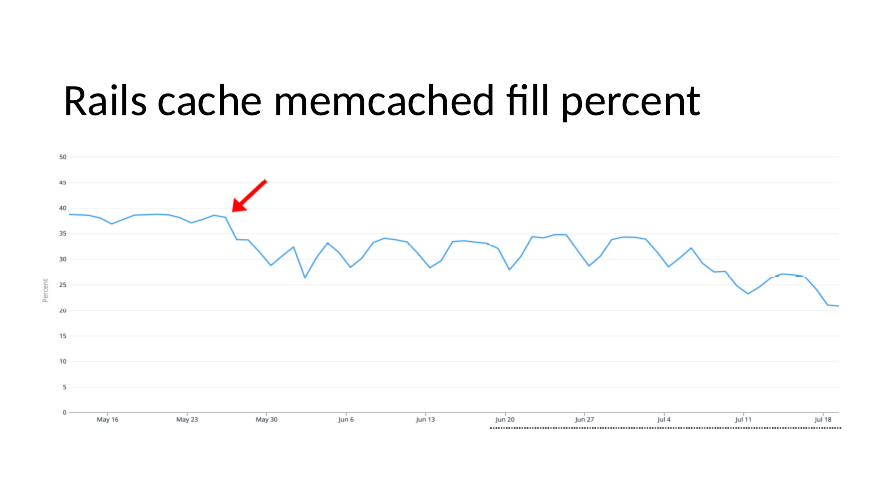

In fact, the space efficiency of the switch to MessagePack was so significant that we immediately saw the change in our data analytics. As you can see in the graph below, our Rails cache memcached fill percent dropped after the switch. Keep in mind that for many payloads, for example boolean- and integer-valued payloads, the change to MessagePack only made a small difference in terms of space efficiency. Nonetheless, the change for more complex objects like records was so significant that total cache usage dropped by over 25 percent.

Rails cache memcached fill percent versus time


Handling Change

You might have noticed that ActiveRecordCoder, our encoder for ActiveRecord::Base objects, includes the name of record classes and association names in encoded payloads. Although our coder doesn’t encode all instance variables in the payload, the fact that it hardcodes class names at all should be a red flag. Isn’t this exactly what got us into the mess of caching objects with Marshal in the first place?

And indeed, it is—but there are two key differences here.

First, since we control the encoding process, we can decide how and where to raise exceptions when class or association names change. So when decoding, if we find that a class or association name isn’t defined, we rescue the error and re-raise a more specific error. This is very different from what happens with Marshal.

Second, since this is a cache, and not, say, a persistent datastore like a database, we can afford to occasionally drop a cached payload if we know that it’s become stale. So this is precisely what we do. When we see one of the exceptions for missing class or association names, we rescue the exception and simply treat the cache fetch as a miss. Here’s what that code looks like:

The result of this strategy is effectively that during a deploy where class or association names change, cache payloads containing those names are invalidated, and the cache needs to replace them. This can effectively disable the cache for those keys during the period of the deploy, but once the new code has been fully released the cache again works as normal. This is a reasonable tradeoff, and a much more graceful way to handle code changes than what happens with Marshal.
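The listing itself isn’t shown here, so the following is only a sketch of the idea under simplifying assumptions: cached entries are pairs of class name and constructor arguments, the store is a plain Hash, and ClassMissingError, resolve_class, and read_entry are hypothetical names.

```ruby
class ClassMissingError < StandardError; end # hypothetical error class

# Decoding step: turn the class name stored in the payload back into a class,
# converting Ruby's generic NameError into a more specific error.
def resolve_class(name)
  Object.const_get(name)
rescue NameError
  raise ClassMissingError, "undefined class: #{name}"
end

# Cache read: a payload that refers to a missing class counts as a miss (nil)
# instead of raising into the application.
def read_entry(store, key)
  class_name, arguments = store.fetch(key) { return nil }
  resolve_class(class_name).new(*arguments)
rescue ClassMissingError
  nil
end

Point = Struct.new(:x, :y)

store = {
  "current" => ["Point", [1, 2]],
  "stale" => ["RemovedInThisDeploy", []] # written by code that no longer exists
}

puts read_entry(store, "current").inspect
puts read_entry(store, "stale").inspect
puts read_entry(store, "absent").inspect
```

From the caller’s point of view, a stale entry and an absent one look the same, so the normal regenerate-on-miss path takes care of both.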

Core Type Subclasses

With our migration plan and our encoder for ActiveRecord::Base, we were ready to embark on the first step of the migration to MessagePack. As we were preparing to ship the change, however, we noticed something was wrong on continuous integration (CI): some tests were failing on hash-valued cache payloads.

A closer inspection revealed a problem with HashWithIndifferentAccess, a subclass of Hash provided by ActiveSupport that makes symbols and strings work interchangeably as hash keys. Marshal handles subclasses of core types like this out of the box, so you can be sure that a HashWithIndifferentAccess that goes into a Marshal-backed cache will come back out as a HashWithIndifferentAccess and not a plain old Hash. The same cannot be said for MessagePack, unfortunately, as you can confirm yourself:

MessagePack doesn’t blow up here on the missing type because HashWithIndifferentAccess is a subclass of another type that it does support, namely Hash. This is a case where MessagePack’s default handling of subclasses can and will bite you; it would be better for us if this did blow up, so we could fall back to Marshal. We were lucky that our tests caught the issue before this ever went out to production.
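The difference is easy to see with a small experiment in plain Ruby. TaggedHash is a hypothetical stand-in for HashWithIndifferentAccess, and encode_by_core_type is a deliberately simplified model of an encoder that dispatches on core types; it is not msgpack-ruby’s actual code.

```ruby
class TaggedHash < Hash; end # hypothetical stand-in for a Hash subclass

original = TaggedHash.new
original[:locale] = "en"

# Marshal records the subclass, so it survives the round trip.
restored = Marshal.load(Marshal.dump(original))
puts restored.class

# Simplified model of core-type dispatch: anything that is a Hash is encoded
# as its key/value pairs, so the subclass is silently lost.
def encode_by_core_type(value)
  case value
  when Hash then value.to_a
  else raise ArgumentError, "unsupported type: #{value.class}"
  end
end

flattened = encode_by_core_type(original).to_h
puts flattened.class
```

Nothing fails in the second case, which is what makes this kind of bug easy to miss without tests.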

The problem was a tricky one to solve, though. You would think that defining an extension type for HashWithIndifferentAccess would resolve the issue, but it didn’t. In fact, MessagePack completely ignored the type and continued to serialize these payloads as hashes.

As it turns out, the issue was with msgpack-ruby itself. The code handling extension types didn’t trigger on subclasses of core types like Hash, so any extensions of those types had no effect. I made a pull request (PR) to fix the issue, and as of version 1.4.3, msgpack-ruby now supports extension types for Hash as well as Array, String, and Regexp.

The Long Tail of Types

With the fix for HashWithIndifferentAccess, we were ready to ship the first step in our migration to MessagePack in the cache. When we did this, we were pleased to see that MessagePack was successfully serializing 95 percent of payloads right off the bat without any issues. This was validation that our migration strategy and extension types were working.

Of course, it’s the last 5 percent that’s always the hardest, and indeed we faced a long tail of failing cache writes to resolve. We added types for commonly cached classes like ActiveSupport::TimeWithZone and Set, and edged closer to 100 percent, but we couldn’t quite get all the way there. There were just too many different things still being cached with Marshal.

At this point, we had to adjust our strategy. It wasn’t feasible to just let any developer define new extension types for whatever they needed to cache. Shopify has thousands of developers, and we would quickly hit MessagePack’s limit of 128 extension types.

Instead, we adopted a different strategy that helped us scale indefinitely to any number of types. We defined a catchall type for Object, the parent class for the vast majority of objects in Ruby. The Object extension type looks for two methods on any object: an instance method named as_pack and a class method named from_pack. If both are present, it considers the object packable, and uses as_pack as its serializer and from_pack as its deserializer. Here’s an example of a Task class that our encoder treats as packable:

Note that, as with the ActiveRecord::Base extension type, this approach relies on encoding class names. As mentioned earlier, we can do this safely since we handle class name changes gracefully as cache misses. This wouldn’t be a viable approach for a persistent store.
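As an illustration of the convention, here is a sketch of a packable class together with the check and the round trip around it. The Task attributes and the helpers packable?, pack_object, and unpack_object are assumptions made for this example; in the real setup this logic would live inside the Object extension type.

```ruby
class Task
  attr_reader :title, :done

  def initialize(title, done)
    @title = title
    @done = done
  end

  # Serializer: reduce the object to plain, encodable values.
  def as_pack
    [title, done]
  end

  # Deserializer: rebuild the object from those values.
  def self.from_pack(values)
    new(*values)
  end
end

def packable?(object)
  object.respond_to?(:as_pack) && object.class.respond_to?(:from_pack)
end

def pack_object(object)
  raise ArgumentError, "#{object.class} is not packable" unless packable?(object)

  [object.class.name, object.as_pack] # the class name travels with the data
end

def unpack_object(packed)
  class_name, payload = packed
  Object.const_get(class_name).from_pack(payload)
end

packed = pack_object(Task.new("write part three", false))
task = unpack_object(packed)
puts packed.inspect
puts task.title
```

An object that lacks either method is rejected up front, which keeps the refusal behavior that made MessagePack attractive in the first place.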

The packable extension type worked great, but as we worked on migrating existing cache objects, we found many that followed a similar pattern, caching either Structs or T::Structs (Sorbet’s typed struct). Structs are simple objects defined by a set of attributes, so the packable methods were each very similar since they simply worked from a list of the object’s attributes. To make things easier, we extracted this logic into a module that, when included in a struct class, automatically makes the struct packable. Here’s the module for Struct:

The serialized data for the struct instance includes an extra digest value (26450) that captures the names of the struct’s attributes. We use this digest to signal to the Object extension type deserialization code that attribute names have changed (for example in a code refactor). If the digest changes, the cache treats cached data as stale and regenerates it:

Simply by including this module (or a similar one for T::Struct classes), developers can cache struct data in a way that’s robust to future changes. As with our handling of class name changes, this approach works because we can afford to throw away cache data that has become stale.
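A module along these lines might look like the sketch below. It is not the module from the article: the name PackableStruct, the MD5-based digest, and returning nil for a stale payload are all assumptions chosen to keep the example small.

```ruby
require "digest"

module PackableStruct
  def self.included(base)
    base.extend(ClassMethods)
  end

  module ClassMethods
    # Short fingerprint of the attribute names. It changes whenever a member
    # is added, removed, or renamed.
    def pack_digest
      Digest::MD5.hexdigest(members.join(","))[0, 8]
    end

    def from_pack(packed)
      digest, *values = packed
      return nil unless digest == pack_digest # stale payload: treat as a miss

      new(*values)
    end
  end

  def as_pack
    [self.class.pack_digest, *to_a]
  end
end

Money = Struct.new(:amount, :currency) { include PackableStruct }

packed = Money.new(5, "CAD").as_pack
puts Money.from_pack(packed).inspect

# The same payload read by a struct whose attributes were renamed in a refactor.
RenamedMoney = Struct.new(:value, :currency) { include PackableStruct }
puts RenamedMoney.from_pack(packed).inspect
```

The digest costs a few bytes per payload, and in exchange a refactor can never silently assign old values to the wrong attributes.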

The struct modules accelerated the pace of our work, enabling us to quickly migrate the last objects in the long tail of cached types. Having confirmed from our logs that we were no longer serializing any payloads with Marshal, we took the final step of removing it entirely from the cache. We’re now caching exclusively with MessagePack.

Safe by Default

With MessagePack as our serialization format, the cache in our core monolith became safe by default. Not safe most of the time or safe under some special conditions, but safe, period. It’s hard to overstate the importance of a change like this to the stability and scalability of a platform as large and complex as Shopify’s.

For developers, having a safe cache brings peace of mind: there is one less unexpected thing that can happen when they ship their refactors. This makes such refactors (particularly large, challenging ones) more likely to happen, improving the overall quality and long-term maintainability of our codebase.

If this sounds like something that you’d like to try yourself, you’re in luck! Most of the work we put into this project has been extracted into a gem called Shopify/paquito. A migration process like this will never be easy, but Paquito incorporates the learnings of our own experience. We hope it will help you on your journey to a safer cache.

Chris Salzberg is a Staff Developer on the Ruby and Rails Infra team at Shopify. He is based in Hakodate in the north of Japan.
