Scaling cross-team contributions to a native mobile app

Flagship applications are home to myriad features that serve different parts of your user base. Over time, adding new features can unintentionally reduce velocity, create single points of failure, and produce monoliths that are hard to navigate. Such flagship apps are built from the contributions of multiple teams, each with varying degrees of familiarity with the codebase.

Onboarding a new team to such a codebase is expensive and is usually ruled out for reasons like:

- Onboarding costs: It simply takes too long to bring someone up to speed with the codebase so that they can contribute. The cost of training and mentoring them is often as high as, if not higher than, the cost of implementing the feature itself. This investment does not make sense for someone who will develop one feature and then move back to their team.

- Maintenance costs: Who will maintain this feature once the temporary developer has returned to their original team?

- Quality: What risk does the new feature, developed by someone without a strong understanding of the architecture and codebase, pose to the main application?

We’ve seen this play out before with many enterprise apps, including the Salesforce Mobile app. But there’s a way to enable contributions from other teams while addressing the above challenges. This blog outlines the strategy we used within Salesforce to make it happen: an approach we call our “Plug-in Architecture.”

Plug-in architecture

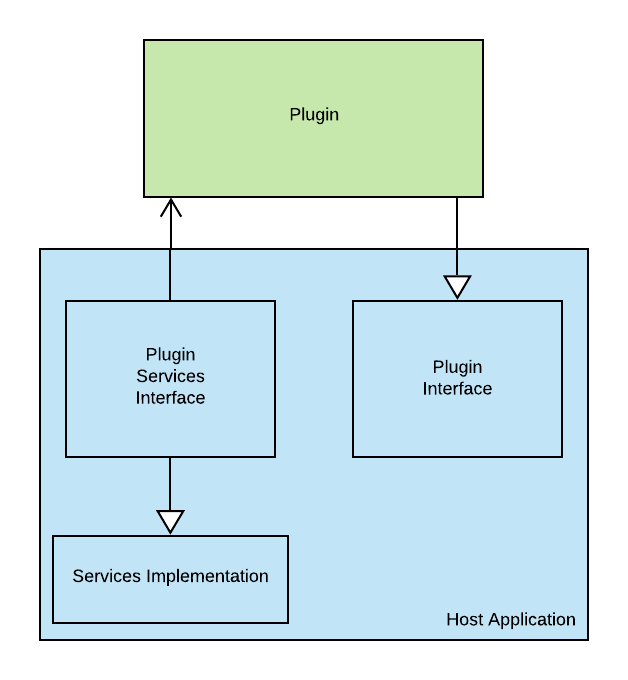

In a traditional plug-in architecture, third-party developers create plug-ins that are installed separately from the host application. These plug-ins extend the capability of the host application by adding new features. Typically, the architecture works like this:

- The host application defines a set of interfaces that the plug-in must implement.

- The host application injects a set of interfaces into the plug-in, which the plug-in may use.

- The plug-in registers itself when it is installed, and the host application then loads it.

Figure: dependencies between plug-ins and implementations.

Keep in mind when using this architecture:

- The host application shouldn’t depend on a particular plug-in, since users might not install it.

- The host application isn’t aware of a plug-in’s details; it knows only that the plug-in implements the necessary interface.
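The register, inject, and load steps above can be sketched as follows. This is a minimal illustration in plain Java, not any real plug-in framework; `HostServices`, `Plugin`, `PluginRegistry`, and `GreetingPlugin` are hypothetical names chosen for the example.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical set of services the host injects into each plug-in.
interface HostServices {
    void log(String message);
}

// Hypothetical contract that every plug-in must implement.
interface Plugin {
    String name();
    void start(HostServices services); // the host injects its services here
}

// Host-side registry: it depends only on the Plugin interface,
// never on a concrete plug-in class.
final class PluginRegistry {
    private final List<Plugin> plugins = new ArrayList<>();

    void register(Plugin plugin) {
        plugins.add(plugin);
    }

    // Starts every registered plug-in and returns how many were loaded.
    // With no plug-ins registered this is simply a no-op.
    int loadAll(HostServices services) {
        for (Plugin plugin : plugins) {
            plugin.start(services);
        }
        return plugins.size();
    }
}

// Example plug-in: it sees the host only through HostServices.
final class GreetingPlugin implements Plugin {
    @Override
    public String name() {
        return "greeting";
    }

    @Override
    public void start(HostServices services) {
        services.log("plug-in started: " + name());
    }
}

final class RegistryDemo {
    public static void main(String[] args) {
        HostServices services = message -> System.out.println("[host] " + message);
        PluginRegistry registry = new PluginRegistry();
        registry.register(new GreetingPlugin());
        registry.loadAll(services);
    }
}
```

Because `PluginRegistry` works the same whether zero or many plug-ins are registered, the host never needs to reference a particular plug-in.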

Plug-in architecture and mobile

Engineers create, install, and distribute software packages differently for mobile and desktop environments. In light of these differences, let’s discuss what characteristics of plug-in architecture apply to a mobile app development environment.

Plug-ins are the perfect example of modular architecture. They don’t have any critical dependencies because the host application can run without them. In many cases, the host application doesn’t know what the plug-in does, only that it supports the prescribed interface. Modularity is inherent to most recommended architectures, so your application is probably already structured like this.

Plug-ins implement an interface defined by the host app. This interface is an abstraction of what the app needs from the plug-in, and it’s the only dependency between the app and a plug-in. Correctly defining this interface is the most challenging aspect of plug-in architecture. But because all plug-ins conform to this interface, app developers don’t need to write code for integrating a specific plug-in. As mentioned earlier, the app may not even be aware of all the potential plug-ins that a user might install.

The app provides an interface to the plug-in. This interface lets the plug-in interact with the host application. The plug-in developers only need to learn this interface and can ignore any other complexity of the host application. This flattens the learning curve for plug-in developers, since the interface is only an abstraction of the functionality a plug-in might need from the application, like sending network requests, storing data, or presenting UI.

Some restrictions of the plug-in architecture, enforced by necessity in a desktop environment, may seem like they could be ignored or relaxed in a mobile application. But it’s best to develop as if these restrictions were still in place.

In desktop app development, the host app must run without a particular plug-in, since there’s no guarantee a user will install it. But, in mobile development, the app developer bundles the plug-in’s framework or Android Archive (AAR) with the main app. Plug-ins have to be included at build time and deployed with the main app bundle; you can’t install plug-ins independently of the main application. Since the host application is aware of all available plug-ins at build time, you might think mobile apps don’t need to support graceful degradation without the plug-in, but that’s not the case.

For example, suppose that a developer builds the application to depend directly on a plug-in, since the plug-in is “already there.” This dependency breaks the abstraction and makes the application rely on specific versions of the plug-in, which forces the app team to be involved in any maintenance decisions for it. The dependency also implies integration work beyond adding the plug-in to the application, as some code in the app references the specific plug-in. A direct reference extends the work required for a plug-in beyond the interface boundaries.

This is why it’s best practice to gate features, allowing the developer to toggle them on or off if required. If the application cannot gracefully handle the absence of a plug-in, then there is no real opportunity to disable it via a feature toggle.
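One way to picture graceful degradation behind a feature toggle is the sketch below. It is an assumption-laden illustration in plain Java: `Feature`, `FeatureHost`, the feature ids, and the fallback message are all hypothetical, and a real app would read toggles from its own configuration system.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;
import java.util.Set;

// Hypothetical minimal view of a feature supplied by a plug-in.
interface Feature {
    String render();
}

// Hypothetical host-side lookup that treats "toggled off" and
// "not bundled" the same way: the feature is simply absent.
final class FeatureHost {
    private final Map<String, Feature> bundled = new HashMap<>();
    private final Set<String> enabledIds;

    FeatureHost(Set<String> enabledIds) {
        this.enabledIds = enabledIds;
    }

    void bundle(String id, Feature feature) {
        bundled.put(id, feature);
    }

    // Empty when the toggle is off or no plug-in was bundled under this id.
    Optional<Feature> resolve(String id) {
        if (!enabledIds.contains(id)) {
            return Optional.empty();
        }
        return Optional.ofNullable(bundled.get(id));
    }

    // The host falls back to a placeholder instead of failing.
    String open(String id) {
        return resolve(id).map(Feature::render).orElse("Feature unavailable");
    }
}

final class ToggleDemo {
    public static void main(String[] args) {
        FeatureHost host = new FeatureHost(Set.of("calendar"));
        host.bundle("calendar", () -> "Calendar screen");
        host.bundle("maps", () -> "Maps screen");

        System.out.println(host.open("calendar")); // toggle on, plug-in bundled
        System.out.println(host.open("maps"));     // bundled, but toggled off
        System.out.println(host.open("chat"));     // never bundled
    }
}
```

The host code path for a disabled plug-in and a missing plug-in is identical, which is what makes the toggle safe to flip.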

Implementing a plug-in-like architecture

So how can we replicate the benefits of a plug-in architecture in a mobile app? Most experienced developers are familiar with the values of modularity, loose coupling, and high cohesion. For this article, we won’t focus on these specifically, since they follow largely from the following two interfaces:

- Service Provider Interface, which exposes services to the plug-in.

- Feature Provider Interface, which exposes the features implemented by the plug-in to the host app.

The Service Provider Interface (SPI)

The Service Provider Interface (SPI) is an abstraction that encapsulates all the services the application makes available to the plug-in. This interface allows a plug-in to treat the app as a platform. It helps avoid duplicating common services such as logging, network requests, instrumentation, and others within the plug-in. The application injects the SPI into the plug-in at initialization.

Although Apple and Google both provide network request libraries, most enterprise-class apps have a higher-level network layer responsible for supplying the appropriate cookies, request parameters, or other domain-specific overhead. Rather than recreate this in each plug-in, a host application can expose an interface as part of the SPI. Let’s consider this example and see how we might implement such an SPI.

The following code snippet is a simplistic example of how a host application might implement this abstraction.
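As a minimal sketch of that idea, written in plain Java so it runs without a mobile SDK: the names `NetworkService`, `ServiceProvider`, `Transport`, `HostNetworkService`, and `WeatherPlugin`, the `client` parameter, and the example URL are illustrative assumptions, not the Salesforce app’s actual API.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.stream.Collectors;

// Hypothetical network abstraction exposed to plug-ins through the SPI.
interface NetworkService {
    // GET a path relative to the host's API root and return the response body.
    String get(String path, Map<String, String> query);
}

// Hypothetical SPI: the bundle of services the host injects into a plug-in.
interface ServiceProvider {
    NetworkService network();
}

// Stand-in for the platform HTTP client that the host owns.
interface Transport {
    String send(String url, Map<String, String> headers);
}

// Host-side implementation: it supplies the session cookie and default
// parameters so that plug-ins never have to know about them.
final class HostNetworkService implements NetworkService {
    private final String baseUrl;
    private final String sessionCookie;
    private final Transport transport;

    HostNetworkService(String baseUrl, String sessionCookie, Transport transport) {
        this.baseUrl = baseUrl;
        this.sessionCookie = sessionCookie;
        this.transport = transport;
    }

    @Override
    public String get(String path, Map<String, String> query) {
        Map<String, String> params = new LinkedHashMap<>(query);
        params.put("client", "host-app"); // assumed domain-specific parameter
        // URL encoding is omitted to keep the sketch short.
        String queryString = params.entrySet().stream()
                .map(e -> e.getKey() + "=" + e.getValue())
                .collect(Collectors.joining("&"));
        return transport.send(baseUrl + path + "?" + queryString,
                Map.of("Cookie", sessionCookie));
    }
}

// A plug-in receives the SPI at initialization and uses nothing else.
final class WeatherPlugin {
    private final ServiceProvider services;

    WeatherPlugin(ServiceProvider services) {
        this.services = services;
    }

    String forecast(String city) {
        return services.network().get("/forecast", Map.of("city", city));
    }
}

final class SpiDemo {
    public static void main(String[] args) {
        // Fake transport that echoes the request instead of hitting a network.
        Transport fake = (url, headers) -> url + " [" + headers.get("Cookie") + "]";
        NetworkService network =
                new HostNetworkService("https://example.invalid/api", "sid=abc", fake);
        WeatherPlugin plugin = new WeatherPlugin(() -> network);
        System.out.println(plugin.forecast("Paris"));
    }
}
```

The plug-in asks only for `/forecast` with a city; the cookie and the extra parameter are added by the host, so changing them later requires no change to any plug-in.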

The Feature Provider Interface (FPI)

While the SPI abstracts the application’s services to the plug-in, the Feature Provider Interface (FPI) is its counterpart, abstracting the services exposed by the plug-in. The SPI allows the plug-ins to run on top of the host application and avoid bloating the plug-in with redundant service implementations. The FPI, on the other hand, is how the plug-in extends the host application.

A new feature might consist of a root view controller (iOS) or fragment (Android) from which further interaction occurs. The following code snippet demonstrates what an FPI might look like for obtaining the view controller from the plug-in.
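A minimal sketch of such an interface, again in plain Java with stand-in types: `Screen` is a placeholder for the platform’s view controller or fragment, and `FeatureProvider`, `CalendarFeature`, `Navigator`, and the `calendar/` destination scheme are hypothetical names used only for illustration.

```java
import java.util.List;
import java.util.Optional;

// Placeholder for a platform screen type (a view controller on iOS,
// a fragment on Android).
interface Screen {
    String title();
}

// Hypothetical FPI: what the host needs from any feature plug-in.
interface FeatureProvider {
    // True when this plug-in owns the given destination.
    boolean canHandle(String destination);

    // Returns the root screen for a destination this plug-in handles.
    Screen screenFor(String destination);
}

// Example plug-in that handles destinations under "calendar/".
final class CalendarFeature implements FeatureProvider {
    private static final String PREFIX = "calendar/";

    @Override
    public boolean canHandle(String destination) {
        return destination.startsWith(PREFIX);
    }

    @Override
    public Screen screenFor(String destination) {
        if (!canHandle(destination)) {
            throw new IllegalArgumentException("Unsupported destination: " + destination);
        }
        return () -> "Calendar: " + destination.substring(PREFIX.length());
    }
}

// Host-side navigation: asks each provider in turn and never names
// a concrete plug-in.
final class Navigator {
    private final List<FeatureProvider> providers;

    Navigator(List<FeatureProvider> providers) {
        this.providers = providers;
    }

    Optional<Screen> navigate(String destination) {
        for (FeatureProvider provider : providers) {
            if (provider.canHandle(destination)) {
                return Optional.of(provider.screenFor(destination));
            }
        }
        return Optional.empty(); // no plug-in claims this destination
    }
}

final class FpiDemo {
    public static void main(String[] args) {
        Navigator navigator = new Navigator(List.of(new CalendarFeature()));
        navigator.navigate("calendar/today")
                .ifPresent(screen -> System.out.println(screen.title()));
        System.out.println(navigator.navigate("maps/home").isPresent());
    }
}
```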

The plug-in implements this interface to respond affirmatively when the destination is one it handles, and to return the appropriate view controller when requested.

Our experience with plug-in architecture

In late 2018, another team at Salesforce reached out to us with a simple question: “How can we get our feature included in the Salesforce mobile app?” Typically, such a question has an easy answer. “File a request, and we’ll get back to you once it’s prioritized.” But as more and more teams had similar requests, it became apparent that limiting feature contributions to the Salesforce app development team was not going to scale. So we started asking a new question: “How can we get these features included in the Salesforce app despite prioritization conflicts?” This is what led to the plug-in architecture discussed in this article.

One obvious solution to the feature request was asking the requesting team to spend some time in our mobile development team to do the integration themselves. But as described above: who would maintain it after integration? Who would train their team on the intricacies of our flagship application code? How could we ensure the changes maintain the high quality that our customers expect from us?

By introducing a plug-in-like architecture, we could reshape how external teams contribute native features to our mobile app. The Salesforce app team and the other team sat down together to define the shape of the plug-in architecture and its APIs. A few months later, the initial adopter had successfully integrated their feature into the Salesforce app. More importantly, we had established a successful model in which teams can push updates to their feature anytime they want, with no involvement from the Salesforce app team, while maintaining the same level of quality.

In late 2019, a company recently acquired by Salesforce wanted to do the same thing. This team had no inside knowledge of our flagship application or of the intricacies of the Salesforce Platform. Nevertheless, the plug-in architecture let them integrate their product into the Salesforce app in record time. They achieved this because they could focus on a finite and straightforward set of APIs.

While these external integrations were happening, we decided to adopt the same plug-in model for features of the flagship application developed by members of the Salesforce app team. The idea was to treat features of the Salesforce app the same as external integrations. The architecture enforced a clear boundary for the new feature code, which let developers focus on the value added by the new feature instead of the main application code. The first internal feature written using the plug-in architecture was for calendar integration and management. The developer worked on the feature for a few days and came back delighted by the simplifications from the plug-in architecture. For example, a secure persistence SPI trivialized storing encrypted data, because the plug-in SPI provided an interface for doing just that. It was up to the host app layer to worry about the implementation. By developing her feature as a plug-in, she could also isolate it in an independent module, making it easier to test and maintain.

The team is reaping the benefits, with several features in development using this architecture across iOS and Android platforms. The plug-in architecture transforms the Salesforce app into a native development platform with numerous plug-ins running on top of it. This transformation reduces the time it takes to bring features to our users.

Additionally, all the plug-ins developed on this architecture can easily be ported, without changes, to another application if needed, provided the other application implements the required plug-in APIs. This portability brings tremendous flexibility for our teams, who can simultaneously deploy their features in their application and the Salesforce app.

Revisiting external contributions

At the outset of this post, we discussed a scenario where other teams had feature requests for a flagship application, even offering resources to develop them. The app team rejected this offer for the following reasons: onboarding costs, maintenance costs, and quality concerns from letting an external entity modify the codebase. Let’s look at how the above architectural proposal addresses each of these in turn.

Onboarding Costs

The plug-in architecture significantly reduces onboarding costs by shrinking the surface area of required knowledge down to the SPI and FPI. In our case, although we offer office-hours-type support, most of the education on the SPI and FPI is done through simple interface documentation.

Maintenance Costs

Since plug-ins are modular and exposed as binary modules (frameworks, AARs), the development team that creates them also owns and maintains them. The host app team doesn’t incur any of the maintenance costs.

Quality Concerns

As the external team is not modifying the host app code, they pose little risk to the quality of the host app. The plug-in’s impact on the host app is constrained by what the host app exposes via the SPI. The plug-in itself must meet quality standards, as consumers will not distinguish between a plug-in-provided feature and a feature built by the app team. However, the concern here was not the quality of the plug-in feature but the risk of an external contributor modifying the host app code. Plus, due to the modular nature of plug-ins, if coupled with a feature toggle solution, a plug-in can be entirely disabled if there are quality issues.

Conclusion

Developers initially worried that this approach would be overly restrictive, and were surprised to find that, rather than creating friction and slowing development, the plug-in architecture accelerated it. Developing features as plug-ins shortened the time needed to integrate features from external teams and made features easier for internal developers to build and integrate. We find that once developers create a feature with the plug-in architecture, they have an “Aha!” moment and become true believers. If your team is looking for ways to enable scalable cross-team contributions to your mobile app, we recommend following a similar approach.