Enhancing Personalized CRM Communication with Contextual Bandit Strategies

In the competitive landscape of CRM (Customer Relationship Management), the effectiveness of communications like emails and push notifications is pivotal. Optimizing these communications can significantly enhance user engagement, which is crucial for maintaining customer relationships and driving business growth. At Uber, these communications typically begin with creating content using an email template, which often includes several components: a subject line, pre-header, header, banner, body, and more. Among these, the subject line and pre-header matter most for email open rates, because they’re often the first elements a customer sees. To overcome the limitations of static optimization methods like A/B testing, we use contextual bandits, an extension of the multi-armed bandit approach that conditions decisions on context.

Why Is This a Contextual Bandit Problem?

Each customer’s interaction with email content is unique, influenced by their individual preferences, past behaviors, and the specific context at the time of receiving the message. For example, some users might be drawn to concise, action-oriented subject lines, while others may prefer detailed and informative ones with emojis. Similarly, the effectiveness of pre-headers can vary significantly across different user segments.

The traditional A/B test method allocates fixed traffic to each creative design and scales the best variant after the test concludes. This approach has three drawbacks. First, obtaining meaningful results requires a significant sample size, which limits the number of designs that can be tested. For example, a traditional A/B test often takes 4-6 weeks to compare just 2-3 variants, and scaling to 100 or more variants could take years due to the time needed to collect enough data for statistical significance. In contrast, a multi-armed bandit dynamically reallocates traffic to better-performing variants, allowing experiments with 100 or more variants to converge in just weeks. Second, insights are only available at the end of the test, resulting in prolonged exposure to sub-optimal experiences, which hurts campaign performance and slows iteration. Lastly, in dynamic environments, user preferences may shift over time, rendering A/B test results less relevant by the end of the experiment.

This variability across users is what makes content selection a classic contextual bandit problem, where the context includes each user’s specific preferences and creative content information. A contextual bandit approach allows us to dynamically adjust creative content to optimize user engagement. By treating each combination of email elements (shown in Figure 1) as an arm of the bandit, and the user’s context as the environment, we can apply machine learning models to predict and serve the email variant that maximizes the open rate.

Figure 1: The illustration of email variants generated by combining different alternate values of each component.
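
As a minimal sketch of how combining component alternatives yields bandit arms, the Python snippet below enumerates every combination with `itertools.product`. The function name, component names, and example texts are illustrative assumptions, not Uber’s actual templates.

```python
from itertools import product


def enumerate_variants(components):
    """Return one dict per combination of component alternatives (one arm each)."""
    names = list(components)
    return [
        dict(zip(names, combo))
        for combo in product(*(components[name] for name in names))
    ]


# Hypothetical alternatives for two components of an email template.
components = {
    "subject_line": ["Your ride is waiting", "Save on your next trip"],
    "pre_header": ["Tap to book now", "Limited-time offer", "See what's new"],
}

variants = enumerate_variants(components)
print(len(variants))  # 2 subject lines x 3 pre-headers = 6 arms
```

The number of arms grows multiplicatively with the number of alternatives per component, which is why fixed-traffic testing becomes slow as templates gain more options.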

Given these considerations, a contextual bandit algorithm continually learns from historical user data and progressively hones its strategy to personalize content more effectively. This approach enhances the relevance of each communication and drives higher engagement by tailoring messages to meet diverse user expectations.

As the first step of our solution, we adopted GPT embeddings to generate variant content features, which makes the email text usable by traditional ML models. We also developed an XGBoost™ model, paired with the SquareCB algorithm, to tackle the cross-campaign contextual bandit problem. While XGBoost specializes in making predictions, SquareCB focuses on balancing exploration and exploitation. As part of this work, we integrated the new model into Uber’s content optimization platform to improve user engagement.

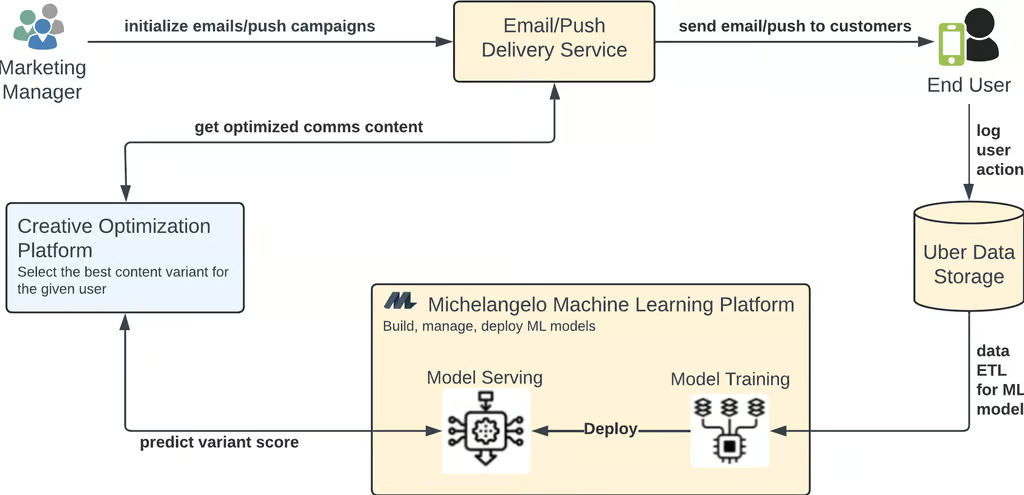

Figure 2 shows the simplified architecture of Uber’s personalized CRM communication solution using contextual bandit strategies. Marketing managers, the primary users of this system, collect CRM communication audiences, set communication schedulers, and create communication content templates before the personalized CRM communications are sent to end users.

Briefly, when the communications are initiated, a selected user is ingested into the communication scheduler, and the email content for this user is rendered based on their contextual features. The model takes as input the user contextual features and the GPT embedding of each email variant, and predicts the probability that the user opens the email for that variant. GPT embeddings convert email content into numerical representations that ML models can process. The email variant chosen from these predictions, typically the one with the top predicted probability but subject to SquareCB post-processing (described below), is rendered and sent to the end user. Note that the Creative Optimization Platform (COP) in Figure 2 is our optimization tool that leverages multi-armed bandit and contextual bandit techniques to select the most effective communication variant.

The user’s interactions with the communication are logged and stored in Uber’s data storage. This user action data is extracted and transformed to train/retrain machine learning models.

Our solution uses multiple platforms at Uber to send optimized communication content to end users, collect feedback and user action data, and train and serve optimization models to increase user engagement. These components are integrated into a scalable, flexible, and reliable personalized content optimization platform for Uber CRM.

Figure 2: The architecture of the personalized CRM communication solution with contextual bandit strategies.

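LinUCB is a contextual bandit algorithm that assumes a linear relationship between reward and contextual features. It balances exploration and exploitation by using the closed-form prediction confidence available in linear regression. At each time step, the algorithm selects the arm that maximizes the upper confidence bound of the reward prediction, as in the standard LinUCB selection rule:

$$a_t = \arg\max_{a} \left( x_t^\top \hat{\theta}_a + \alpha \sqrt{x_t^\top A_a^{-1} x_t} \right)$$

where $a_t$ is the arm selected at step $t$, $x_t$ is the contextual feature vector, $\hat{\theta}_a$ is the estimated coefficient vector for arm $a$, $\alpha$ is the exploration parameter, and $A_a = X_a^\top X_a + I$, with $X_a$ the matrix of contextual features observed for arm $a$.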

This approach is conceptually straightforward and practical to implement. In application, the model is typically trained in batches due to delays in user feedback, which renders real-time online learning impractical. A further advantage of LinUCB is the computational efficiency of the closed-form formulas, which allows for model training on the entire historical dataset at each time step. This characteristic makes the model stateless, facilitating efficient updates and scalability.
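
The upper-confidence-bound selection described above can be sketched in a few lines of dependency-free Python. This is an illustration under stated assumptions, not the production implementation: the class and function names, the default `alpha`, and the toy data are hypothetical. It also keeps $A^{-1}$ up to date with a Sherman-Morrison rank-one update rather than refitting on the full history. Replaying the entire historical dataset through `update` yields the same $A$ and coefficient estimate as a batch fit.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def matvec(m, v):
    return [dot(row, v) for row in m]


class LinUCBArm:
    """Ridge-regression state for one arm: A = X^T X + I and b = X^T r."""

    def __init__(self, dim, alpha=1.0):
        self.alpha = alpha  # exploration strength (hypothetical default)
        self.a_inv = [[float(i == j) for j in range(dim)] for i in range(dim)]
        self.b = [0.0] * dim

    def update(self, x, reward):
        # Sherman-Morrison: update A^{-1} in place after A += x x^T.
        ax = matvec(self.a_inv, x)
        denom = 1.0 + dot(x, ax)
        for i in range(len(x)):
            for j in range(len(x)):
                self.a_inv[i][j] -= ax[i] * ax[j] / denom
        self.b = [bi + reward * xi for bi, xi in zip(self.b, x)]

    def ucb(self, x):
        theta = matvec(self.a_inv, self.b)  # theta = A^{-1} b
        width = math.sqrt(max(dot(x, matvec(self.a_inv, x)), 0.0))
        return dot(theta, x) + self.alpha * width


def select_arm(arms, x):
    """Return the index of the arm with the highest upper confidence bound."""
    return max(range(len(arms)), key=lambda i: arms[i].ucb(x))


arms = [LinUCBArm(dim=2), LinUCBArm(dim=2)]
arms[0].update([1.0, 0.0], reward=1.0)  # arm 0 was opened once in this context
chosen = select_arm(arms, [1.0, 0.0])
```

In this toy run, arm 0 has one positive observation in the queried context, so its estimated reward plus its narrower confidence width still exceeds the pure-uncertainty bonus of the untried arm 1.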

To capture more complex relationships between contextual features and rewards, we employ XGBoost in conjunction with the SquareCB algorithm shown in Figure 3. Unlike LinUCB, which assumes a linear relationship, XGBoost can model nonlinear interactions. Additionally, by incorporating text embeddings into the input features, XGBoost can leverage global information and learn from cross-campaign data.

However, estimating prediction intervals for XGBoost outputs is challenging. Instead of directly modeling the uncertainty associated with XGBoost predictions, we adopt a more straightforward approach. The SquareCB algorithm addresses this by assigning a probability to each action that’s inversely proportional to the gap between the action’s score and that of the best-performing arm.

Figure 3: An overview of the XGBoost model and SquareCB for optimized variant selection.
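
For reference, the inverse-gap weighting commonly used to describe SquareCB can be written as follows. The symbols are assumptions introduced here: $K$ is the number of variants, $\hat{y}_a$ is the predicted open probability for variant $a$, $a^\ast$ is the top-scoring variant, and $\gamma > 0$ is an exploration parameter. This is the textbook form and not necessarily the exact variant used in production.

```latex
p_a = \frac{1}{K + \gamma \left( \hat{y}_{a^\ast} - \hat{y}_a \right)} \quad \text{for } a \neq a^\ast,
\qquad
p_{a^\ast} = 1 - \sum_{a \neq a^\ast} p_a
```

A larger $\gamma$ concentrates probability on the top-scoring variant, while a smaller $\gamma$ spreads traffic more evenly across variants.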

A key advantage of the SquareCB algorithm is its flexibility with respect to the prediction model. It doesn’t depend on the specific type of prediction model used, making it well-suited for integration with XGBoost. This adaptability allows for efficient and effective exploration and exploitation strategies, even when using complex models like XGBoost.

A general solution using text embedding eliminates the need for separate models for each text type, allowing any new template or variant to be input for predictions. Once developed, the XGBoost and SquareCB model works across various templates and campaigns. In contrast, LinUCB models require distinct linear regressions for each variant and face a cold-start issue with new templates. They also rely on linear assumptions about the relationship between rewards and features, which limit their generalizability despite potential improvements.

The XGBoost and SquareCB model is comprehensive but may not be ideal when data is limited and a campaign-specific solution is needed. In these cases, lightweight models like LinUCB can be more appropriate and easier to deploy, and can outperform general solutions on simpler problems.

User Contextual Features

The model is designed to analyze and incorporate various user-level behavior features to capture and understand user preferences regarding creative design effectively. By examining a user’s historical interactions with Uber’s CRM communications, the model can gain insights into their preferences for different types of creative content. This includes understanding which types of messages, visuals, or promotions resonate most with the user based on their past engagement.

In addition to CRM interactions, the model also considers users’ activity patterns on the Uber app itself. This includes how often they use the app, which features they engage with most, and their overall behavior within the app. By integrating these two sources of data—CRM communication history and app activity—the model can tailor and optimize the user experience. It aims to deliver the most relevant and appealing creative designs to each user, ultimately enhancing user satisfaction and engagement with the platform.

Variant Content Features

A CRM communication template can include various components optimized for performance, such as the subject line, pre-header, body text, images, and more. Each component can have multiple alternatives, resulting in different combinations, known as variants, which form the final communication content for the end user. The machine learning models we focus on are designed to predict the open rates of these communications based on their content. These models learn the relationship between user contextual features and the features of the variant content. Figure 4 outlines how to generate these variant content features in three stages.

Figure 4: Three stages of variant content feature generation.

- Stage 1: Variant content generation. Using emails as an example, our primary goal is to increase the open rate of communications sent to end users. A higher open rate is essential for boosting click-through rate (CTR). The two main components that influence the open rate are the subject line and the pre-header, as these are the first elements a user notices. To generate the variant content, we combine the subject line and pre-header texts, including any emojis, into a single string for embedding generation.

- Stage 2: Variant content embedding generation. The concatenated text of a variant serves as the input for a pre-trained GPT model (text-embedding-ada-002) to generate embeddings. The model outputs a 1536-dimensional floating-point vector as the embedding of each input text. Note that emojis are also considered in this embedding generation.

- Stage 3: Transform embeddings to features. The high dimensionality of the variant content embedding requires significant computational resources for open rate prediction using the Michelangelo OPS (Online Prediction Service). Additionally, the input text, consisting only of the subject line and pre-header, typically has a limited number of words. Therefore, using such a high-dimensional embedding as model features is impractical. We apply feature reduction using PCA (Principal Component Analysis) to generate the final feature vector of the variant content with a much lower dimension of 128. Our experiments show that this dimension can be further reduced.
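
The three stages can be sketched end to end as below. Several things here are assumptions made to keep the example self-contained: `fake_embedding` is a hypothetical stand-in that returns a deterministic pseudo-random vector instead of calling an embedding model, the dimensions are shrunk from 1536 and 128 to 16 and 4, and PCA is computed with a simple power iteration. A library implementation of PCA would normally be used in practice.

```python
import math
import random


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def variant_text(subject_line, pre_header):
    # Stage 1: combine subject line and pre-header into one string.
    return f"{subject_line} {pre_header}"


def fake_embedding(text, dim):
    # Stage 2 stand-in (hypothetical): deterministic pseudo-random vector per text.
    rng = random.Random(text)
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


def fit_pca(rows, k, iters=200, seed=0):
    """Stage 3: return (mean, components) with k unit-length principal directions."""
    n, d = len(rows), len(rows[0])
    mean = [sum(r[j] for r in rows) / n for j in range(d)]
    data = [[r[j] - mean[j] for j in range(d)] for r in rows]
    rng = random.Random(seed)
    components = []
    for _ in range(k):
        v = [rng.gauss(0.0, 1.0) for _ in range(d)]
        for _ in range(iters):
            proj = [dot(r, v) for r in data]  # X v
            w = [sum(p * r[j] for p, r in zip(proj, data)) for j in range(d)]  # X^T X v
            norm = math.sqrt(dot(w, w))
            v = [x / norm for x in w]
        components.append(v)
        # Deflate: remove the found direction before searching for the next one.
        deflated = []
        for r in data:
            p = dot(r, v)
            deflated.append([x - p * vj for x, vj in zip(r, v)])
        data = deflated
    return mean, components


def transform(row, mean, components):
    centered = [x - m for x, m in zip(row, mean)]
    return [dot(centered, c) for c in components]


subjects = ["Your ride is waiting", "Save on your next trip"]
pre_headers = ["Tap to book now", "Limited-time offer", "See what's new", "Just for you"]
texts = [variant_text(s, p) for s in subjects for p in pre_headers]
embeddings = [fake_embedding(t, dim=16) for t in texts]  # real embeddings: 1536 dims
mean, components = fit_pca(embeddings, k=4)  # real target: 128 dims
features = [transform(e, mean, components) for e in embeddings]
```

Each entry of `features` is the reduced vector that would be passed to the prediction model alongside the user contextual features.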

User behavior features, as previously discussed, may not consistently reflect users’ creative design preferences. However, using extensive historical CRM communication data, alongside user feedback on these interactions, enables the training of user embeddings that can effectively capture overall creative preferences. Specifically, historical variant content features can be aggregated and input into a DNN (deep neural network) encoder to generate these embeddings. These embeddings can then be used in conjunction with other features for CRM Contextual Bandit modeling. Additionally, they can be applied to various other creative optimization scenarios, including in-app user engagement.

Post-Processing for XGBoost Optimization

Automated and modularized post-processing functions have been introduced in COP to implement exploration in our XGBoost-based optimization strategy. Unlike the LinUCB model, which inherently supports exploration-exploitation, the XGBoost model lacks an exploration component. Without a post-processing function, our solution to the contextual bandit problem would effectively become a solution to the related regression problem.

We adopted SquareCB as the post-processing method for XGBoost prediction results across all campaign variants. The specifics of this method are detailed in its original paper and the revised version described in this lecture. Based on the predicted scores returned from Michelangelo OPS, new scores are calculated using the proposed algorithm, followed by random sampling to select variants according to these new scores. By carefully tuning the exploration parameters, we ensure that a certain percentage of the selected variants aren’t the highest-scoring ones from the XGBoost model, thereby incorporating a necessary degree of exploration into our strategy.
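
A minimal sketch of this post-processing step, assuming the commonly described inverse-gap-weighting form of SquareCB: predicted scores are converted into selection probabilities, and one variant is then sampled from them. The function names, the `gamma` parameter name, and the example scores are illustrative assumptions. The exact variant and tuning used in production are not specified here.

```python
import random


def squarecb_probabilities(scores, gamma):
    """Map predicted scores (higher is better) to selection probabilities."""
    k = len(scores)
    best = max(range(k), key=scores.__getitem__)
    probs = [0.0] * k
    for a in range(k):
        if a != best:
            # Smaller gap to the best score -> larger exploration probability.
            probs[a] = 1.0 / (k + gamma * (scores[best] - scores[a]))
    # The best-scoring variant receives the remaining probability mass.
    probs[best] = 1.0 - sum(probs)
    return probs


def sample_variant(scores, gamma, rng=random):
    """Randomly pick a variant index according to the SquareCB probabilities."""
    probs = squarecb_probabilities(scores, gamma)
    return rng.choices(range(len(scores)), weights=probs, k=1)[0]


# Hypothetical predicted open probabilities for three variants.
scores = [0.20, 0.35, 0.32]
probs = squarecb_probabilities(scores, gamma=100.0)
choice = sample_variant(scores, gamma=100.0, rng=random.Random(7))
```

With these example scores, the second variant keeps most of the probability mass, the close third variant receives a meaningful share of exploration traffic, and the clearly weaker first variant is sampled only rarely. Lowering `gamma` shifts more traffic toward exploration.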

Note that post-processing can be handled using different approaches, such as SquareCB, Thompson Sampling, or UCB. Our platform offers a plug-and-play solution, allowing users to select and apply various methods seamlessly through simple configuration. This enables easy switching between approaches without complex setups, providing flexibility and convenience. Figure 5 shows that users can freely select a combination of an ML model and a post-processing approach to predict the best content variants.

Figure 5: Users can freely configure a combination of an ML model and a post-processing approach to predict the best content variants.
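
One way to picture this plug-and-play configuration is a pair of registries keyed by name, with a small config selecting one entry from each. Everything in this sketch is hypothetical: the registry names, the config keys, the `dot_product` scorer standing in for a trained model, and the two deliberately trivial post-processors. Approaches such as SquareCB, Thompson Sampling, or UCB would be registered the same way.

```python
import random

MODELS = {}
POST_PROCESSORS = {}


def register(registry, name):
    """Decorator that adds a function to a registry under the given name."""
    def decorator(fn):
        registry[name] = fn
        return fn
    return decorator


@register(MODELS, "dot_product")
def dot_product_model(user_features, variant_features):
    # Hypothetical stand-in for a trained predictor such as XGBoost.
    return sum(u * v for u, v in zip(user_features, variant_features))


@register(POST_PROCESSORS, "greedy")
def greedy(scores, rng):
    # Pure exploitation: always pick the top-scoring variant.
    return max(range(len(scores)), key=scores.__getitem__)


@register(POST_PROCESSORS, "uniform_random")
def uniform_random(scores, rng):
    # Pure exploration: ignore scores and pick uniformly at random.
    return rng.randrange(len(scores))


def choose_variant(config, user_features, variants, rng):
    """Score every variant with the configured model, then post-process."""
    model = MODELS[config["model"]]
    post_process = POST_PROCESSORS[config["post_processing"]]
    scores = [model(user_features, v) for v in variants]
    return post_process(scores, rng)


config = {"model": "dot_product", "post_processing": "greedy"}
variants = [[0.1, 0.9], [0.8, 0.2]]  # hypothetical variant content features
picked = choose_variant(config, [1.0, 0.0], variants, random.Random(0))
```

Switching the post-processing approach then only requires changing the `post_processing` value in the config, not the calling code.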

The foundational framework established here facilitates the exploration of a broader range of feature inputs and model structures. The inclusion of image embeddings is being considered to enhance the model’s ability to optimize visual content in email communications. This would allow the model to tailor visual elements more effectively based on learned user preferences.

The team is also evaluating other model structures beyond XGBoost to leverage these new types of features fully. As part of this, we’ll also test alternative models such as neural networks and reinforcement learning algorithms to better integrate and use the diverse data inputs. Additionally, the ongoing work will simplify configuration management, making model iteration and campaign-level model configuration more efficient.

Finally, there’s an ongoing effort to develop new content-generation strategies informed by the insights gained from these advanced models. This will enable creating more personalized and effective content based on learned user preferences and behavioral patterns.

This blog explored how contextual bandit strategies, enhanced by Generative AI, optimize personalized CRM communications at Uber. We discussed the critical role of marketing content optimization and why this problem is well-suited to contextual bandits. Our approach considers user context and preferences for different communication content, represented by variant content features, to ultimately improve user engagement.

A key aspect of our approach is using embedding technology to generate content features for the XGBoost model. We leverage GPT embeddings to transform text components into high-dimensional vectors and then use PCA to generate features with reduced dimensionality, enabling effective machine learning predictions. Our platform supports two types of solutions to the contextual bandit problem: LinUCB provides campaign-level optimization, while XGBoost, coupled with SquareCB post-processing, incorporates necessary exploration to deliver a comprehensive content optimization solution.

This wouldn’t have been possible without the support of multiple teams at Uber. We’d like to thank Isabella Huang, Fang-Han Hsu, Ajit Pawar, and Bo Ling for helping get this work started. A big thanks to our tech partners from the Applied Scientist team (Yunwen Cai), the Creative Optimization Platform team (Navanit Tikare, Mohit Kaduskar), the Marketing Technology team (Emily Salshutz), and the Michelangelo team for their help in designing, building, and launching the system for Uber’s marketing campaigns.

Cover Photo Attribution: Generated by AI Generator | getimg.ai.