sqlalchemy_batch_inserts: a module for when you’re inserting thousands of rows and it’s slow

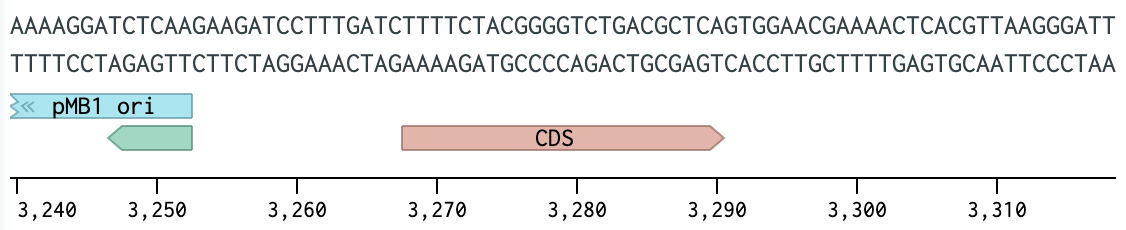

Users view and edit DNA sequences within Benchling. This worked great when they were creating a few sequences at a time, but as their science scaled up, our application couldn’t keep up.

Our users found that actions that insert lots of rows into the database were slow, such as:

- Importing 1,000 DNA sequences with 6 annotations each (7,000 rows)

- Validating integrity of 1,000 DNA sequences and writing the results (2,000 rows)

- Auto-annotating 1,000 DNA sequences with 100 annotations each (100,000 rows)

DNA sequence with 3 annotations in Benchling

Behind the scenes, we use Python and Postgres, with SQLAlchemy as our ORM. The core problem we faced: how could we improve the data-creation performance for our users, without rewriting our application to work around the ORM?

In this post, we’ll dive into how we dug into and fixed this issue. Overall, we found that, despite SQLAlchemy’s batching behavior for inserts, creating N models in SQLAlchemy still makes N round trips to the database if the models have an auto-incrementing primary key.

We’ve released benchling/sqlalchemy_batch_inserts as our solution. This module improves creating those N models to only require 2 round trips, without requiring any other changes to your code. You may find it useful if:

- You are using SQLAlchemy,

- You are using Postgres, and

- You care about the performance of creating many models at once

We’ll share more measurements below, but for inserting 1,000 models with a 3 ms round-trip latency to the database, we saw a 35x speedup and were able to complete the 1,000-row insert in 0.14 seconds (instead of 4.9 seconds before our solution).

Why are inserts slow?

We found the bottleneck to be hundreds of round trips between the application server and the database. To explain further, let’s dive into an example of creating DNA sequences.

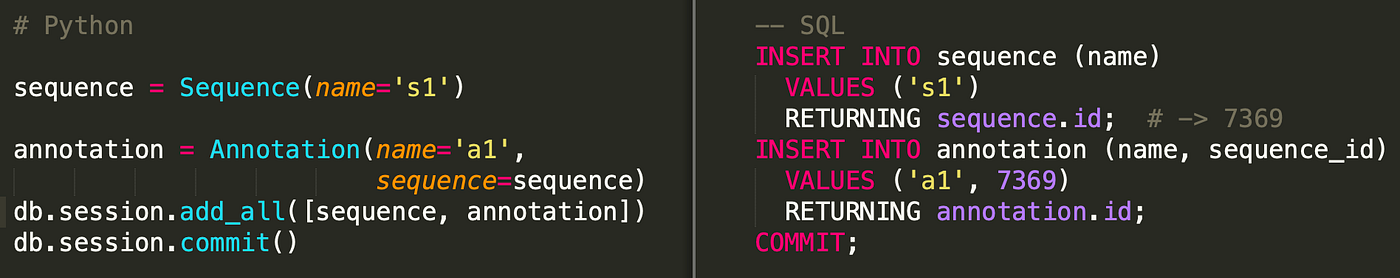

Creating 1 DNA sequence

Let’s say the DNA sequence table has an auto-incrementing primary key (ID) id and text name column.

Round trips: 1

On the commit, SQLAlchemy emits the INSERT. The database assigns the new row an id, and SQLAlchemy fetches it via RETURNING so it can populate the Sequence model’s id attribute. In other words, Sequence id is only set after commit talks to the database.

Creating 1 DNA sequence and annotation

Let’s say the annotation table, which stores markers on a DNA sequence, has an id, text name column, and many-to-one foreign key sequence_id.

Round trips: 2

The sequence needs to be inserted before the annotation to get the sequence_id back from the database.

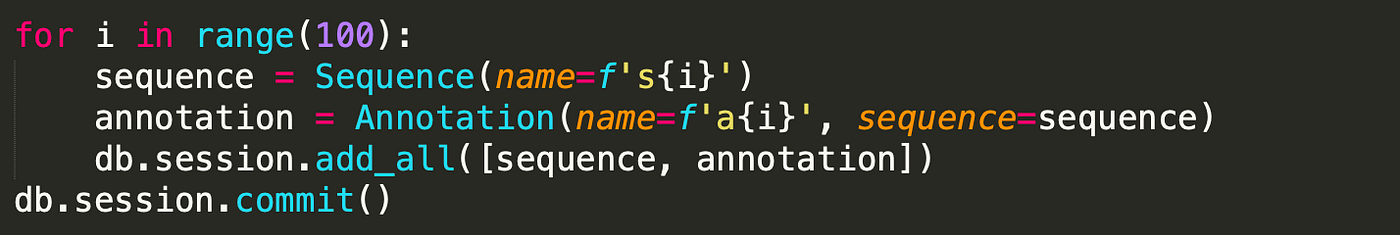

Creating 100 DNA sequences and annotations

Round trips: 200

SQLAlchemy inserts each sequence, then each annotation. We observed that creating N models in SQLAlchemy does N round trips to the database. In one test, we found that database calls took up 97% of the total time spent importing 100 sequences with 1 annotation each. So, we decided to focus on optimizing these round trips.

What have our tools already done to help with this?

Our SQLAlchemy setup uses psycopg2 to interact with Postgres. Daniele Varrazzo, the main psycopg2 maintainer, added execute_batch and execute_values in v2.7. Instead of N separate INSERT statements for N models, execute_values supports 1 INSERT statement with N parameters. On the right track!

Under the hood, this behaves like the DB-API’s [executemany](https://www.python.org/dev/peps/pep-0249/#id18) with even fewer round trips to the database. The implementation uses [mogrify](http://initd.org/psycopg/docs/cursor.html#cursor.mogrify), another psycopg2 extension, to pre-bind the arguments and send the result as a single command to the database.

The docs for execute are here and they report orders of magnitude better performance, including a 5,000% improvement during one test on a transatlantic network!

SQLAlchemy

Mike Bayer, the main SQLAlchemy maintainer, added support for psycopg2’s execute_batch in v1.2 and for execute_values in v1.3.7.

But even though we had enabled batch mode with execute_values on SQLAlchemy, the number of round trips for the 100 sequence and annotation case did not improve to 2 (batch insert all the sequences, then all the annotations). What happened?

We realized that SQLAlchemy only batches inserts if the primary keys are already defined on your model. In our example, SQLAlchemy needs to know the IDs of the sequences it’s batch inserting, so it can insert their annotations. However, as of psycopg2 v2.7*, execute_values doesn’t support getting returned values back. Therefore, SQLAlchemy only uses execute_values if everything being inserted already has an ID. Most of the time, we create objects without knowing their IDs (aka the database assigning them), so SQLAlchemy inserts them one at a time.

Solutions we considered

We suspected we weren’t the only ones to run into slow inserts, so we started looking at existing solutions.

SQLAlchemy bulk API

SQLAlchemy docs have a section dedicated to I’m inserting 400,000 rows with the ORM and it’s really slow! One option is to rewrite the Python code to call SQLAlchemy’s bulk API.

![Code: sequences = [Sequence(name=fs{i}) for i in range(100)] annotations = Annotation(name=fa{i}, sequence=sequences[i])

Round trips: 101

But this solution requires rewriting all of our application code.

Also, we initially expected the round trips to be 2, but because we needed to add return_defaults to populate sequence_id on each annotation, it increased to 101. (The docs similarly warn that return_defaults “greatly reduces the performance gains of the method overall.”)

Python-generated primary keys

Instead of having the database assigning the primary keys (IDs), we could have the Python code generate them up front. With defined primary keys, SQLAlchemy could leverage its batch-insert functionality.

One solution is to use UUIDs everywhere instead of auto-incrementing integer IDs. However, this solution also would have required migrating all of our existing models.

The problem we now faced: can we get the benefits of Python-generated IDs without changing all of our application code? Solving this would get us execute_values, 1 round trip instead of N, and the performance we wanted.

Database-generated, Python-assigned primary keys

Let’s do a quick recap of what we know:

- SQLAlchemy only batch inserts models with primary keys defined prior to insertion.

- We have models with auto-incrementing integer IDs, meaning that they’re generated and assigned by the database at insertion time.

Under the hood, Postgres uses sequences to back auto-incrementing integer IDs. Each sequence is a global counter that monotonically increments every time it’s advanced. In addition to being advanced automatically by auto-incrementing IDs, Postgres lets us call the nextval function directly to advance them. This means we can generate new IDs before doing any inserts! Thanks to Mike Bayer suggesting it on the mailing list.

In the DNA sequence example, sequence.id is backed by the sequence_id_seq sequence generator (sequenception). We can get the next ID with nextval:

This showed that we can use nextval to pre-fill integer IDs of our models before inserting them into the database.

Putting it all together

To summarize our investigation:

- SQLAlchemy batches inserts if primary keys are defined

- We have a mechanism of generating lots of new auto-incrementing primary keys at once, via database sequences

So, let’s rewrite the example Python code to pre-fill the IDs, using a generate_ids helper function that calls nextval to get the IDs:

![Code: sequences = [Sequence(name=fs{i}) for i in range(100)] annotations = Annotation(name=fa{i}, sequence=sequences[i])

Round trips: 4 (1 to generate_ids for each table, 1 to batch insert for each table)

Theoretical speedup: 50x (from 200 to 4 round trips)

Actual speedup: 10x (not all of the time spent is in round-trip latency)

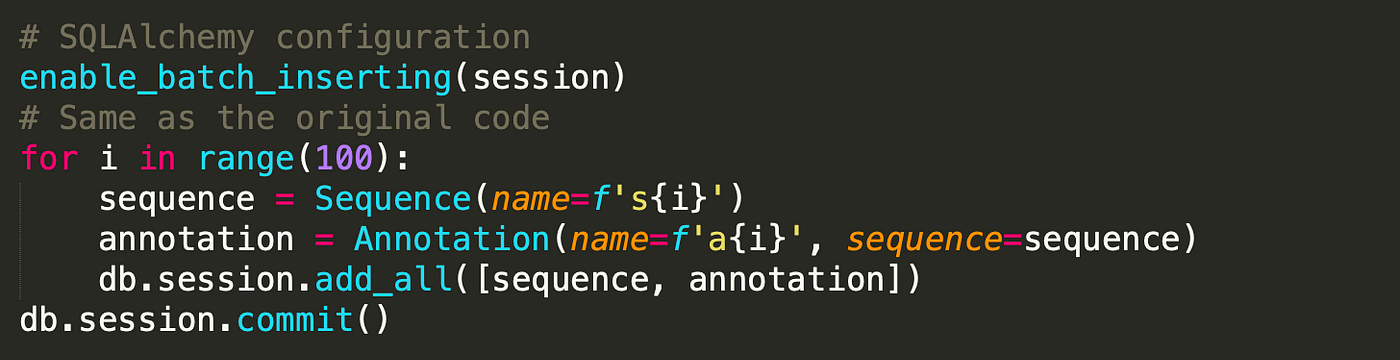

Without code changes: [sqlalchemy_batch_inserts](https://github.com/benchling/sqlalchemy_batch_inserts)

We abstracted away all of the code changes into a SQLAlchemy hook that runs before the commit. It automatically

- Groups new models by type. For each type, it pre-fills their IDs.

- Reorders how the models will be inserted, so models of the same type are inserted together for maximum batching.

That way, the number of round trips is 2 * number of types.

After another chat on the mailing list, we implemented this exact strategy in [sqlalchemy_batch_inserts](https://github.com/benchling/sqlalchemy_batch_inserts).

Round trips: 4

Challenges

We ran into some complications while building this module:

- A lot of care was needed in dealing with joined table inheritance.

- SQLAlchemy guarantees that models of the same type will be inserted in the order that they were created in the session. We needed to match that guarantee.

The code is fairly defensive about what it tries to optimize, so the worst result is unimproved performance. There are lots of details and documentation in the implementation if you are interested!

The repo also has a Dockerfile and instructions for easy testing. We use [pumba](https://github.com/alexei-led/pumba) to simulate large network latencies.

Performance tests

The SQLAlchemy docs have a performance test that inserts 100,000 rows into a table using different methods. We reran the same test using Python v3.5.3 within our repo’s Dockerfile and tested with batch mode enabled:

- SQLAlchemy ORM: 35.5 seconds

-

With batch mode and

**sqlalchemy_batch_inserts**enabled: 7.3 seconds - SQLAlchemy ORM assigned primary keys: 7.3 seconds

- SQLAlchemy ORM

bulk_save_objects: 3.0 seconds - SQLAlchemy ORM

bulk_insert_mappings: 1.5 seconds - SQLAlchemy Core: 1.2 seconds

Then, we retested with 3 ms latency and 1,000 rows:

- SQLAlchemy ORM: 4.9 seconds

-

With batch mode and

**sqlalchemy_batch_inserts**enabled: 0.14 seconds - SQLAlchemy ORM assigned primary keys: 0.13 seconds

- SQLAlchemy ORM

bulk_save_objects: 0.09 seconds - SQLAlchemy ORM

bulk_insert_mappings: 0.07 seconds - SQLAlchemy Core: 0.07 seconds

Theoretical speedup: 500x (from 1000 to 2 round trips)

Actual speedup: 35x

These numbers showed us that sqlalchemy_batch_inserts speeds up inserting lots of rows, without requiring code changes. The times are nearly the same as the ORM with assigned primary keys and they’re comparable to the bulk API (1.5-2x slower, rather than the original 70x slower, for the 3 ms tests).

The actual speedup falls short of theoretical, which reveals that not all of the time spent is in round-trip latency. The speedups also scale with batch size and latency. We chose 3 ms as an approximate inter-AZ latency within AWS, but in the real world it could vary (could be up to 90 ms for transatlantic).

The performance also approaches the other solutions like the bulk API. Plus, this test only shows 1 table — sqlalchemy_batch_inserts also works across multiple tables, like the sequence and annotation example or joined table inheritance.

Real-world usage

At Benchling, we’ve been running sqlalchemy_batch_inserts in production for 2 years. In that time, we haven’t seen any bugs because of the extension, and our developers haven’t needed to make any code changes to be compatible with it. The 3 actions from the top are no longer performance issues, and generally we no longer consider insert speeds to be a major bottleneck (but of course there may be others!).

We’ve seen a huge payoff for this narrow slice of tooling, and we hope that others will find it as useful and pain-free as we have.

Our docs explain how to install sqlalchemy_batch_inserts. It boils down to adding a line like this to your SQLAlchemy setup:

* P.S. execute_values in psycopg2 v2.8 supports getting returned values back, so it’s possible that SQLAlchemy may support batch inserting these models (with an auto-incrementing primary key) in the future.

Acknowledgments

- Daniele Varrazzo’s

psycopg2support forexecute_values - Mike Bayer’s SQLAlchemy support for

execute_values, and helpful advice on the mailing list -

Raymond for coauthoring this and creating the

sqlalchemy_batch_insertspackage - Isaac and Vineet for reading drafts of this

If you’re interested in working on problems like this, we’re hiring!